|

Scooped by

Complexity Digest

October 21, 2025 10:53 AM

|

Leila Hedayatifar, Alfredo J. Morales, Dominic E. Saadi, Rachel A. Rigg, Olha Buchel, Amir Akhavan, Egemen Sert, Aabir Abubaker Kar, Mehrzad Sasanpour, Irving R. Epstein & Yaneer Bar-Yam Scientific Reports volume 15, Article number: 33854 (2025) Predicting dynamic behaviors is one of the goals of science in general as well as essential to many specific applications of human knowledge to real world systems. Here, we introduce an analytic approach using the sigmoid growth curve to model the dynamics of individual entities within complex systems. Despite the challenges posed by nonlinearity and unpredictability, we demonstrate that sigmoid-like trajectories frequently emerge in systems where entities undergo phases of acceleration and deceleration of growth. Through case studies of (1) customer purchasing behavior and (2) U.S. legislation adoption, we show that these patterns can be identified and used to predict an entity’s ultimate state well in advance of reaching it. This provides valuable insights for business leaders and policymakers. Moreover, our characterization of individual component dynamics offers a framework to reveal the aggregate behavior of the entire system. In addition, our classification of entity lifepaths contributes to understanding system-level structure by revealing how individual-level dynamics scale to aggregate behaviors. This study offers a practical modeling framework that captures commonly observed growth dynamics in diverse complex systems and supports predictive decision-making. Read the full article at: www.nature.com
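
To make the idea of predicting an entity's ultimate state from its early trajectory concrete, here is a minimal sketch. It is not the authors' procedure: it assumes a noise-free logistic curve and uses the textbook three-point estimate of the ceiling from equally spaced observations. The function names and parameter values are hypothetical.

```python
import math

def logistic(t, ceiling, rate, midpoint):
    """Logistic (sigmoid) growth curve with the given ceiling."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))

def estimate_ceiling(y0, y1, y2):
    """Three-point ceiling estimate for equally spaced logistic samples.

    For a logistic curve, 1/y minus 1/ceiling is geometric in time,
    which gives a closed form for the ceiling.
    """
    denom = y1 * y1 - y0 * y2
    if denom == 0:
        raise ValueError("points do not determine a logistic ceiling")
    return (y1 * y1 * (y0 + y2) - 2.0 * y0 * y1 * y2) / denom

# Hypothetical entity: true ceiling 100, observed only long before the midpoint.
true_ceiling, rate, midpoint = 100.0, 0.8, 10.0
early = [logistic(t, true_ceiling, rate, midpoint) for t in (2.0, 4.0, 6.0)]
estimated_ceiling = estimate_ceiling(*early)
```

With real, noisy data the closed form is fragile, and a least-squares fit over many points would be the more usual choice.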

|

Scooped by

Complexity Digest

October 20, 2025 12:11 PM

|

Chao Duan & Adilson E. Motter

Nature Communications volume 16, Article number: 7242 (2025) Averting catastrophic global warming requires decisive action to decarbonize key sectors. Vehicle electrification, alongside renewable energy integration, is a long-term strategy toward zero carbon emissions. However, transitioning to fully renewable electricity may take decades—during which electric vehicles may still rely on carbon-intensive electricity. We analyze the critical role of the transmission network in enabling or constraining emissions reduction from U.S. vehicle electrification. Our models reveal that the available transmission capacity severely limits potential CO2 emissions reduction. With adequate transmission, full electrification could nearly eliminate vehicle operational CO2 emissions once renewable generation reaches the existing nonrenewable capacity. In contrast, the current grid would support only a fraction of that benefit. Achieving the full emissions reduction potential of vehicle electrification during this transition will require a moderate but targeted increase in transmission capacity. Our findings underscore the pressing need to enhance transmission infrastructure to unlock the climate benefits of large-scale electrification and renewable integration. Read the full article at: www.nature.com

|

Scooped by

Complexity Digest

October 19, 2025 7:31 PM

|

compiled and edited by Ricard Solé, Chris Kempes and Susan Stepney

What is life, and how does it begin? This theme issue explores one of science’s deepest questions: how life can emerge from non-living matter. Researchers from many fields — from physics and chemistry to biology and artificial life — are working to uncover the basic principles that make life possible. Key themes include the role of energy and information in early cells, the plausibility of alternative forms of life, and efforts to recreate life-like systems in the lab. By bringing together diverse perspectives, this issue offers a fresh look at both the limits and possibilities for how life may arise, on Earth and beyond. Read the full articles at: royalsocietypublishing.org

|

Scooped by

Complexity Digest

October 18, 2025 9:37 AM

|

Petter Holme, Milena Tsvetkova We review the historical development and current trends of artificially intelligent agents (agentic AI) in the social and behavioral sciences: from the first programmable computers, and social simulations soon thereafter, to today's experiments with large language models. This overview emphasizes the role of AI in the scientific process and the changes brought about, both through technological advancements and the broader evolution of science from around 1950 to the present. Some of the specific points we cover include: the challenges of presenting the first social simulation studies to a world unaware of computers, the rise of social systems science, intelligent game theoretic agents, the age of big data and the epistemic upheaval in its wake, and the current enthusiasm around applications of generative AI, and many other topics. A pervasive theme is how deeply entwined we are with the technologies we use to understand ourselves. Read the full article at: arxiv.org

|

Suggested by

Christoph Riedl

October 17, 2025 3:19 PM

|

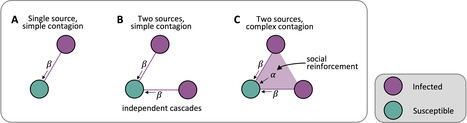

Jaemin Lee, David Lazer, Christoph Riedl Sociological Science Complex contagion rests on the idea that individuals are more likely to adopt a behavior if they experience social reinforcement from multiple sources. We develop a test for complex contagion, conceptualized as social reinforcement, and then use it to examine whether empirical data from a country-scale randomized controlled viral marketing field experiment show evidence of complex contagion. The experiment uses a peer encouragement design in which individuals were randomly exposed to either one or two friends who were encouraged to share a coupon for a mobile data product. Using three different analytical methods to address the empirical challenges of causal identification, we provide strong support for complex contagion: the contagion process cannot be understood as independent cascades but rather as a process in which signals from multiple sources amplify each other through synergistic interdependence. We also find social network embeddedness is an important structural moderator that shapes the effectiveness of social reinforcement.

https://sociologicalscience.com/articles-v12-28-685/

|

Scooped by

Complexity Digest

October 15, 2025 3:35 PM

|

Georgii, Karelin and Nakajima, Kohei and Soto-Astorga, Enrique F. and Carr, Earnest and James, Mark and Froese, Tom Synergy between stochastic noise and deterministic chaos is a canonical route to unpredictable behavior in nonlinear systems. This letter analyzes the origins and consequences of indeterminism that has recently appeared in leading Large Language Models (LLMs), drawing connections to open-endedness, precariousness, artificial life, and the problem of meaning. Computational indeterminism arises in LLMs from a combination of the non-associative nature of floating-point arithmetic and the arbitrary order of execution in large-scale parallel software-hardware systems. This low-level numerical noise is then amplified by the chaotic dynamics of deep neural networks, producing unpredictable macroscopic behavior. We propose that irrepeatable dynamics in computational processes lend them a mortal nature.

Irrepeatability might be recognized as a potential basis for genuinely novel behavior and agentive artificial intelligence and could be explicitly incorporated into system designs.

The presence of beneficial intrinsic unpredictability can then be used to evaluate when artificial computational systems exhibit lifelike autonomy. Read the full article at: philsci-archive.pitt.edu
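
The non-associativity of floating-point addition mentioned above can be seen in a few lines. This is a minimal sketch in plain Python (IEEE 754 doubles), not a reproduction of LLM inference: the same three numbers are summed in two different orders, as could happen when a parallel reduction runs in an arbitrary order.

```python
def sum_in_order(values):
    """Accumulate left to right, as a sequential reduction would."""
    total = 0.0
    for v in values:
        total += v
    return total

# Same multiset of numbers, two execution orders.
order_a = sum_in_order([1e16, 1.0, -1e16])   # the 1.0 is absorbed by 1e16
order_b = sum_in_order([1e16, -1e16, 1.0])   # the large terms cancel first

# Grouping alone also matters for ordinary decimals.
left_grouped = (0.1 + 0.2) + 0.3
right_grouped = 0.1 + (0.2 + 0.3)
```

Here `order_a` is `0.0` while `order_b` is `1.0`, and the two groupings of 0.1, 0.2, 0.3 differ in the last bit.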

|

Suggested by

Christoph Riedl

October 13, 2025 5:21 PM

|

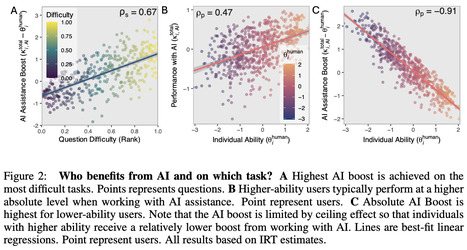

Christoph Riedl, Ben Weidmann We introduce a novel Bayesian Item Response Theory framework to quantify human–AI synergy, separating individual and collaborative ability while controlling for task difficulty in interactive settings. Unlike standard static benchmarks, our approach models human–AI performance as a joint process, capturing both user-specific factors and moment-to-moment fluctuations. We validate the framework by applying it to human–AI benchmark data (n=667) and find significant synergy. We demonstrate that collaboration ability is distinct from individual problem-solving ability. Users better able to infer and adapt to others’ perspectives achieve superior collaborative performance with AI–but not when working alone. Moreover, moment-to-moment fluctuations in perspective taking influence AI response quality, highlighting the role of dynamic user factors in collaboration. By introducing a principled framework to analyze data from human-AI collaboration, interactive benchmarks can better complement current single-task benchmarks and crowd-assessment methods. This work informs the design and training of language models that transcend static prompt benchmarks to achieve adaptive, socially aware collaboration with diverse and dynamic human partners. https://osf.io/preprints/psyarxiv/vbkmt_v1

|

Scooped by

Complexity Digest

September 27, 2025 8:22 PM

|

Martin Hendrick, Andrea Rinaldo, and Gabriele Manoli PNAS 122 (33) e2501224122 Cities can be viewed as living organisms and their metabolism as the set of processes controlling their evolving structure and function. Urban population, transport networks, and all anthropogenic activities have been proposed to mimic body mass, vascular systems, and metabolic rates of living organisms. This analogy is supported by the emergence of seemingly universal scaling laws linking city-scale quantities to population size. However, such scaling relations critically depend on the choices of city boundaries and neglect intraurban variations of urban properties. By capitalizing on today’s availability of high-resolution data, findings emerge on the generality of small-scale covariations in city characteristics and their link to city-wide averages, thus opening broad avenues to understand and design future urban environments. Read the full article at: www.pnas.org

|

Scooped by

Complexity Digest

September 27, 2025 3:07 PM

|

Sergey Gavrilets and Paul Seabright PNAS 122 (32) e2504339122 Beliefs about whether the world is a zero-sum or a positive-sum environment vary across individuals and cultures, and affect people’s willingness to work, invest, and cooperate with others. We model interaction between individuals who are biased toward believing the environment is zero-sum, and those biased toward believing it is positive-sum. Beliefs spread through natural and cultural selection if they lead individuals to have higher utilities. If individuals are matched randomly, selection leads to the more accurate beliefs driving out the less accurate. Nonrandom matching and conformity biases can favor the survival of inaccurate beliefs. Cultural authorities can profit from creating enclaves of like-minded individuals whose higher bias drives out the more accurate beliefs of others. Read the full article at: www.pnas.org

|

Scooped by

Complexity Digest

September 26, 2025 11:33 PM

|

Paul B. Rainey and Michael E. Hochberg PNAS 122 (37) e2509122122 Artificial intelligence (AI)—broadly defined as the capacity of engineered systems to perform tasks that would require intelligence if done by humans—is increasingly embedded in the infrastructure of human life. From personalized recommendation systems to large-scale decision-making frameworks, AI shapes what humans see, choose, believe, and do (1, 2). Much of the current concern about AI centers on its understanding, safety, and alignment with human values (3–5). But alongside these immediate challenges lies a broader, more speculative, and potentially more profound question: could the deepening interdependence between humans and AI give rise to a new kind of evolutionary individual? We argue that as interdependencies grow, humans and AI could come to function not merely as interacting agents, but as an integrated evolutionary individual subject to selection at the collective level. Read the full article at: www.pnas.org

|

Scooped by

Complexity Digest

September 26, 2025 3:25 PM

|

IXANDRA ACHITOUV Advances in Complex Systems Vol. 28, No. 06, 2540005 (2025) Correlations of financial stock returns have been studied through the prism of random matrix theory to distinguish the signal from the “noise”. Eigenvalues of the correlation matrix that lie above the rescaled Marchenko–Pastur distribution can be interpreted as collective-mode behavior, while the modes below it are usually considered noise. In this analysis, we use complex network analysis to simulate the “noise” and the “market” component of the return correlations, by introducing some meaningful correlations in simulated geometric Brownian motion for the stocks. We find that the returns correlation matrix is dominated by stocks with high eigenvector centrality and clustering found in the network. We then use simulated “market” random walks to build an optimal portfolio and find that the overall return performs better than using the historical mean-variance data, by up to 50% on short time scales. Read the full article at: www.worldscientific.com
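
As a rough illustration of the eigenvalue filter described above, the sketch below computes the standard Marchenko–Pastur edges for a correlation matrix of N assets observed over T periods (with N ≤ T) and splits a list of eigenvalues at the upper edge. The paper refers to a rescaled distribution, and that rescaling is not reproduced here. The function names and the example eigenvalues are hypothetical.

```python
import math

def marchenko_pastur_bounds(n_assets, n_obs, sigma2=1.0):
    """Edges of the Marchenko-Pastur bulk for q = N/T <= 1."""
    q = n_assets / n_obs
    lower = sigma2 * (1.0 - math.sqrt(q)) ** 2
    upper = sigma2 * (1.0 + math.sqrt(q)) ** 2
    return lower, upper

def split_eigenvalues(eigenvalues, n_assets, n_obs):
    """Label eigenvalues above the upper edge as candidate collective modes."""
    _, upper = marchenko_pastur_bounds(n_assets, n_obs)
    signal = [ev for ev in eigenvalues if ev > upper]
    noise = [ev for ev in eigenvalues if ev <= upper]
    return signal, noise

# Hypothetical example: 100 stocks, 400 return observations.
lower, upper = marchenko_pastur_bounds(100, 400)
signal, noise = split_eigenvalues([0.4, 1.1, 2.0, 7.5], 100, 400)
```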

|

Scooped by

Complexity Digest

September 23, 2025 4:41 PM

|

Philip LaPorte, Shiyi Wang, Lenz Pracher, Saptarshi Pal, Martin Nowak In biology, there is often a tension between what is good for the individual and what is good for the population (1–6). Cooperation benefits the community, while defection tempts the individual to garner short-term gains. The theory of repeated games specifies that there is a continuum of Nash equilibria which ranges from fully defective to fully cooperative (7,8). The mechanism of direct reciprocity, which relies on repeated interactions, therefore only stipulates that evolution of cooperation is possible, but whether or not cooperation can be established, and for which parameters, depends on the details of the underlying process of mutation and selection (9–18). Many well-known evolutionary processes achieve cooperation only in restricted settings. In the case of the donation game (5,6), for example, high benefit-to-cost ratios are often needed for selection to favor cooperation (19–22). Here we study a universe of two-player cooperative dilemmas (23), which includes the prisoner’s dilemma (24–27), snowdrift (28–30), stag-hunt (31) and harmony game. Upon those games we apply a universe of evolutionary processes. Among those processes we find a continuous set which has the feature that it achieves maximum payoff for all cooperative dilemmas under direct reciprocity. This set is characterized by a surprisingly simple property which we call parity: competing strategies are evaluated symmetrically. Read the full article at: www.researchsquare.com
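
For readers unfamiliar with the donation game, the following sketch plays a repeated version between two simple strategies. It only illustrates the payoff structure (a cooperator pays a cost so that the partner receives a benefit) and direct reciprocity. It does not implement the evolutionary processes or the parity property studied in the paper. The strategy names, round count, and payoff values are illustrative assumptions.

```python
def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the partner's previous move."""
    return other_history[-1] if other_history else "C"

def always_defect(own_history, other_history):
    return "D"

def play(strategy_a, strategy_b, rounds, benefit, cost):
    """Repeated donation game: cooperating costs `cost`, gives partner `benefit`."""
    hist_a, hist_b = [], []
    pay_a = pay_b = 0.0
    for _ in range(rounds):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        if move_a == "C":
            pay_a -= cost
            pay_b += benefit
        if move_b == "C":
            pay_b -= cost
            pay_a += benefit
        hist_a.append(move_a)
        hist_b.append(move_b)
    return pay_a, pay_b

mutual = play(tit_for_tat, tit_for_tat, rounds=10, benefit=3.0, cost=1.0)
exploited = play(tit_for_tat, always_defect, rounds=10, benefit=3.0, cost=1.0)
```

Two reciprocators each earn `rounds * (benefit - cost)`, while a defector gains only the single benefit from the first round.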

|

Scooped by

Complexity Digest

September 12, 2025 3:36 PM

|

Honeybees are renowned for their skills in building intricate and adaptive combs that display notable variation in cell size. However, the extent of their adaptability in constructing honeycombs with varied cell sizes has not been thoroughly investigated. We use 3D-printing and X-ray microscopy to quantify honeybees’ capacity in adjusting the comb to different initial conditions. Our findings suggest three distinct comb construction modes in response to foundations with varying sizes of 3D-printed cells. For smaller foundations, bees occasionally merge adjacent cells to compensate for the reduced space. However, for larger cell sizes, the hive uses adaptive strategies such as tilting for foundations with cells up to twice the reference size and layering for cells that are three times larger than the reference cell. Our findings shed light on honeybees’ adaptive comb construction abilities, significant for the biology of self-organized collective behavior, as well as for bio-inspired engineered systems.

Gharooni-Fard G, Kavaraganahalli Prasanna C, Peleg O, López Jiménez F (2025) Honeybees adapt to a range of comb cell sizes by merging, tilting, and layering their construction. PLoS Biol 23(8): e3003253. Read the full article at: journals.plos.org

|

|

Scooped by

Complexity Digest

October 20, 2025 12:56 PM

|

J. A. Scott Kelso The European Physical Journal Special Topics This tribute to Hermann Haken, the great theoretical physicist, explores the idea—based on a reconsideration of the experiments that led to the HKB model—that intentions (an emergent ‘mental force’) are hidden~exposed in order parameter fluctuations that arise due to special boundary conditions or rate-independent constraints on the basic coordination dynamics of human brain and behavior. Read the full article at: link.springer.com

|

Scooped by

Complexity Digest

October 20, 2025 10:11 AM

|

P.G. Tello, S. Kauffman BioSystems Volume 258, December 2025, 105618 This work revisits the Maxwell Demon paradigm to explore its implications for evolutionary dynamics from an information-theoretic perspective. By removing the Demon as an intentional agent, we reinterpret the emergence of order as a natural outcome of physical laws combined with stochastic processes. Using models inspired by information theory, such as binary and Z-channels, we show how random fluctuations (e.g., stochastic resonance) can decrease entropy, generate mutual information, and induce non-ergodicity. These dynamics highlight the role of memory and correlation as emergent features of purely physical interactions without recourse to purposeful agency. In this framework, evolutionary exaptations, rather than sole adaptations, emerge as key drivers of biological evolution. Finally, we connect our analysis with recent contributions on agency and memory, underscoring the relevance of informational concepts for understanding the purposeless yet structured dynamics of evolutionary processes. Read the full article at: www.sciencedirect.com
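
The Z-channel named above has a simple closed-form mutual information, sketched here under the usual textbook convention: input 0 is always received as 0, and input 1 is received as 0 with probability `flip_prob`. This convention and the function names are assumptions for illustration. The paper's own models may be parameterized differently.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

def z_channel_mutual_information(prior_one, flip_prob):
    """I(X;Y) = H(Y) - H(Y|X) for a Z-channel with P(X=1) = prior_one."""
    p_y1 = prior_one * (1.0 - flip_prob)   # only a transmitted 1 can yield a 1
    return binary_entropy(p_y1) - prior_one * binary_entropy(flip_prob)

noiseless = z_channel_mutual_information(0.5, 0.0)  # perfect channel
useless = z_channel_mutual_information(0.5, 1.0)    # every 1 becomes 0
partial = z_channel_mutual_information(0.5, 0.5)
```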

|

Scooped by

Complexity Digest

October 18, 2025 3:30 PM

|

bel Jansma, Erik Hoel One of the reasons complex systems are complex is because they have multiscale structure. How does this multiscale structure come about? We argue that it reflects an emergent hierarchy of scales that contribute to the system's causal workings. An example is how a computer can be described at the level of its hardware circuitry but also its software. But we show that many systems, even simple ones, have such an emergent hierarchy, built from a small subset of all their possible scales of description. Formally, we extend the theory of causal emergence (2.0) so as to analyze the causal contributions across the full multiscale structure of a system rather than just over a single path that traverses the system's scales. Our methods reveal that systems can be classified as being causally top-heavy or bottom-heavy, or their emergent hierarchies can be highly complex. We argue that this provides a more specific notion of scale-freeness (here, when causation is spread equally across the scales of a system) than the standard network science terminology. More broadly, we provide the mathematical tools to quantify this complexity and provide diverse examples of the taxonomy of emergent hierarchies. Finally, we demonstrate the ability to engineer not just degree of emergence in a system, but how that emergence is distributed across the multiscale structure. Read the full article at: arxiv.org

|

Suggested by

Christoph Riedl

October 17, 2025 3:22 PM

|

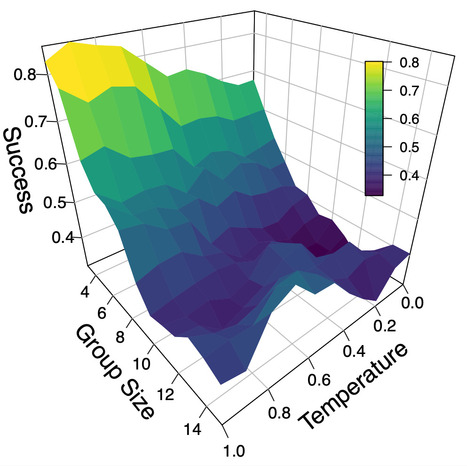

Christoph Riedl When are multi-agent LLM systems merely a collection of individual agents versus an integrated collective with higher-order structure? We introduce an information-theoretic framework to test -- in a purely data-driven way -- whether multi-agent systems show signs of higher-order structure. This information decomposition lets us measure whether dynamical emergence is present in multi-agent LLM systems, localize it, and distinguish spurious temporal coupling from performance-relevant cross-agent synergy. We implement both a practical criterion and an emergence capacity criterion operationalized as partial information decomposition of time-delayed mutual information (TDMI). We apply our framework to experiments using a simple guessing game without direct agent communication and only minimal group-level feedback with three randomized interventions. Groups in the control condition exhibit strong temporal synergy but only little coordinated alignment across agents. Assigning a persona to each agent introduces stable identity-linked differentiation. Combining personas with an instruction to “think about what other agents might do” shows identity-linked differentiation and goal-directed complementarity across agents. Taken together, our framework establishes that multi-agent LLM systems can be steered with prompt design from mere aggregates to higher-order collectives. Our results are robust across emergence measures and entropy estimators, and not explained by coordination-free baselines or temporal dynamics alone. Without attributing human-like cognition to the agents, the patterns of interaction we observe mirror well-established principles of collective intelligence in human groups: effective performance requires both alignment on shared objectives and complementary contributions across members. https://arxiv.org/abs/2510.05174
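
Time-delayed mutual information, the quantity the framework decomposes, can be estimated for a single discrete series with a simple plug-in estimator. The sketch below shows only that base quantity. It does not perform the partial information decomposition, and the estimator choice and function name are assumptions rather than the paper's implementation.

```python
import math
from collections import Counter

def time_delayed_mutual_information(series, lag):
    """Plug-in estimate (in bits) of I(X_t ; X_{t+lag}) for a discrete series."""
    if lag < 1 or lag >= len(series):
        raise ValueError("lag must satisfy 1 <= lag < len(series)")
    pairs = list(zip(series[:-lag], series[lag:]))
    n = len(pairs)
    joint = Counter(pairs)
    past = Counter(a for a, _ in pairs)
    future = Counter(b for _, b in pairs)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p_ab / (p_a * p_b) written with raw counts
        mi += p_ab * math.log2(p_ab * n * n / (past[a] * future[b]))
    return mi

alternating = [i % 2 for i in range(1000)]   # past fully determines future
constant = [0] * 1000                        # nothing to predict
tdmi_alternating = time_delayed_mutual_information(alternating, lag=1)
tdmi_constant = time_delayed_mutual_information(constant, lag=1)
```

Plug-in estimates are biased upward on short series, which is one reason to check robustness across entropy estimators.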

|

Scooped by

Complexity Digest

October 15, 2025 3:38 PM

|

R. Maria del Rio-Chanona, Ekkehard Ernst, Rossana Merola, Daniel Samaan, Ole Teutloff Generative AI is altering work processes, task composition, and organizational design, yet its effects on employment and the macroeconomy remain unresolved. In this review, we synthesize theory and empirical evidence at three levels. First, we trace the evolution from aggregate production frameworks to task- and expertise-based models. Second, we quantitatively review and compare (ex-ante) AI exposure measures of occupations from multiple studies and find convergence towards high-wage jobs. Third, we assemble ex-post evidence of AI's impact on employment from randomized controlled trials (RCTs), field experiments, and digital trace data (e.g., online labor platforms, software repositories), complemented by partial coverage of surveys. Across the reviewed studies, productivity gains are sizable but context-dependent: on the order of 20 to 60 percent in controlled RCTs, and 15 to 30 percent in field experiments. Novice workers tend to benefit more from LLMs in simple tasks. Across complex tasks, evidence is mixed on whether low or high-skilled workers benefit more. Digital trace data show substitution between humans and machines in writing and translation alongside rising demand for AI, with mild evidence of declining demand for novice workers. A more substantial decrease in demand for novice jobs across AI-complementary work emerges from recent studies using surveys, platform payment records, or administrative data. Research gaps include the focus on simple tasks in experiments, the limited diversity of LLMs studied, and technology-centric AI exposure measures that overlook adoption dynamics and whether exposure translates into substitution, productivity gains, or the erosion or growth of expertise. Read the full article at: arxiv.org

|

Scooped by

Complexity Digest

October 13, 2025 10:34 PM

|

Eleonora Vitanza, Chiara Mocenni and Pietro De Lellis In this paper, we introduce a novel Markovian model that describes the impact of egosyntonicity on emotion dynamics. We focus on the dominant current emotion and describe the time evolution of its valence, modelled as a binary variable, where 0 and 1 correspond to negative and positive valences, respectively. In particular, the one-step transition probabilities will depend on the external events happening in daily life, the attention the individual devotes to such events, and the egosyntonicity, modelled as the agreement between the current valence and the internal mood of the individual. A steady-state analysis shows that, depending on the model parameters, four classes of individuals can be identified. Two classes are somewhat expected, corresponding to individuals spending more (less) time in egosyntonicity experiencing positive valences for longer (shorter) times. Surprisingly, two further classes emerge: the self-deluded individuals, where egosyntonicity is associated with a prevalence of negative valences, and the troubled happy individuals, where egodystonicity is associated with positive valences. These findings are aligned with the literature showing that, even if egosyntonicity typically has a positive impact in the short term, it may not always be beneficial in the long run. Read the full article at: royalsocietypublishing.org
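
A steady-state analysis of a binary-valence Markov chain reduces to a one-line formula, sketched below. In the paper the transition probabilities depend on events, attention, and egosyntonicity. Here they are collapsed into two fixed, hypothetical numbers, so this shows only the generic two-state calculation, not the authors' model.

```python
def stationary_positive_share(p_neg_to_pos, p_pos_to_neg):
    """Long-run fraction of time a two-state chain spends in state 1 (positive)."""
    return p_neg_to_pos / (p_neg_to_pos + p_pos_to_neg)

def iterate_positive_probability(p_neg_to_pos, p_pos_to_neg, start=0.5, steps=200):
    """Propagate P(valence = 1) forward one step at a time."""
    p = start
    for _ in range(steps):
        p = p * (1.0 - p_pos_to_neg) + (1.0 - p) * p_neg_to_pos
    return p

# Hypothetical individual: recovers from negative valence faster than losing positive.
closed_form = stationary_positive_share(0.3, 0.1)
simulated = iterate_positive_probability(0.3, 0.1)
```

The iteration converges to the closed-form value because the chain's second eigenvalue, 1 - 0.3 - 0.1 = 0.6, has magnitude below one.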

|

Scooped by

Complexity Digest

September 28, 2025 3:18 PM

|

Laurent Hébert-Dufresne, Juniper Lovato, Giulio Burgio, James P. Gleeson, S. Redner, and P. L. Krapivsky Phys. Rev. Lett. 135, 087401 Models of how things spread often assume that transmission mechanisms are fixed over time. However, social contagions—the spread of ideas, beliefs, innovations—can lose or gain in momentum as they spread: ideas can get reinforced, beliefs strengthened, products refined. We study the impacts of such self-reinforcement mechanisms in cascade dynamics. We use different mathematical modeling techniques to capture the recursive, yet changing nature of the process. We find a critical regime with a range of power-law cascade size distributions with nonuniversal scaling exponents. This regime clashes with classic models, where criticality requires fine-tuning at a precise critical point. Self-reinforced cascades produce critical-like behavior over a wide range of parameters, which may help explain the ubiquity of power-law distributions in empirical social data. Read the full article at: link.aps.org

|

Scooped by

Complexity Digest

September 27, 2025 3:21 PM

|

Akarsh Kumar, Chris Lu, Louis Kirsch, Yujin Tang, Kenneth O. Stanley, Phillip Isola, David Ha Artificial Life (2025) 31 (3): 368–396. With the recent Nobel Prize awarded for radical advances in protein discovery, foundation models (FMs) for exploring large combinatorial spaces promise to revolutionize many scientific fields. Artificial Life (ALife) has not yet integrated FMs, thus presenting a major opportunity for the field to alleviate the historical burden of relying chiefly on manual design and trial and error to discover the configurations of lifelike simulations. This article presents, for the first time, a successful realization of this opportunity using vision-language FMs. The proposed approach, called automated search for Artificial Life (ASAL), (a) finds simulations that produce target phenomena, (b) discovers simulations that generate temporally open-ended novelty, and (c) illuminates an entire space of interestingly diverse simulations. Because of the generality of FMs, ASAL works effectively across a diverse range of ALife substrates, including Boids, Particle Life, the Game of Life, Lenia, and neural cellular automata. A major result highlighting the potential of this technique is the discovery of previously unseen Lenia and Boids life-forms, as well as cellular automata that are open-ended like Conway’s Game of Life. Additionally, the use of FMs allows for the quantification of previously qualitative phenomena in a human-aligned way. This new paradigm promises to accelerate ALife research beyond what is possible through human ingenuity alone. Read the full article at: direct.mit.edu

|

Scooped by

Complexity Digest

September 27, 2025 12:55 PM

|

Pedro A M Mediano, Fernando E Rosas, Andrea I Luppi, Robin L Carhart-Harris, Daniel Bor, Anil K Seth, and Adam B Barrett PNAS 122 (39) e2423297122 Complex systems, from the human brain to the global economy, are made of multiple elements that interact dynamically, often giving rise to collective behaviors that are not readily predictable from the “sum of the parts.” To advance our understanding of how this can occur, here we present a mathematical framework to disentangle and quantify different “modes” of information storage, transfer, and integration in complex systems. This framework reveals previously unreported collective behavior phenomena in experimental data across scientific fields, and provides principles to classify and formally relate diverse measures of dynamical complexity and information processing. Read the full article at: www.pnas.org

|

Scooped by

Complexity Digest

September 26, 2025 5:25 PM

|

GIOVANNI MAURO, NICOLA PEDRESCHI, RENAUD LAMBIOTTE, and LUCA PAPPALARDO Advances in Complex Systems Vol. 28, No. 06, 2540006 (2025) The phenomenon of gentrification of an urban area is characterized by the displacement of lower-income residents due to rising living costs and an influx of wealthier individuals. This study presents an agent-based model that simulates urban gentrification through the relocation of three income groups (low, middle, and high) driven by living costs. The model incorporates economic and sociological theories to generate realistic neighborhood transition patterns. We introduce a temporal network-based measure to track the outflow of low-income residents and the inflow of middle- and high-income residents over time. Our experiments reveal that high-income residents trigger gentrification and that our network-based measure consistently detects gentrification patterns earlier than traditional count-based methods, potentially serving as an early detection tool in real-world scenarios. Moreover, the analysis highlights how city density promotes gentrification. This framework offers valuable insights for understanding gentrification dynamics and informing urban planning and policy decisions. Read the full article at: www.worldscientific.com

|

Scooped by

Complexity Digest

September 24, 2025 4:47 PM

|

Qianyang Chen, Nihat Ay, Mikhail Prokopenko Self-organizing systems consume energy to generate internal order. The concept of thermodynamic efficiency, drawing from statistical physics and information theory, has previously been proposed to characterize a change in control parameter by relating the resulting predictability gain to the required amount of work. However, previous studies have taken a system-centric perspective and considered only single control parameters. Here, we generalize thermodynamic efficiency to multi-parameter settings and derive two observer-centric formulations. The first, an inferential form, relates efficiency to fluctuations of macroscopic observables, interpreting thermodynamic efficiency in terms of how well the system parameters can be inferred from observable macroscopic behaviour. The second, an information-geometric form, expresses efficiency in terms of the Fisher information matrix, interpreting it with respect to how difficult it is to navigate the statistical manifold defined by the control protocol. This observer-centric perspective is contrasted with the existing system-centric view, where efficiency is considered an intrinsic property of the system. Read the full article at: arxiv.org

|

Scooped by

Complexity Digest

September 22, 2025 4:37 PM

|

Manuel de J. Luevano-Robledo, Alejandro Puga-Candelas Physica D: Nonlinear Phenomena Volume 482, November 2025, 134844 In this work, several random Boolean networks (RBNs) are generated and analyzed based on two fundamental features: their time evolution diagrams and their transition diagrams. For this purpose, we estimate randomness using three measures, among which Algorithmic Complexity stands out because it can (a) reveal transitions towards the chaotic regime more distinctly, and (b) disclose the algorithmic contribution of certain states to the transition diagrams, including their relationship with the order they occupy in the temporal evolution of the respective RBN. Results from both types of analysis illustrate the potential of Algorithmic Complexity and Perturbation Analysis for Boolean networks, paving the way for possible applications in modeling biological regulatory networks. Read the full article at: www.sciencedirect.com
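
For orientation, a random Boolean network and its time evolution can be generated in a few lines. The sketch below builds a classic N-K network with synchronous updates and finds the transient length and attractor period from one initial state. It does not compute Algorithmic Complexity or perform the paper's perturbation analysis. The network size, connectivity, and seed are arbitrary assumptions.

```python
import random

def make_rbn(n, k, seed):
    """Random wiring and random truth tables for n nodes with k inputs each."""
    rng = random.Random(seed)
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    tables = [[rng.randrange(2) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables

def step(state, inputs, tables):
    """Synchronous update: each node looks up its inputs in its truth table."""
    nxt = []
    for node_inputs, table in zip(inputs, tables):
        idx = 0
        for j in node_inputs:
            idx = (idx << 1) | state[j]
        nxt.append(table[idx])
    return tuple(nxt)

def find_attractor(state, inputs, tables):
    """Return (transient length, attractor period) starting from `state`."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = step(state, inputs, tables)
        t += 1
    return seen[state], t - seen[state]

n_nodes = 8
inputs, tables = make_rbn(n_nodes, k=2, seed=1)
start = tuple([0] * n_nodes)
transient, period = find_attractor(start, inputs, tables)
```

Because the state space is finite and the update is deterministic, every trajectory must enter a cycle within `2 ** n_nodes` steps.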

|
