At Artisan, we’ve processed billions of analytics events. The infrastructure pipeline that digests this steady stream of data is complex, yet nimble and performant. Collecting and extracting the data in these events so that our customers can put it to use immediately is paramount. This post focuses on one small facet of that subsystem: our use of Redis pipelining and scripting.

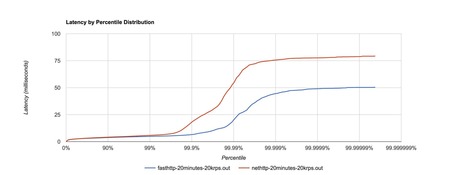

Redis is a critical data store used across our stack. Its read and write latencies are impressive, and we take full advantage of them. Every millisecond we’re able to shave off while sifting data adds value. To that end, we use pipelining wherever it applies.
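To make the idea concrete, here is a minimal sketch of what pipelining means at the protocol level. It is an illustration, not Artisan's production code: the key names (`events:count`, `events:last_seen`) and the helper names (`encode_command`, `build_pipeline`, `send_pipeline`) are hypothetical. The sketch encodes several commands in the Redis serialization protocol (RESP) and writes them to the socket in one go, so the client pays for one network round trip instead of one per command.

```python
import socket


def encode_command(*args) -> bytes:
    """Encode one Redis command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def build_pipeline(commands) -> bytes:
    """Concatenate several encoded commands into a single payload."""
    return b"".join(encode_command(*cmd) for cmd in commands)


def send_pipeline(host, port, commands, timeout=1.0) -> bytes:
    """Send all commands in one write and return the first chunk of replies.

    Simplification: a real client keeps reading and parsing RESP until it
    has received one reply per command. This sketch does a single recv().
    """
    payload = build_pipeline(commands)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)  # one write for the whole batch
        return sock.recv(65536)


# Hypothetical batch for a single analytics event.
batch = [
    ("INCR", "events:count"),
    ("SET", "events:last_seen", 1700000000),
]
payload = build_pipeline(batch)
```

In practice a client library handles this for you. The point of the sketch is only that the commands in `batch` leave the client as one contiguous payload rather than as separate request and response exchanges.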
