This tutorial describes the various stages in a bug (aka defect) life cycle and its importance.

Features of SDLC

Features of STLC

For example: Name of the test: __; Responsibilities: __; Date of the test: __; Outcome of the test: __; etc. The last step in an agile test plan covers the QA team’s functions and tasks. In this section, the functions and tasks of developers and testers are listed. As far as agile testing is concerned, everyone must communicate and work together to make sure quality is preserved throughout the software development process.

From

medium

DTAP encourages the creation of queues, whereas Agile software development encourages removal of queues.

Mickael Ruau's insight:

Imagine a team that can reliably deploy a single feature to a live environment without disrupting it. They develop new features on a development-environment. The team integrates work through version-control (e.g. Git). Features are developed on a ‘develop’-branch in their version-control system. When a feature is done, it is committed to a ‘master’-branch in their version-control system. A build server picks up the commit, builds the entire codebase from scratch and runs all the automated tests it can find. When there are no issues, the build is packaged for deployment. A deployment server picks up the package, connects to the webserver/webfarm, creates a snapshot for rapid rollback and runs the deployment. The webfarm is updated one server at a time. If a problem occurs, the entire deployment is aborted and rolled back. The deployment takes place in such a manner that active users don’t experience disruptions. After a deployment, a number of automated smoke tests are run to verify that critical components are still functioning and performing. Various telemetry sensors monitor the application throughout the deployment to notify the team as soon as something breaks down.
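The rolling update and rollback described above can be sketched in a few lines. This is only an illustrative sketch: the `Server` class, the `rolling_deploy` function, and the `smoke_test` callback are hypothetical names introduced here, and a version string stands in for a real snapshot and a real deployment.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Server:
    """Hypothetical stand-in for one web server in the farm."""
    name: str
    version: str
    snapshot: str = ""


def rolling_deploy(
    farm: List[Server],
    new_version: str,
    smoke_test: Callable[[Server], bool],
) -> bool:
    """Update the farm one server at a time; roll back all touched servers on failure."""
    updated: List[Server] = []
    for server in farm:
        server.snapshot = server.version  # snapshot for rapid rollback
        server.version = new_version      # deploy to this server only
        updated.append(server)
        if not smoke_test(server):
            # Abort: restore every server touched so far, newest first.
            for touched in reversed(updated):
                touched.version = touched.snapshot
            return False
    return True
```

Calling `rolling_deploy(farm, "1.1", smoke_test)` with a smoke test that fails on the second server leaves every server on its previous version, which mirrors the "aborted and rolled back" behaviour in the text.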

It became very apparent the model wasn’t working; we were doing it wrong. We were not the first team to recognize the problem. There were services before us, like Bing, that saw this. And we started observing the best practices of some of the companies born in the cloud.

In other words, we pushed testing left, we pushed testing right and got rid of most of the testing in the middle. This is a departure from the past, where most of the testing happened in the middle: integration-style testing in the lab. The rest of the document describes #1 in a little bit more detail.

Mickael Ruau's insight:

The Org Change for Quality Ownership

We did ‘combined engineering’ – a term used at Microsoft to indicate merging of responsibilities for dev and test in a single engineering role. It is not just an organization change where you bring the Dev and Test teams together. It is an actual discipline merge, with a single Engineer role that has the qualifications and responsibilities of the SDE and SDET disciplines of the past. Everyone has a new role, everyone needs to learn new skills. This is a very important point. When we talk about combined engineering, a common question we get is how we trained the former testers. We had to train both ways. A former developer had to learn how to be a good tester and a former tester had to pick up good design skills. Managers had to learn how to manage end to end feature delivery. In this model, there is no handoff to another person or team for testing. Each engineer owns E2E quality of the feature they build – from unit testing, to integration testing, to performance testing, to deployment, to monitoring live site and so on. Partnership with other engineers is still valued, even more so. There is now greater emphasis on peer reviews, design reviews, code reviews, test reviews etc. But the accountability for delivering a high quality feature is not diluted across multiple disciplines. This was a big cultural shift across the company. This change happened first in one org, but then over a few years, every team across Microsoft moved to this model. There are some variations to this model but at this point there are no separate dev and test teams at Microsoft. They are just engineering teams with the combined engineer roles.

Your boss says it's time to become a continuous-delivery shop. Here's how testing pros can lead the charge.

Alan, Ken Johnston, and Bj Rollison recently published How We Test Software at Microsoft (448 pages, ISBN: 9780735624252) in Microsoft Press's Best Practices series for developers. The authors have also created a website devoted to the book: HWTSAM (Information and discussion on the MS Press release “How We Test Software at Microsoft”), where you can read reviews, review the book’s table of contents, and see pictures of the book (in the wild indeed!). And Alan’s guest-post contains a lengthy excerpt from the book. Enjoy!

This article was written for and published in the CFTL book "Les tests en agile". The layout quality is therefore noticeably better in the book. Nevertheless, the content remains the same.

Mickael Ruau's insight:

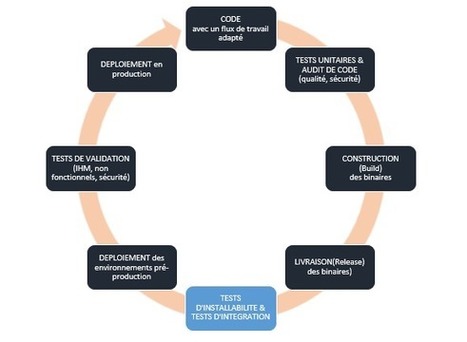

Here is a diagram that helps convey the difference in scale between production releases

Mariam Shaikh and Melissa Powel talk about sketching and paper prototyping. Have you ever struggled with how to get from an idea to a high fidelit

The Economic Value of DFT

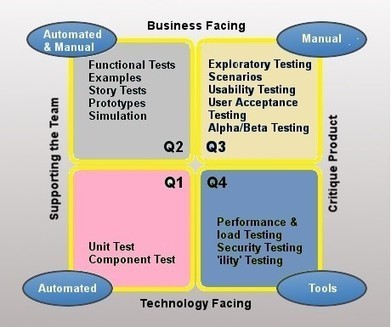

Agile testing covers two specific business perspectives: on the one hand, it offers the ability to critique the product, thereby minimizing the impact of defects being delivered to the user. On the other, it supports iterative development by providing quick feedback within a continuous integration process. Neither of these factors can come into play if the system does not allow for simple system/component/unit-level testing. This means that agile programs, which sustain testability through every design decision, will enable the enterprise to achieve a shorter runway for business and architectural epics. DFT helps reduce the impact of large system scope, and affords agile teams the luxury of working with something that is more manageable. That is why the role of a System Architect is so important in agile at scale, but it also reflects a different motivation: instead of defining a Big Design Up-front (BDUF), the agile architect helps to establish and sustain an effective product development flow by assuring that the assets that are developed are of high quality and needn’t be revisited. This reduces the cost of delay in development because in a system that is designed for testability, all jobs require less time.
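As a small illustration of a design decision that sustains unit-level testability, the sketch below passes the clock in as a parameter instead of reading it inside the function. It is not taken from the SAFe material; `utc_now` and `is_license_expired` are hypothetical names chosen for this example.

```python
from datetime import datetime, timezone
from typing import Callable


def utc_now() -> datetime:
    """Default clock used in production code."""
    return datetime.now(timezone.utc)


def is_license_expired(
    expiry: datetime,
    now: Callable[[], datetime] = utc_now,
) -> bool:
    """Return True once the current time is at or past the expiry."""
    return now() >= expiry
```

Because the clock is injected, a unit test can pass `now=lambda: some_fixed_datetime` and check both outcomes without waiting for real time to pass or patching global state.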

© Scaled Agile, Inc. Read the FAQs on how to use SAFe content and trademarks here:

Mickael Ruau's insight:

System Architect Role and DFT

With respect to designing for testability, the system architect can play a primary role:

Table 1. Aspects of the system architect’s role in fostering system testability.

Microsoft CEO Satya Nadella is preaching a more nimble approach to building software as part of the company's transformation.

Mickael Ruau's insight:

Following a July 10 memo in which he promised to “develop leaner business processes,” Mr. Nadella told Bloomberg Thursday that it makes more sense to have developers test and fix bugs instead of a separate team of testers to build cloud software. Such an approach, a departure from the company’s traditional practice of dividing engineering teams comprised of program managers, developers and testers, would make Microsoft more efficient, enabling it to cut costs while building software faster, experts say.

From

xp123

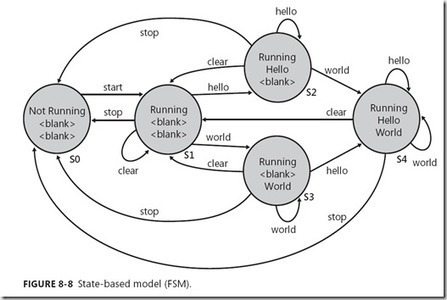

Oracles tell us if a test is returning the right answer. Some tests need simple oracles, but problems with many possible answers need combinatorial oracles.
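The contrast can be illustrated with a small sketch. It does not reproduce the source article's definition of a combinatorial oracle; it only shows the difference between comparing against one expected value and accepting any answer that satisfies the problem's constraints. The function names and the dependency-ordering problem are assumptions made for this example, and every dependency is assumed to appear as a key of `depends_on`.

```python
from typing import Dict, List, Set


def simple_oracle(actual: int, expected: int) -> bool:
    """One right answer: compare against the expected value."""
    return actual == expected


def topo_order_oracle(order: List[str], depends_on: Dict[str, Set[str]]) -> bool:
    """Many right answers: accept any order where dependencies come first."""
    if sorted(order) != sorted(depends_on):
        return False  # every item must appear exactly once
    position = {item: i for i, item in enumerate(order)}
    return all(
        position[dep] < position[item]
        for item, deps in depends_on.items()
        for dep in deps
    )
```

With `deps = {"build": set(), "test": {"build"}, "package": {"build"}, "deploy": {"test", "package"}}`, both `["build", "test", "package", "deploy"]` and `["build", "package", "test", "deploy"]` pass the second oracle, whereas an equality check against a single expected list would wrongly reject one of them.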

I’ve been meaning to post this for ages. So while I’m polishing the rough edges on my Part 2 of 2 post, I thought I’d take this opportunity to finally make good on that promise. Here it is: the Quality Tree Software, Inc. Test Heuristics Cheat Sheet, formerly only available by taking one of our testing classes.

Scrum methodology comes as a solution for executing such a complicated task. It helps the development team to focus on all aspects of the product like quality, performance, usability and so on. In this tutorial, you will learn-

Mickael Ruau's insight:

Testing Activities in Scrum

Testers do the following activities during the various stages of Scrum:

Sprint Planning

Sprint

Sprint Retrospective

An introductory guide to the SCRUM methodology and the basics of application analysis - obrassard/scrum-sensei

Mickael Ruau's insight:

Table of contents

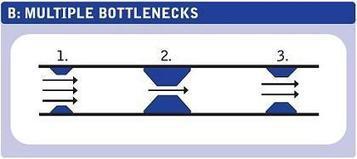

When You're Out to Fix Bottlenecks, Be Sure You're Able to Distinguish Them From System Failures and Slow Spots

Alice is dreaming of tests to add to her application when she spots the White Rabbit, who is anxious about quality. Chasing after him, she finds herself thrown into a world that strangely resembles her code, and starts making numerous unit tests appear. Yet the White Rabbit is still dissatisfied; the tests rebel, become uncontrollable and no longer want to check what she wants. How will Alice manage to regain control of the tests and make them work properly?

Through Alice's adventures, I will present the common testing pitfalls that often discourage beginners, as well as good practices and tools for obtaining functional and effective tests.

As traditional knowledge sharing is no longer an effective way to deliver great software, the presenter has modified the mob programming concept to mob testing to improve the way teams communicate.

This innovative approach to software testing allows the whole software development team to share every piece of information early on. Mob testing tightens loopholes in the traditional approach and tackles the painful headaches of environment setup and config issues faced by a new arrival to the team.

Think of mob testing as an evaluation process to build trust and understanding.

This article was written for and first published in the April 2019 issue of Programmez! magazine

Know when, how, and why you should paper prototype. Tips, templates, and resources included.

Mickael Ruau's insight:

Testing & Presenting Paper Prototypes

When it comes time to show your paper prototypes to other people, whether stakeholders or testers, things get tricky. Because so much is dependent on the user’s imagination, you need to set the right context.

Find out what kinds of automated tests you should implement for your application and learn by examples what these tests could look like.

Testing becomes part of the development process. It is not something you do at the end with a separate team. Both Ken Schwaber and I were consultants on that project. The next problem is Windows. At Agile 2013 last summer, Microsoft reported on a companywide initiative to get agile. 85% of every development dollar was spent on fixing bugs in the nonagile groups of over 20,000 developers. To fix that requires a major reorganization at Microsoft.

In this Webinar, Michael Bolton takes a hard look at the testing mission and how we go about it. As alternatives to test cases, he’ll offer ways to think about…