
|

Scooped by

Philippe J DEWOST

December 2, 2019 3:18 AM

|

How does Fireflies.ai work?

Users can connect their Google or Outlook calendars with Fireflies and have our AI system capture meetings in real time across more than a dozen web-conferencing platforms, including Zoom, Google Meet, Skype, GoToMeeting, and Webex. These meetings are then indexed, transcribed, and made searchable inside the Fireflies dashboard. You can comment, annotate key moments, and automatically extract relevant information on topics such as next steps, questions, and red flags.

Instead of spending time frantically taking notes in meetings, Fireflies users take comfort knowing that shortly after a meeting they are provided with a transcript of the conversation and an easy way to collaborate on the project going forward. Fireflies can also sync all this vital information back into the places where you already work thanks to robust integrations with Slack, Salesforce, Hubspot, and other platforms. Fireflies.ai is the bridge that helps data flow seamlessly from your communication systems to your system of record.

This approach is possible today because of major technological changes over the last 5 years in the field of machine learning. Fireflies leverages recent enhancements in automatic speech recognition (ASR), natural language processing (NLP), and neural nets to create a seamless way for users to record, annotate, search, and share important moments from their meetings.

Who is Fireflies for?

The beauty of Fireflies is that it’s been adopted by people in different roles across organizations big and small:

- Sales managers use Fireflies to review their reps’ calls at lightning speed and provide on-the-spot coaching.

- Marketers create key customer soundbites from calls to use in their campaigns.

- Recruiters no longer worry about taking hasty notes and instead spend more time paying attention to candidates during interviews.

- Engineers refer back to specific parts of calls using our smart search capabilities to make everyone aware of the decisions that were finalized.

- Product managers and executives rely on Fireflies to document knowledge and important initiatives that are discussed during all-hands and product planning meetings.

How to get access

Fireflies has a free tier for individuals and teams to easily get started. For more advanced capabilities like augmented call search, more storage, and admin controls, we offer different tiers for growing teams and enterprises. You can learn more about our pricing and tiers by going to fireflies.ai/pricing.

|

Scooped by

Philippe J DEWOST

November 14, 2019 6:02 AM

|

“Mirage” is the polite word I use to characterize the idea that small individual transportation vehicles could be the solution to urban congestion or pollution. Micromobility is not an innovation… Micromobility is socially selective, environmentally unfriendly, and it is not even supported by a sustainable model. But it is still well-hyped and it continues to draw large investments. Here is why.

“Mirage” is the polite word I use to characterize the idea that small individual transportation vehicles could be the solution to urban congestion or pollution.

Micromobility is not an innovation. It is a bad remedy to a failure of the public apparatus — at the state or the municipal level — unable to develop adequate infrastructure despite opulent fiscal bases.

|

Scooped by

Philippe J DEWOST

November 8, 2019 12:41 PM

|

Something strange is happening with text messages in the US right now. Overnight, a multitude of people received text messages that appear to have originally been sent on or around Valentine’s Day 2019. These people never received the text messages in the first place; the people who sent the messages had no idea that they had never been received, and they did nothing to attempt to resend them overnight. Delayed messages were sent from and received by both iPhones and Android phones, and the messages seem to have been sent and received across all major carriers in the US. Many of the complaints involve T-Mobile or Sprint, although AT&T and Verizon have been mentioned as well. People using regional US carriers, carriers in Canada, and even Google Voice also seem to have experienced delays. At fault seems to be a system that multiple cell carriers use for messaging. A Sprint spokesperson said a “maintenance update” last night caused the error. “The issue was resolved not long after it occurred,” the spokesperson said. “We apologize for any confusion this may have caused.” T-Mobile blamed the issue on a “third party vendor.” It didn’t clarify what company that was or what service they provided. “We’re aware of this and it is resolved,” a T-Mobile spokesperson said. The statements speak to why the messages were sent last night, but it’s still unknown why the messages were all from Valentine’s Day and weren’t sent in the first place. The Verge has asked Sprint and T-Mobile to provide more information about what happened. Dozens and dozens of people have posted about receiving messages overnight. Most expressed confusion or spoke to the awkwardness of the situation, having been told by friends that they sent a mysterious early-morning text message. A few spoke to much more distressing repercussions of this error: one person said they received a message from an ex-boyfriend who had died; another received messages from a best friend who is now dead. 
“It was a punch in the gut. Honestly I thought I was dreaming and for a second I thought she was still here,” said one person, who goes by KuribHoe on Twitter, who received the message from their best friend who had died. “The last few months haven’t been easy and just when I thought I was getting some type of closure this just ripped open a new hole.” Barbara Coll, who lives in California, said she received an old message from her sister saying that their mom was upbeat and doing well. She knew the message must have been sent before their mother died in June, but she said it was still shocking to receive. “I haven’t stopped thinking about that message since I got it,” Coll said. “I’m out looking at the ocean right now because I needed a break.” Coll said her sister also received a delayed message that she had sent about planning to visit to see their mother. Another person said a text message that she sent in February was received at 5AM by someone who is now her ex-boyfriend. The result was “a lot of confusion,” said Jamie. But she said that “it was actually kinda nice that it opened up a short conversation.” The Verge has reached out to Verizon, AT&T, and Google for comment.

|

Scooped by

Philippe J DEWOST

October 7, 2019 1:58 AM

|

We all know people around us who talk like veritable “machine guns” and others whose speech is, on the contrary, rather drawling. If we are even slightly multilingual, we will also have noticed that these differences in speech rate occur not only from one speaker to another but also from one language to another: there is no need to read Murakami or Cervantes in the original to know that their two languages, Japanese and Spanish, are spoken rather fast… A team of linguists from the Dynamique du langage laboratory (Université Lumière Lyon II) asked an interesting question: does the fact that some languages are spoken at a faster rate than others make them more efficient at transmitting information? Their conclusions, published last month in the journal “Science Advances”, are quite astonishing.

Syllabic density

For their experiment, the Lyon linguists asked 170 speakers of 17 different languages to read series of texts aloud. They then applied to the recordings the analytical methods and tools inherited from the information theory of the brilliant Claude Shannon. First observation: the intuitive impression that some languages sound faster than others is fully justified. Speech rate, measured in syllables spoken per second, varies almost twofold, ranging from 8.03 syllables per second for Japanese to 5.25 for Vietnamese and even 4.70 for Thai, which shows that these variations have nothing to do with geography, since Asian languages sit at both ends of the spectrum. But this tells us nothing about how efficient languages are at transmitting information.

To find out, the study's authors measured another parameter that is a little harder to grasp: syllabic information density. The study's lead author, linguist François Pellegrino, explains what he means by the term: “If a syllable can easily be deduced from those that precede it, it carries little information in Shannon's sense; if, on the contrary, it is hard to predict, it carries a lot.” Let us take an example from everyday French to make this definition more concrete. If you read the word “parce”, the odds are high that the next syllable will be “que”: the mere fact that it can be predicted with near certainty means that its information density is close to zero.

Two strategies

Not all languages encode the same average amount of information, measured in bits, in each of their syllables. “The average information density of the different languages varies over a range from 5.03 bits per syllable for Japanese to 8.02 bits for Vietnamese,” says François Pellegrino. That Japanese has an average information density of about 5 bits per syllable means that predicting the next syllable from those that preceded it amounts to making the right choice among 32 (2^5) possibilities; in Vietnamese, it amounts to making the right choice among 256 (2^8) possibilities; the prediction is therefore 8 times easier in Japanese than in Vietnamese. In other words, a Japanese syllable resolves a choice among 8 times fewer possibilities than a Vietnamese one, a gap of about 3 bits per syllable. The study carried out by the Dynamique du langage laboratory on the 17 selected languages showed that these two parameters, speech rate (measured in syllables per second) and information density (measured in bits per syllable), vary in opposite directions. A higher speech rate is systematically accompanied by a lower information density, and vice versa.

The result is a truly remarkable phenomenon. If we now consider the information rate of a language, defined as the product of the two parameters above, it turns out to be constant everywhere on the globe: it comes to about 39 bits per second. However dissimilar they may be, whether they sound fast or slow to our ears, all the languages spoken on Earth convey the same amount of information in a given span of time. “A good way of looking at it,” comments François Pellegrino, “is to say that, to be efficient at transmitting information, languages can choose between two opposite strategies: either they favor a high speech rate at the cost of a low information density, or they do the reverse.” In this respect, French is an “average” language, lying roughly midway between the two extremes both for speech rate (6.85 syllables per second) and for information density (6.68 bits per syllable). This allows it, too, to reach an information rate close to 39 bits per second.

A Darwinian mechanism

That this value is universal most likely means that it owes nothing to chance and is tightly constrained by our cognitive capacities and the way our brain processes language. A language that fell far below this threshold of 39 bits per second would not allow its speakers to cope with the complexity of the world and would quickly be eliminated. If it exceeded the threshold, it would overload their cognitive capacities (we cannot permanently sustain the production or processing of too high an information rate) and would meet the same fate. This threshold of 39 bits per second therefore corresponds to a niche that is both biological and cultural, and that defines the zone of viability of human languages.

This view of languages is all the more fascinating because languages, far from being fixed, evolve constantly over time. As an earlier study from the same Lyon laboratory clearly shows (see below), new sounds can appear, for example through the addition of nasal vowels (“an”, “in”, “on”, etc.), which doubles the total number of vowels available to a language. More sounds, and therefore more syllables, increase the information density of a language. Enough to push it out of its 39-bits-per-second niche? No, answers François Pellegrino. “Our hypothesis is that, each time a change in the structure of a language has altered its syllabic information density, that change has also led its speakers to adjust their speech rate in the opposite direction, in order to preserve an optimal information rate.” A Darwinian mechanism that closely resembles the way living species, subject to the laws of evolution, adapt in order not to die.

The riddle of the “f” and “v” sounds

A team from the Dynamique du langage laboratory in Lyon, gathered around Dan Dediu, has validated a brilliant intuition that the great American linguist Charles Hockett had in the 1980s. Hockett had noticed that the so-called labiodental consonants, “f” and “v”, existed only among peoples with access to soft foods. After a rigorous investigation lasting several years and combining statistical analysis, a biomechanical model, and phylogenetic data, Dan Dediu and the coauthors of the study published last spring in the journal “Science” confirmed this point. Labiodental consonants appeared in the large Indo-European language family (more than 1,000 languages!) later than other sounds, between 6,000 and 3,500 years ago.

Their appearance was a consequence of the invention of agriculture, which replaced the very abrasive foods eaten by hunter-gatherers, such as roots, with softer foodstuffs such as bread or cheese. This dietary change altered the position of the incisors. Whereas in children and adults of farming populations the upper incisors sit slightly in front of the lower ones, in hunter-gatherers tooth wear makes this gap disappear. The biomechanical model used in the study showed that, with the upper incisors aligned with the lower ones, the muscular effort needed to pronounce the sounds “f” and “v” was 29% higher than normal. Being more costly in energy terms, these sounds therefore did not appear in the languages of hunter-gatherers. “Our study shows how a cultural change led to a biological change that led to a linguistic change,” sums up Dan Dediu.

Variable speech rates in Europe

In syllables per second:

- Spanish: 7.71
- Italian: 7.16
- French: 6.85
- English: 6.34
- German: 6.09
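The relation described in the article (information rate = speech rate × information density, and n bits per syllable being equivalent to a choice among 2^n possibilities) can be checked with a few lines of arithmetic. This is only a sketch using the language averages quoted in the text; the function names are illustrative and do not come from the study.

```python
# (syllables per second, bits per syllable), as quoted in the article
LANGUAGES = {
    "Japanese": (8.03, 5.03),
    "French": (6.85, 6.68),
    "Vietnamese": (5.25, 8.02),
}


def information_rate(syllables_per_second, bits_per_syllable):
    """Information rate in bits per second: speech rate times density."""
    return syllables_per_second * bits_per_syllable


def equivalent_choices(bits):
    """Number of equally likely outcomes that each carry `bits` bits."""
    return 2 ** bits


rates = {name: information_rate(*values) for name, values in LANGUAGES.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.1f} bits/s")

# Rounded densities of 5 and 8 bits correspond to 32 and 256 possibilities,
# hence the factor of 8 mentioned in the article.
print(equivalent_choices(5), equivalent_choices(8))
```

Multiplying the quoted averages gives values between roughly 40 and 46 bits per second rather than exactly 39. That suggests the 39 bits per second figure is a cross-language average rather than an exact constant; this is an inference from the quoted numbers, not a statement made in the article.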

|

Scooped by

Philippe J DEWOST

September 25, 2019 12:46 AM

|

Huawei announced its own 4K television, the Huawei Vision, during the Mate 30 Pro event today. Like the Honor Vision and Vision Pro TVs that were announced back in August, Huawei’s self-branded TV runs the company’s brand-new Harmony OS software as its foundation. Huawei will offer 65-inch and 75-inch models to start, with 55-inch and 85-inch models coming later. The Huawei TV features quantum dot color, thin metal bezels, and a pop-up camera for video conferencing that lowers into the television when not in use. On TVs, Harmony OS is able to serve as a hub for smart home devices that support the HiLink platform. Huawei is also touting the TV’s AI capabilities, likening it to a “smart speaker with a big screen.” The TV supports voice commands and includes facial recognition and tracking capabilities. Apparently, there’s some AI mode that helps protect the eyes of young viewers — presumably by filtering blue light. The Vision also allows “one-hop projection” from a Huawei smartphone. The TV’s remote has a touchpad and charges over USB-C.

|

Scooped by

Philippe J DEWOST

September 11, 2019 2:32 AM

|

McDonald’s is increasingly looking at tech acquisitions as a way to reinvent the fast-food experience. Today, it’s announcing that it’s buying Apprente, a startup building conversational agents that can automate voice-based ordering in multiple languages. If that sounds like a good fit for the fast-food drive-thru, that’s exactly what McDonald’s leadership has in mind. In fact, the company has already been testing Apprente’s technology in select locations, creating voice-activated drive-thrus (along with robot fryers) that it said will offer “faster, simpler and more accurate order taking.” McDonald’s said the technology also could be used in mobile and kiosk ordering. Presumably, besides lowering wait times, this could allow restaurants to operate with smaller staffs. Earlier this year, McDonald’s acquired online personalization startup Dynamic Yield for more than $300 million, with the goal of creating a drive-thru experience that’s customized based on things like weather and restaurant traffic. It also invested in mobile app company Plexure. Now the company is looking to double down on its tech investments by creating a new Silicon Valley-based group called McD Tech Labs, with the Apprente team becoming the group’s founding members, and Apprente co-founder Itamar Arel becoming vice president of McD Tech Labs. McDonald’s said it will expand the team by hiring more engineers, data scientists and other tech experts.

|

Scooped by

Philippe J DEWOST

September 5, 2019 2:42 AM

|

We learn from our personal interaction with the world, and our memories of those experiences help guide our behaviors. Experience and memory are inexorably linked, or at least they seemed to be before a recent report on the formation of completely artificial memories. Using laboratory animals, investigators reverse engineered a specific natural memory by mapping the brain circuits underlying its formation. They then “trained” another animal by stimulating brain cells in the pattern of the natural memory. Doing so created an artificial memory that was retained and recalled in a manner indistinguishable from a natural one. Memories are essential to the sense of identity that emerges from the narrative of personal experience. This study is remarkable because it demonstrates that by manipulating specific circuits in the brain, memories can be separated from that narrative and formed in the complete absence of real experience. The work shows that brain circuits that normally respond to specific experiences can be artificially stimulated and linked together in an artificial memory. That memory can be elicited by the appropriate sensory cues in the real environment. The research provides some fundamental understanding of how memories are formed in the brain and is part of a burgeoning science of memory manipulation that includes the transfer, prosthetic enhancement and erasure of memory. These efforts could have a tremendous impact on a wide range of individuals, from those struggling with memory impairments to those enduring traumatic memories, and they also have broad social and ethical implications.

|

Scooped by

Philippe J DEWOST

September 2, 2019 3:30 AM

|

It is a surprising investment for Xavier Niel. The head of Free and co-owner of the newspaper “Le Monde” has acquired, through his school, a Napoleonic fort in the heart of a forest on the island of Oléron. Sud Ouest reports that the head of the mobile operator has purchased this 25,600 m² site dating from 1818, information confirmed to Capital by a source close to the matter. It is in fact his school, École 42, that acquired these exceptional premises. It is not yet known whether the aim is to create an annex to these digital schools or a holiday center for their students, who are trained to be developers. The three 42 establishments award professional certifications carrying the Grande École du Numérique label and are part of the non-profit association chaired by Xavier Niel. Sud Ouest adds that the Ministry of Defense had published a notice and issued a call for applications to choose among prospective buyers, who had until October 29 to submit their offers. The local newspaper mentions “80 applications, 15 of them solid”. The digital-school project of Xavier Niel's École 42 therefore appears to have won over the ministry. While the purchase price has not been disclosed, the building was valued at around 850,000 euros by the Direction régionale des finances publiques in Bordeaux. The fort stands on 3,200 square meters of land in the heart of a 645-hectare forest. After serving as a garrison and a prison, this remote site was used in the 20th century as a holiday camp for the children of military personnel and civilian army staff, before closing in 2012. Built from 1810 to 1818 opposite Fort Boyard to strengthen French defensive lines against the English, it may therefore soon welcome students of the head of Free, whether for holidays or for training.
It is an important project for the executive, who laments “a social elevator that does not work in France” and wants to “pass the baton” and “give a chance to young people who are disadvantaged or who do not know what they want to do, by steering them toward entrepreneurship”.

|

Scooped by

Philippe J DEWOST

July 19, 2019 9:08 AM

|

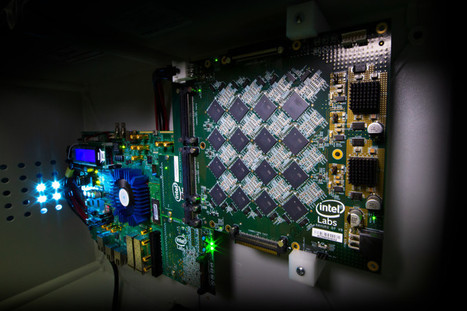

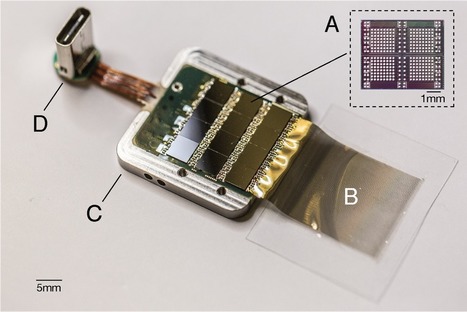

At DARPA's Electronics Resurgence Initiative 2019 in Michigan, Intel introduced a new neuromorphic computer capable of simulating 8 million neurons. Neuromorphic engineering, also known as neuromorphic computing, describes the use of systems containing electronic analog circuits to mimic neuro-biological architectures present in the nervous system. Scientists at MIT, Purdue, Stanford, IBM, HP, and elsewhere have pioneered pieces of full-stack systems, but arguably few have come closer than Intel when it comes to tackling one of the longstanding goals of neuromorphic research: a supercomputer a thousand times more powerful than any today. Case in point? Today during the Defense Advanced Research Projects Agency’s (DARPA) Electronics Resurgence Initiative 2019 summit in Detroit, Michigan, Intel unveiled a system codenamed “Pohoiki Beach,” a 64-chip computer capable of simulating 8 million neurons in total. Intel Labs managing director Rich Uhlig said Pohoiki Beach will be made available to 60 research partners to “advance the field” and scale up AI algorithms like sparse coding and path planning. “We are impressed with the early results demonstrated as we scale Loihi to create more powerful neuromorphic systems. Pohoiki Beach will now be available to more than 60 ecosystem partners, who will use this specialized system to solve complex, compute-intensive problems,” said Uhlig. Pohoiki Beach packs 64 128-core, 14-nanometer Loihi neuromorphic chips, which were first detailed in October 2017 at the 2018 Neuro Inspired Computational Elements (NICE) workshop in Oregon. They have a 60-square-millimeter die size and contain over 2 billion transistors, 130,000 artificial neurons, and 130 million synapses, in addition to three managing Lakemont cores for task orchestration. 
Uniquely, Loihi features a programmable microcode learning engine for on-chip training of asynchronous spiking neural networks (SNNs) — AI models that incorporate time into their operating model, such that components of the model don’t process input data simultaneously. This will be used for the implementation of adaptive self-modifying, event-driven, and fine-grained parallel computations with high efficiency.
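To make the idea of a model that "incorporates time" more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook building block of spiking neural networks. It is a generic illustration with made-up parameter values; it is not Loihi's programming interface and does not claim to reflect how Intel's chip implements its neurons.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    At each step the membrane potential decays by the factor `leak`, then the
    input for that step is added. When the potential reaches `threshold`, the
    neuron emits a spike and its potential returns to `reset`.
    """
    potential = reset
    spike_times = []
    for step, current in enumerate(input_current):
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(step)
            potential = reset
    return spike_times


# A weak constant input never reaches the threshold; a stronger one makes the
# neuron fire at regular intervals. Information is carried by spike timing.
weak = simulate_lif([0.05] * 50)
strong = simulate_lif([0.3] * 50)
print("weak input spikes:", weak)
print("strong input spikes:", strong)
```

The neuron only produces output when its accumulated input crosses the threshold, which is the event-driven behavior the excerpt alludes to: computation happens at spike times rather than on every input simultaneously.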

|

Scooped by

Philippe J DEWOST

June 24, 2019 12:21 AM

|

Slack is on an internal Microsoft list of prohibited technology (software, apps, online services and plug-ins that the company doesn’t want its employees using as part of their day-to-day work). But the document, obtained by GeekWire, asserts that the primary reason is security, not competition. And Slack is just one of many on the list. GeekWire obtained an internal Microsoft list of prohibited and discouraged technology (software and online services that the company doesn’t want its employees using as part of their day-to-day work). We first picked up on rumblings of the prohibition from Microsoft employees who were surprised that they couldn’t use Slack at work, before tracking down the list and verifying its authenticity. While the list references the competitive nature of these services in some situations, the primary criteria for landing in the “prohibited” category are related to IT security and safeguarding company secrets. Slack is in the “prohibited” category of the internal Microsoft list, along with tools such as the Grammarly grammar checker and Kaspersky security software. Services in the “discouraged” category include Amazon Web Services, Google Docs, PagerDuty and even the cloud version of GitHub, the popular software development hub and community acquired by Microsoft last year for $7.5 billion.

|

Scooped by

Philippe J DEWOST

June 4, 2019 11:51 AM

|

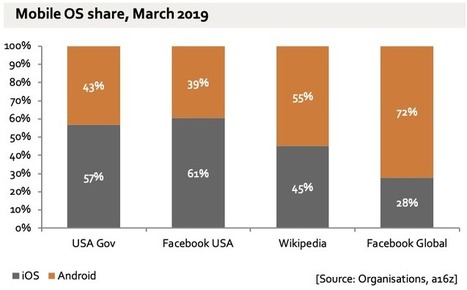

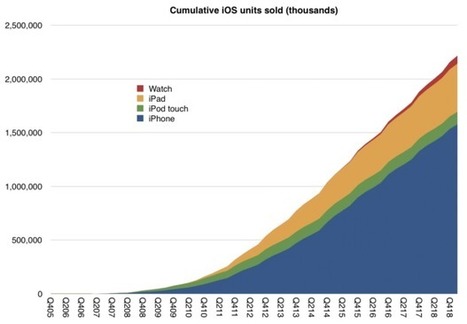

There are about 5.3bn people on earth aged over 15. Of these, around 5bn have a mobile phone and 4bn a smartphone. The platform wars ended a long time ago, and Apple and Google both won (outside China, at least). So it is time to stop making charts.

|

Scooped by

Philippe J DEWOST

May 21, 2019 11:57 PM

|

Researchers trained a neural network to map audio “voiceprints” from one language to another. The results aren’t perfect, but you can sort of hear how Google’s translator was able to retain the voice and tone of the original speaker. It can do this because it converts audio input directly to audio output without any intermediary steps. In contrast, traditional translational systems convert audio into text, translate the text, and then resynthesize the audio, losing the characteristics of the original voice along the way. The new system, dubbed the Translatotron, has three components, all of which look at the speaker’s audio spectrogram—a visual snapshot of the frequencies used when the sound is playing, often called a voiceprint. The first component uses a neural network trained to map the audio spectrogram in the input language to the audio spectrogram in the output language. The second converts the spectrogram into an audio wave that can be played. The third component can then layer the original speaker’s vocal characteristics back into the final audio output. Not only does this approach produce more nuanced translations by retaining important nonverbal cues, but in theory it should also minimize translation error, because it reduces the task to fewer steps. Translatotron is currently a proof of concept. During testing, the researchers trialed the system only with Spanish-to-English translation, which already took a lot of carefully curated training data. But audio outputs like the clip above demonstrate the potential for a commercial system later down the line. You can listen to more of them here.

|

Scooped by

Philippe J DEWOST

May 13, 2019 8:52 AM

|

In the film Minority Report, mutants predict future crimes, allowing police to swoop in before they can be committed. In China, stopping “precrime” with algorithms is a part of law enforcement, using…

|

|

Scooped by

Philippe J DEWOST

November 14, 2019 12:34 PM

|

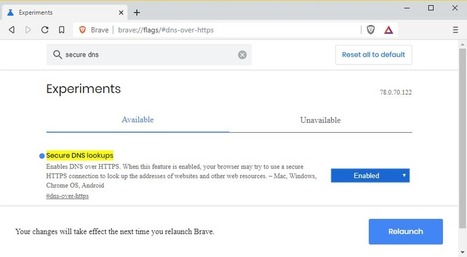

All six major browser vendors have plans to support DNS-over-HTTPS (or DoH), a protocol that encrypts DNS traffic and helps improve a user's privacy on the web. The DoH protocol has been one of the year's hot topics. When deployed inside a browser, it allows the browser to hide DNS requests and responses inside regular-looking HTTPS traffic. Doing this makes a user's DNS traffic invisible to third-party network observers, such as ISPs. But while users love DoH and have deemed it a privacy boon, ISPs, networking operators, and cyber-security vendors hate it. A UK ISP called Mozilla an "internet villain" for its plans to roll out DoH, and a Comcast-backed lobby group has been caught preparing a misleading document about DoH that they were planning to present to US lawmakers in the hopes of preventing DoH's broader rollout. However, this may be a little too late. ZDNet has spent the week reaching out to major web browser providers to gauge their future plans regarding DoH, and all vendors plan to ship it, in one form or another.
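To illustrate how a DNS request ends up inside ordinary-looking HTTPS traffic, the sketch below builds a DNS query in wire format and encodes it into a DoH GET URL in the style described by RFC 8484. It makes no network request. The resolver address `https://dns.example.net/dns-query` is a placeholder, and the helper names are illustrative rather than part of any browser's implementation.

```python
import base64
import struct


def build_dns_query(hostname, query_id=0, qtype=1):
    """Build a minimal DNS query message with one question."""
    # Header: ID, flags (0x0100 = recursion desired), then the question,
    # answer, authority, and additional record counts.
    header = struct.pack(">HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE (1 = A record) followed by QCLASS (1 = IN, the Internet class).
    return header + qname + struct.pack(">HH", qtype, 1)


def doh_get_url(resolver_url, hostname):
    """Encode the query as unpadded base64url in the `dns` query parameter."""
    message = build_dns_query(hostname)
    encoded = base64.urlsafe_b64encode(message).rstrip(b"=").decode("ascii")
    return f"{resolver_url}?dns={encoded}"


# Placeholder resolver; a real client would send this as an HTTPS GET with
# the header "Accept: application/dns-message".
url = doh_get_url("https://dns.example.net/dns-query", "www.example.com")
print(url)
```

To an on-path observer, the resulting request is just an encrypted HTTPS exchange with the resolver; the hostname being looked up is only visible inside the TLS-protected URL.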

|

Scooped by

Philippe J DEWOST

November 9, 2019 2:37 AM

|

Indoor farming startup Bowery announced today it has raised an additional $50 million in an extension of its Series B round. This comes nearly 11 months after it raised $90 million in a Series B round that we reported on at the time. In a written statement, Bowery said the add-on was the result “of significant momentum in the business.” Temasek led the extension and Henry Kravis, co-founder of Kohlberg Kravis Roberts & Co., also put money in the “B+ round.” The financing brings the New York-based company’s total raised to $172.5 million since its inception in 2015, according to Bowery. The startup, which aims to offer “sustainably grown produce,” also announced today its new indoor farm in the Baltimore-DC area. The new farm is 3.5 times larger than Bowery’s last facility, according to the company. Its network of farms “essentially communicate using Bowery’s software,” according to the company, and benefits from “the collective intelligence of 2+ years of data.”

|

Scooped by

Philippe J DEWOST

November 8, 2019 5:50 AM

|

The research lab OpenAI has released the full version of a text-generating AI system that experts warned could be used for malicious purposes. The institute originally announced the system, GPT-2, in February this year, but withheld the full version of the program out of fear it would be used to spread fake news, spam, and disinformation. Since then it’s released smaller, less complex versions of GPT-2 and studied their reception. Others also replicated the work. In a blog post this week, OpenAI now says it’s seen “no strong evidence of misuse” and has released the model in full. GPT-2 is part of a new breed of text-generation systems that have impressed experts with their ability to generate coherent text from minimal prompts. The system was trained on eight million text documents scraped from the web and responds to text snippets supplied by users. Feed it a fake headline, for example, and it will write a news story; give it the first line of a poem and it’ll supply a whole verse. It’s tricky to convey exactly how good GPT-2’s output is, but the model frequently produces eerily cogent writing that can often give the appearance of intelligence (though that’s not to say what GPT-2 is doing involves anything we’d recognize as cognition). Play around with the system long enough, though, and its limitations become clear. It particularly suffers with the challenge of long-term coherence; for example, using the names and attributes of characters consistently in a story, or sticking to a single subject in a news article. The best way to get a feel for GPT-2’s abilities is to try it out yourself. You can access a web version at TalkToTransformer.com and enter your own prompts. (A “transformer” is a component of machine learning architecture used to create GPT-2 and its fellows.)

|

Scooped by

Philippe J DEWOST

September 25, 2019 12:37 PM

|

Xiaomi’s Mi Mix series has always pushed the boundaries of phone screens and form factors, from the original model that kicked off the bezel wars to last year’s sliding, notchless Mi Mix 3. Now, just as we’re starting to see “waterfall” displays with extreme curved edges, Xiaomi is taking this to a wild new level with the Mi Mix Alpha. The “surround screen” on the Alpha wraps entirely around the device to the point where it meets the camera module on the other side. The effect is of a phone that’s almost completely made of screen, with status icons like network signal and battery charge level displayed on the side. Pressure-sensitive volume buttons are also shown on the side of the phone. Xiaomi is claiming more than 180 percent screen-to-body ratio, a stat that no longer makes any sense to cite at all. The Mix Alpha uses Samsung’s new 108-megapixel camera sensor, which was co-developed with Xiaomi. As with other recent high-resolution Samsung sensors, pixels are combined into 2x2 squares for better light sensitivity in low light, which in this case will produce 27-megapixel images. We’ll have to see how that works in practice, but the 1/1.33-inch sensor is unusually large for a phone and should give the Mix Alpha a lot of light-gathering capability. There’s also no need for a selfie camera — you just turn the phone around and use the rear portion of the display as a viewfinder for the 108-megapixel shooter.
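The 2x2 pixel combination described above can be illustrated with a minimal sketch. It assumes a plain grayscale grid and simple summation of each block; the real sensor's color filter layout and readout are not described in the article, so `bin_2x2` and `binned_megapixels` are hypothetical helper names used only for illustration.

```python
def bin_2x2(pixels):
    """Combine each 2x2 block of a grayscale grid into one value by summing.

    Assumption: summation on a grayscale grid; the actual sensor's
    combination method is not specified in the article.
    """
    rows, cols = len(pixels), len(pixels[0])
    if rows % 2 or cols % 2:
        raise ValueError("grid dimensions must be even")
    return [
        [
            pixels[r][c] + pixels[r][c + 1]
            + pixels[r + 1][c] + pixels[r + 1][c + 1]
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


def binned_megapixels(sensor_megapixels, block=2):
    """Output resolution when block x block pixels merge into one."""
    return sensor_megapixels / (block * block)


grid = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(grid))           # [[14, 22], [46, 54]]
print(binned_megapixels(108))  # 27.0
```

Each output value gathers the light of four input pixels, which is why a 108-megapixel sensor yields 27-megapixel images in this mode.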

|

Rescooped by

Philippe J DEWOST

from pixels and pictures

September 11, 2019 1:29 PM

|

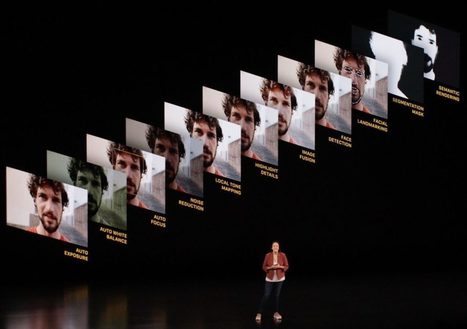

Capturing light is becoming a thing of the past: with computational photography, it is now eclipsed by processing pixels.

iPhone 11 and its “Deep Fusion” mode leave no doubt that photography is now software and that software is also eating cameras.

|

Scooped by

Philippe J DEWOST

September 9, 2019 2:34 AM

|

The MIT-Epstein debacle shows ‘the prostitution of intellectual activity’ and calls for a radical agenda, argues Evgeny Morozov. As Frederic Filloux points out in today's edition of The Monday Note, "It matters because the MediaLab scandal is the tip of the iceberg. American universities are plagued by conflicts of interest. It is prevalent at Stanford for instance. I personally don’t mind an experienced professor charging $3,000 an hour to talk to foreign corporate visitors or asking $15,000 to appear at a conference. These people are high-valued and they also often work for free when needed. What bothers me is when a board membership collides with the content of a class, when a research paper is redacted to avoid upsetting a powerful VC firm who provides both generous donations and advisory fees to the faculty, when a prominent professor regurgitates a paid study they have done for a foreign bank as a support of a class, or when another keeps hammering Google because they advise a direct competitor. This is unethical and offensive to students who regularly pay $60,000-$100,000 in tuition each year."

|

Scooped by

Philippe J DEWOST

September 4, 2019 6:09 AM

|

Operating independently for the first time since Chandrayaan-2 was launched on July 22, Vikram, the lander, underwent its first manoeuvre around the Moon.

Isro successfully completed the first de-orbiting manoeuvre at 8.50 am Tuesday, using the propulsion systems on Vikram for the first time. Until now, all operations had been carried out by systems on the orbiter, from which Vikram, carrying Pragyan (the rover) inside it, separated on Monday.

"The duration of the maneuver was 4 seconds. The orbit of Vikram is 104 km x 128 km, the Chandrayaan-2 orbiter continues to orbit Moon in the existing orbit and both the orbiter and lander are healthy," Isro said.

The next de-orbiting maneuver is scheduled on September 04 between 3.30 am and 4.30 am.

As reported by TOI, the landing module (Vikram and Pragyan) successfully separated from the orbiter at 1.15 pm Monday (September 2), pushing India's Chandrayaan-2 mission into the last and most crucial leg: Moon landing.

"The operation was great in the sense that we were able to separate the lander and rover from the orbiter—It is the first time in the history of Isro that we've separated two modules in space. This was very critical and we did it very meticulously," Isro chairman K Sivan told TOI soon after the separation.

|

Scooped by

Philippe J DEWOST

August 17, 2019 4:18 AM

|

A Twitter user’s claim to have tweeted from a kitchen appliance went viral but experts have cast doubt

|

Scooped by

Philippe J DEWOST

July 17, 2019 1:03 PM

|

Elon Musk’s Neuralink, the secretive company developing brain-machine interfaces, showed off some of the technology it has been developing to the public for the first time. The goal is to eventually begin implanting devices in paralyzed humans, allowing them to control phones or computers. The first big advance is flexible “threads,” which are less likely to damage the brain than the materials currently used in brain-machine interfaces. These threads also create the possibility of transferring a higher volume of data, according to a white paper credited to “Elon Musk & Neuralink.” The abstract notes that the system could include “as many as 3,072 electrodes per array distributed across 96 threads.” The threads are 4 to 6 μm in width, which makes them considerably thinner than a human hair. In addition to developing the threads, Neuralink’s other big advance is a machine that automatically embeds them. Musk gave a big presentation of Neuralink’s research Tuesday night, though he said that it wasn’t simply for hype. “The main reason for doing this presentation is recruiting,” Musk said, asking people to go apply to work there. Max Hodak, president of Neuralink, also came on stage and admitted that he wasn’t originally sure “this technology was a good idea,” but that Musk convinced him it would be possible. In the future, scientists from Neuralink hope to use a laser beam to get through the skull, rather than drilling holes, they said in interviews with The New York Times. Early experiments will be done with neuroscientists at Stanford University, according to that report. “We hope to have this in a human patient by the end of next year,” Musk said. During a Q&A at the end of the presentation, Musk revealed results that the rest of the team hadn’t realized he would: “A monkey has been able to control a computer with its brain.”
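The electrode figures quoted from the abstract imply a per-thread count, sketched below. This assumes the electrodes are spread evenly across the threads, which the excerpt does not state; `electrodes_per_thread` is a hypothetical helper name.

```python
def electrodes_per_thread(total_electrodes, threads):
    """Electrodes carried by each thread, assuming an even distribution."""
    if total_electrodes % threads:
        raise ValueError("electrodes do not divide evenly across threads")
    return total_electrodes // threads


# Figures quoted from the white paper abstract: up to 3,072 electrodes
# per array, distributed across 96 threads.
print(electrodes_per_thread(3072, 96))  # 32
```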

|

Scooped by

Philippe J DEWOST

June 6, 2019 12:31 AM

|

More than 100 cracker-size miniprobes successfully phoned home in March, one day after deploying from their carrier spacecraft in Earth orbit, mission team members announced today (June 3).

|

Rescooped by

Philippe J DEWOST

from pixels and pictures

June 4, 2019 3:39 AM

|

Just hours after Oppo revealed the world’s first under-display camera, Xiaomi has hit back with its own take on the new technology. Xiaomi president Lin Bin has posted a video to Weibo (later re-posted to Twitter) of the Xiaomi Mi 9 with a front-facing camera concealed entirely behind the phone’s screen. That means the new version of the handset has no need for any notches, hole-punches, or even pop-up selfie cameras alongside its OLED display. It’s not entirely clear how Xiaomi’s new technology works. The Phone Talks notes that Xiaomi recently filed for a patent that appears to cover similar functionality, which uses two alternately driven display portions to allow light to pass through to the camera sensor.

|

Scooped by

Philippe J DEWOST

May 19, 2019 11:49 PM

|

Over 1.6 billion iPhones have been sold. The total number of iOS products sold is over 2.2 billion, of which 1.5 billion are still in use.

There are about 1 billion iPhone users. Economically speaking, iPhone sales have reached one trillion dollars. Since the iPhone launched, Apple’s sales have totaled $1.918 trillion.

|


What if meeting notes were automatically generated and made available shortly after the conference call? What if action items were assigned too?

No more need for post-processing or in-meeting typing pollution: this is the promise of #AI (read "automated pattern detection and in-context recognition") made by Fireflies.

History reminds us how cautiously we should approach the longstanding fantasy of voice dictation (not speaking here of voice assistants): Dragon Dictate in the 1990s never lived up to the promise, nor did SpinVox in 2009 (it ended in tears). Now, with growing concerns about the privacy vs. convenience balance, the war is still not over.