|

Scooped by

Philippe J DEWOST

January 9, 2017 10:48 AM

|

When Mathieu Stern came to us with his 3D-printed photographic lens project, we were immediately excited by the idea. Not only is Mathieu one of those photography enthusiasts well known on the web (for example, he launched the original and poetic web series “The Weird Lenses Challenge”), but our professional proximity to the world of art, design, and images, which we work with daily at Fabulous, also naturally brought us together. Moreover, as an application design office specializing in additive manufacturing, we always enjoy taking on a new challenge. The question Mathieu posed is the same one our customers ask us every day: how can the competitive advantages of 3D printing be used to design and manufacture an innovative new product that is inexpensive and, above all, high-performing?

|

Scooped by

Philippe J DEWOST

December 21, 2016 3:58 AM

|

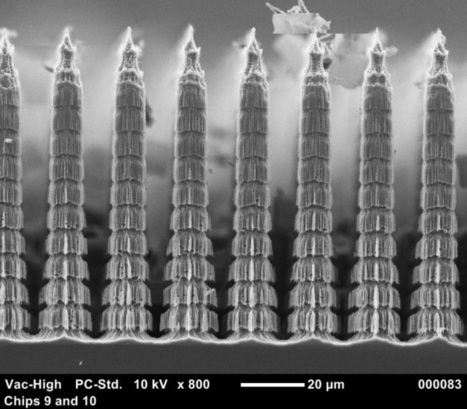

If you wanted to see in the dark, you could do worse than follow the example of moths, which have of course made something of a specialty of it. That, at least, is what NASA researchers did when designing a powerful new camera that will capture the faintest features in the galaxy. This biomimetic “bolometer detector array,” as the instrument is called, is part of the Stratospheric Observatory for Infrared Astronomy, or SOFIA. This ongoing mission uses a custom 747 to fly at high altitudes, where it can observe infrared radiation that would otherwise be blocked by the water vapor in our atmosphere. But even the infrared that does make it here is pretty faint, so you want to capture every photon you can get. The team, led by Christine Jhabvala and Ed Wollack at NASA’s Goddard Space Flight Center, originally looked into carbon nanotubes, which have many desirable properties. But they didn’t quite fit the bill. The eye of the common moth, however (or at least a design inspired by it), did what the latest nanomaterial didn’t. Insects’ eyes are of course very different from our own: they are essentially composed of hundreds or thousands of tiny lenses, each refracting an image onto its own dedicated photosensitive surface at the base of a column, or ommatidium. In moths, this surface is also covered in microscopic tapered columns, or spikes. These prevent light from bouncing back out, so a greater proportion of it is detected. The team replicated this design in silicon, and you can see the result at top. Each spike is carefully engineered to reflect light downward and retain it, making the High-Resolution Airborne Wideband Camera-plus, or HAWC+, one of the most sensitive instruments out there. Funnily enough, the sensor they modified was originally called the backshort under-grid sensor, or BUGS.
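
A rough way to see why tapered spikes help is the textbook picture of moth-eye surfaces as a gradual change in refractive index instead of one abrupt jump. The sketch below compares the normal-incidence Fresnel reflectance of a bare air-to-silicon interface with a stack of small index steps. It assumes a silicon index of about 3.42 in the infrared, ignores interference and multiple reflections, and is an illustration of the trend, not NASA's design model.

```python
def interface_reflectance(n1: float, n2: float) -> float:
    """Normal-incidence Fresnel power reflectance of one abrupt interface."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def graded_reflectance(n_start: float, n_end: float, steps: int) -> float:
    """Fraction of light not transmitted straight through `steps` equal index jumps.

    First-order, incoherent model: each small jump reflects a little light and the
    losses multiply; interference and multiple reflections are ignored.
    """
    indices = [n_start + (n_end - n_start) * k / steps for k in range(steps + 1)]
    transmitted = 1.0
    for n1, n2 in zip(indices, indices[1:]):
        transmitted *= 1.0 - interface_reflectance(n1, n2)
    return 1.0 - transmitted


N_SILICON = 3.42  # assumed infrared refractive index of silicon

if __name__ == "__main__":
    print(f"abrupt interface: {interface_reflectance(1.0, N_SILICON):.1%} reflected")
    print(f"20-step gradient: {graded_reflectance(1.0, N_SILICON, 20):.1%} reflected")
```

Under these assumptions the abrupt interface reflects about 30% of the incoming light, while the 20-step gradient reflects only a few percent.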

|

Scooped by

Philippe J DEWOST

July 26, 2016 5:30 AM

|

You may think you’re no good at seeing in the dark, but your eyes are actually incredibly sensitive. In fact, according to a new study, the human eye is so sensitive it can detect even a single photon of light! The study was conducted by a team at Rockefeller University who used an innovative (and complicated) technique to reliably fire a single high energy photon directly at participants’ retinas. For their part, the participants just had to tell them when they saw something and rate how confident they were about the sighting.

The results were surprising, to say the least. We’re talking about the smallest particle of light, yet the study shows that people were able to correctly determine when a photon was fired 51.6% of the time (60% when they were very confident), a statistically significant result that is hard to explain by subjects simply guessing their way through. What’s more, subjects were more likely to detect a second photon if it was fired less than 10 seconds after the first.
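
Whether a 51.6% hit rate is convincing depends on how many trials it rests on. The sketch below uses a normal approximation to the binomial distribution, assuming that pure guessing would score 50% (a two-choice task); the trial counts are hypothetical and are not taken from the study.

```python
import math


def one_sided_p_value(hit_rate: float, trials: int, chance: float = 0.5) -> float:
    """Probability of a hit rate at least this high from pure guessing (normal approximation)."""
    standard_error = math.sqrt(chance * (1.0 - chance) / trials)
    z = (hit_rate - chance) / standard_error
    return 0.5 * math.erfc(z / math.sqrt(2.0))


if __name__ == "__main__":
    for trials in (100, 1_000, 10_000):  # hypothetical trial counts
        print(trials, round(one_sided_p_value(0.516, trials), 4))
```

With 100 trials, a 51.6% hit rate is easily explained by luck; with ten thousand trials it no longer is.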

|

Scooped by

Philippe J DEWOST

May 8, 2016 8:33 AM

|

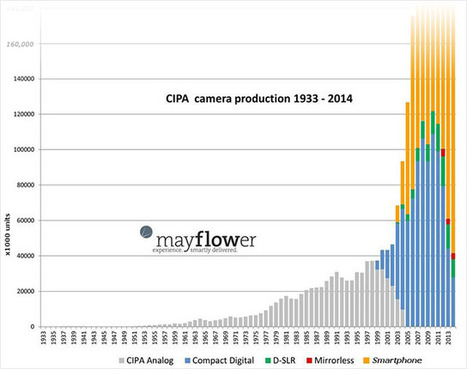

A few months ago, we shared a chart showing how sales in the camera market changed between 1947 and 2014. The data shows that after a large spike in the late 2000s, sales of dedicated cameras shrank by double-digit percentages in each of the following years. Mix in data for smartphone sales, and the chart can shed more light on the state of the industry.

|

Scooped by

Philippe J DEWOST

April 26, 2016 1:24 AM

|

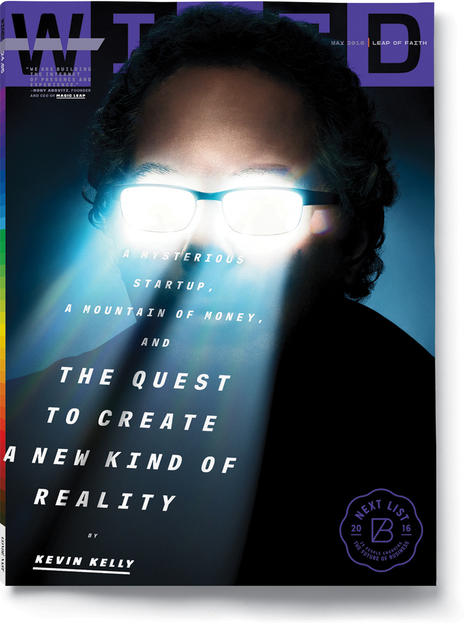

Among the first people Abovitz hired at Magic Leap was Neal Stephenson, author of Snow Crash, the other seminal work of VR anticipation. He wanted Stephenson to be Magic Leap’s chief futurist because “he has an engineer’s mind fused with that of a great writer.” Abovitz wanted him to lead a small team developing new forms of narrative. Again, the mythmaker would be making the myths real.

|

Scooped by

Philippe J DEWOST

March 30, 2016 7:42 AM

|

These user-created images are the product of an art technique known as Inceptionism, which uses neural networks to generate a single mind-bending picture from two source images. The images are made possible by DeepDream software, which finds and enhances patterns in images through a process known as algorithmic pareidolia. It was pioneered by Google and was originally code-named Inception, after the film of the same name.
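
The core idea behind DeepDream is gradient ascent on the input: instead of adjusting a network to fit an image, you adjust the image so that some feature detector responds more strongly. The toy sketch below does this for a 1D signal and a single hand-picked linear filter standing in for a layer of a trained network; it illustrates the principle only and is not Google's code.

```python
import math
from typing import List


def activations(x: List[float], w: List[float]) -> List[float]:
    """Response of filter w at every valid position: a[i] = sum_j w[j] * x[i + j]."""
    return [sum(wj * x[i + j] for j, wj in enumerate(w)) for i in range(len(x) - len(w) + 1)]


def objective(x: List[float], w: List[float]) -> float:
    """Half the summed squared response: grows as the pattern in w becomes more visible in x."""
    return 0.5 * sum(a * a for a in activations(x, w))


def gradient(x: List[float], w: List[float]) -> List[float]:
    """d objective / d x[m] = sum_i a[i] * w[m - i], accumulated filter tap by tap."""
    g = [0.0] * len(x)
    for i, ai in enumerate(activations(x, w)):
        for j, wj in enumerate(w):
            g[i + j] += ai * wj
    return g


def dream(x: List[float], w: List[float], steps: int = 20, step_size: float = 0.1) -> List[float]:
    """Gradient ascent on the input signal (not on the filter), with max-normalized steps."""
    x = list(x)
    for _ in range(steps):
        g = gradient(x, w)
        scale = max(abs(v) for v in g) or 1.0  # avoid dividing by zero on a flat gradient
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x


if __name__ == "__main__":
    stripes = [1.0, -1.0, 1.0, -1.0]  # hand-picked "feature detector"
    signal = [0.1 * math.sin(0.7 * i) for i in range(32)]
    dreamed = dream(signal, stripes)
    print(round(objective(signal, stripes), 3), "->", round(objective(dreamed, stripes), 3))
```

Because the objective is a sum of squares of linear responses, every step along the gradient increases it, and the signal tends toward the alternating pattern the filter responds to: the "enhance what you see" loop in miniature.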

|

Scooped by

Philippe J DEWOST

February 19, 2016 7:02 AM

|

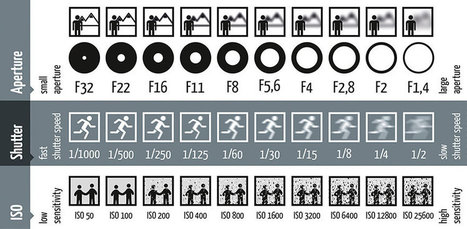

If you’re a beginner photographer, it can be helpful to have a simple guide that helps you understand the different settings that you can toggle on your DSLR camera. While this helpful exposure chart by Daniel Peters at Fotoblog Hamburg won’t explain HOW the optics of photography work, it will show you exactly what happens when you tweak your camera’s settings.
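
The chart itself isn't reproduced here, but the trade-offs it illustrates can be summarized with the standard exposure value (EV) formula, EV = log2(N²/t), shifted by one stop for each doubling of ISO. The sketch below is a generic illustration of that formula, not something taken from Daniel Peters' chart.

```python
import math


def exposure_value(f_number: float, shutter_s: float, iso: float = 100.0) -> float:
    """ISO-100-referenced exposure value for a given aperture, shutter time and ISO.

    Settings with (nearly) equal EV yield the same overall exposure: one stop wider
    aperture, half the shutter time, or double the ISO trade off against each other.
    """
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100.0)


if __name__ == "__main__":
    print(round(exposure_value(8, 1 / 125), 2))           # f/8, 1/125 s, ISO 100
    print(round(exposure_value(8, 1 / 250, iso=200), 2))  # faster shutter, higher ISO
    print(round(exposure_value(5.6, 1 / 250), 2))         # wider aperture, faster shutter
```

The first two lines print 12.97 and the third prints 12.94: f/5.6 is a rounded marking for √32, so it differs from a full stop by about 0.03.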

|

Scooped by

Philippe J DEWOST

February 17, 2016 12:50 AM

|

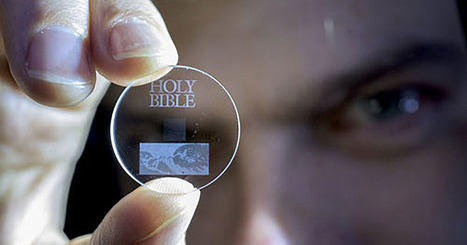

Scientists have created nanostructured glass discs that can store digital data for billions of years. Researchers at the University of Southampton announced this week that they’ve figured out how to store huge amounts of data on small glass discs using laser writing. They call it five-dimensional (5D) digital data because, in addition to the three-dimensional position of the data, the size and orientation of each written nanostructure play a role too. The glass storage discs can hold a whopping 360 terabytes each, are stable at temperatures up to 1,000°C (1,832°F), and are expected to keep the data intact for 13.8 billion years at room temperature (anything up to 190°C, or 374°F).
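
The “5D” label is easiest to see as arithmetic: if each written nanostructure can take several sizes and several orientations, it stores log2(sizes × orientations) bits on top of its 3D position. The level counts below are hypothetical, chosen only for illustration; the post doesn't give Southampton's actual encoding. The temperature conversion checks the figures quoted above.

```python
import math


def bits_per_dot(size_levels: int, orientation_levels: int) -> float:
    """Bits carried by one written nanostructure whose size and orientation both encode data."""
    return math.log2(size_levels * orientation_levels)


def dots_needed(capacity_bytes: float, bits_each: float) -> float:
    """Number of nanostructures required to hold capacity_bytes at bits_each per dot."""
    return capacity_bytes * 8 / bits_each


def celsius_to_fahrenheit(celsius: float) -> float:
    return celsius * 9 / 5 + 32


if __name__ == "__main__":
    bits = bits_per_dot(4, 4)                  # hypothetical: 4 sizes x 4 orientations
    print(bits)                                # 4.0 bits per dot
    print(f"{dots_needed(360e12, bits):.2e}")  # dots for 360 TB (decimal terabytes)
    print(celsius_to_fahrenheit(1000), celsius_to_fahrenheit(190))
```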

|

Scooped by

Philippe J DEWOST

February 7, 2016 6:47 AM

|

You will be shown 10 pairs of pictures. In each pair, one was painted by a human and the other was generated by artificial intelligence from a photo and a painter’s style. Click on the picture painted by a human.

|

Scooped by

Philippe J DEWOST

February 2, 2016 9:41 AM

|

Technically, today is the 15th anniversary of the relicensing of all the VideoLAN software under the GPL, as agreed by the École Centrale Paris on February 1st, 2001. If you've been to one of my talks (if you haven't, you should come to one), you know that the project that became VideoLAN and VLC is almost 5 years older than that, and was called Network 2000. Moreover, the first commit on the VideoLAN Client project, made by Michel Kaempf on August 8th, 1999, already had 21,275 lines of code, so work on the VLC software started earlier in 1999. However, the most important date for the birth of VLC is when it was allowed to be used outside of the school, and therefore when the project was GPL-ized: February 1st, 2001.

Facts and numbers

Since then, on VLC alone, we've had around:

- 700 contributors,

- 70000 commits,

- at least 2 billion downloads,

- hundreds of millions users!

And all that, mostly with volunteers and without turning into a business!

We now have ports for Windows, GNU/Linux, BSD, OS X, iPhone and iPad, Android, Solaris, Windows Phones, BeOS, OS/2, Android TV, Apple TV, Tizen and ChromeOS.

|

Scooped by

Philippe J DEWOST

December 8, 2015 1:44 PM

|

Robert Bösch is a Swiss mountain guide, but also a renowned photographer currently working in collaboration with the Mammut brand. The company, which sells equipment for mountain sports, wanted to pay tribute to one of the pioneers of mountaineering: Edward Whymper. Robert Bösch and a group of climbers will stage a re-enactment of the first ascent of the Matterhorn (Cervin), Switzerland’s best-known mountain, achieved 150 years earlier by Edward Whymper. The red lights along the Hörnli ridge trace the English climber’s route. At the time, it was a genuine challenge against death in the name of exploration.

|

Scooped by

Philippe J DEWOST

October 29, 2015 8:03 AM

|

Parts of Chile's Atacama Desert, one of the driest places on Earth, look like a psychedelic wonderland as pink mallow flowers bloom in the valley following a year of unprecedented rain. Massive downpours in March gave parts of the desert their first taste of rain in almost seven years. Some areas got as much as seven years' worth of rain in just 12 hours.

|

Scooped by

Philippe J DEWOST

October 27, 2015 10:36 AM

|

On Monday, Xiaomi unveiled the Mi TV 3, a 60-inch 4K TV priced at RMB 4,999 ($780). Like the Mi TV 2 and the company's previous-generation television sets, the Mi TV 3 has been launched in Xiaomi's home market, China.

The Mi TV 3 sports an LG-made 60-inch 4K display touted for its lossless quality, MEMC (motion estimation and motion compensation), and color gamut. A full aluminum frame runs along the sides, and at 11.6mm, the Mi TV 3 is pretty sleek too. It is powered by an MStar 6A928 processor with Cortex-A17 CPU cores and a Mali-T760 GPU. An 8GB eMMC 5.0 flash module provides the internal storage. The company hasn't revealed how much RAM the TV has.

On the connectivity side, there are three HDMI ports, two USB ports, one VGA port, one Ethernet port, one AV input, a subwoofer output, and a standard RF modulator. On the audio front, the Mi TV 3 features Virtual Surround technology, deeper bass, and a dialogue enhancement tool with auto volume balance support. The company says the TV's acoustics were developed by Grammy Award winner Luca Bugnardi and Wang Fuyu, former head of research at Philips Acoustics.

The speaker bar, which sells separately for RMB 999 ($160), is made up of four 2.5-inch mid-range subwoofers and, interestingly, comes with the TV's main board. The company says it has separated the processor board from the screen because doing so significantly reduces the replacement cost of internal components. The company also claimed that this extends the TV's life cycle.

|

|

Scooped by

Philippe J DEWOST

December 24, 2016 9:42 AM

|

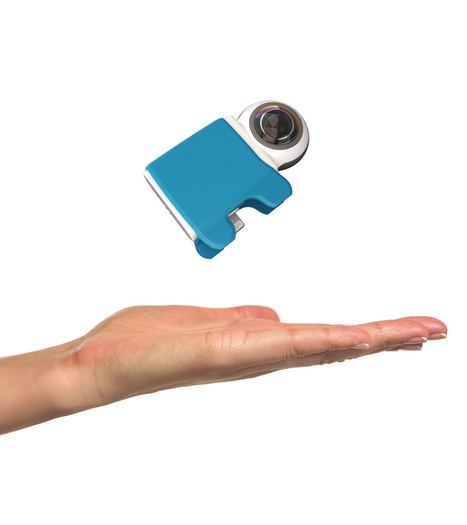

Giroptic, creator of the standalone ‘360cam’, today announced the launch of the iO 360 camera, which attaches to any Lightning-enabled iPhone or iPad. The Giroptic iO 360 camera for iPhone/iPad is available starting today for $250. With two counter-facing lenses, the camera enables Apple devices to capture full 360-degree photo and video spheres. The camera currently supports 360-degree livestreaming via YouTube, and support for 360 Facebook Live is planned. Specs include two 195-degree lenses with an aperture of f/1.8, an onboard stereo microphone, and a rechargeable battery. Video is captured at 1920×960 resolution at 30 FPS and is stitched in real time, with no post-processing needed. Photos are shot at a higher 3840×1920 resolution.
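
Two back-to-back lenses of 195 degrees each leave some overlap at the seam for real-time stitching, and the 2:1 frame sizes match the common equirectangular layout for 360-degree media. The sketch below works through both under two assumptions the post doesn't state: the lenses point in exactly opposite directions, and the stitched output is equirectangular.

```python
from typing import Tuple


def seam_overlap_deg(lens_fov_deg: float) -> float:
    """Width of the band both lenses see at the seam, for two exactly opposed fisheyes.

    Each lens covers lens_fov_deg / 2 off its axis, so it reaches (fov/2 - 90) degrees
    past the hemisphere boundary; the two lenses together overlap by twice that.
    """
    return 2 * (lens_fov_deg / 2 - 90)


def equirect_pixel(longitude_deg: float, latitude_deg: float,
                   width: int, height: int) -> Tuple[int, int]:
    """Map a viewing direction to a pixel in an equirectangular frame (assumed layout)."""
    x = (longitude_deg + 180.0) / 360.0 * width
    y = (90.0 - latitude_deg) / 180.0 * height
    return min(int(x), width - 1), min(int(y), height - 1)


if __name__ == "__main__":
    print(seam_overlap_deg(195))                # overlap band at the seam, in degrees
    print(equirect_pixel(0, 0, 1920, 960))      # straight ahead, on the horizon
    print(1920 / 360, 3840 / 360)               # pixels per degree: video vs. photo
```

Under these assumptions the seam band is 15 degrees wide, and the photo mode's 3840-pixel width gives about 10.7 pixels per degree of longitude versus about 5.3 for video.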

|

Scooped by

Philippe J DEWOST

November 9, 2016 2:39 AM

|

Umberto Eco was already writing about it in Foucault’s Pendulum in 1988.

“Have you ever been to number 145, rue Lafayette?”

“I confess I haven’t.”

“It’s a bit out of the way, between the Gare de l’Est and the Gare du Nord. A building that is unremarkable at first. Only if you look at it more closely do you realize that the doors seem to be wooden but are made of painted iron, and that the windows look onto rooms that have been uninhabited for centuries. Never a light. But people walk by and don’t know.”

“Don’t know what?”

“That it’s a fake house. It’s a facade, a shell with no roof and nothing inside. Empty. It is nothing but the mouth of a chimney. It’s used for ventilation or to carry away the fumes from the RER. And when you realize it, you feel you’re standing before the jaws of Hell; and that only if you could get through those walls would you have access to underground Paris. I have sometimes spent hours and hours in front of those doors that conceal the door of doors, the departure station for the journey to the center of the earth. Why do you think they did it?”

“To ventilate the metro, you said.”

“The air vents would have been enough. No, it’s in front of these underground passages that I begin to have my suspicions. Do you understand me?”

|

Scooped by

Philippe J DEWOST

June 1, 2016 9:09 AM

|

The severe weather that has been hitting France for several days has almost turned the Château de Chambord (Loir-et-Cher) into an island. Half of the access roads are closed, the fire safety system is out of order, and all the car parks are either inaccessible or under water. For safety reasons, the monument is closed to the public this Wednesday. François I’s dream of a château rising from the waters, waters he is said to have wanted to divert from the Loire, has come true.

|

Scooped by

Philippe J DEWOST

April 27, 2016 2:20 AM

|

Moonset over Mount Sharp

This image combines a single Mastcam frame taken of Phobos behind Mt. Sharp on sol 613 (April 28, 2014) with three images from a 360-degree mosaic acquired during the afternoon of sol 610 (April 24, 2014) to extend the foreground view and balance the image composition.

The moonset view came from one sol; Justin extended the mosaic with some images taken on a previous sol. "The sol 610 frames were adjusted to match the color of the Sol 613 image. As these additional frames were in the opposite direction of the Sun, very few shadows are present, ideal for matching the post-sunset lighting conditions of the sol 613 image," he writes.

A bit of Phobos trivia: Curiosity's view here is to the east, but this is indeed a moonset, not a moonrise. Phobos orbits so close to Mars that it moves around Mars faster than Mars rotates, and consequently it appears to rise in the west and set in the east!
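
The east-west reversal follows from comparing angular speeds. Seen from the ground, a moon drifts at the difference between its orbital rate and the planet's rotation rate; if the orbit is faster, the moon overtakes the turning sky and rises in the west. The sketch uses approximate published periods (Phobos about 7.65 h, Deimos about 30.3 h, Mars's sidereal rotation about 24.62 h), with both moons orbiting in the same direction that Mars rotates.

```python
from typing import Tuple

PHOBOS_ORBIT_H = 7.654    # approximate sidereal orbital period of Phobos, hours
DEIMOS_ORBIT_H = 30.31    # approximate sidereal orbital period of Deimos, hours
MARS_ROTATION_H = 24.623  # approximate sidereal rotation period of Mars, hours


def apparent_motion(orbit_h: float, rotation_h: float) -> Tuple[str, float]:
    """Return (rising direction, hours between successive risings) for a prograde moon.

    The relative rate is orbital rate minus rotation rate, in revolutions per hour.
    Positive means the moon outruns the surface and drifts eastward across the sky.
    """
    rate = 1.0 / orbit_h - 1.0 / rotation_h
    direction = "rises in the west" if rate > 0 else "rises in the east"
    return direction, abs(1.0 / rate)


if __name__ == "__main__":
    print(apparent_motion(PHOBOS_ORBIT_H, MARS_ROTATION_H))
    print(apparent_motion(DEIMOS_ORBIT_H, MARS_ROTATION_H))
```

For Phobos this gives roughly 11 hours between successive risings, in the west; Deimos, which orbits more slowly than Mars turns, rises in the east like our own Moon.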

|

Scooped by

Philippe J DEWOST

April 13, 2016 5:22 AM

|

Introducing Facebook Surround 360: An open, high-quality 3D-360 video capture system

- Facebook has designed and built a durable, high-quality 3D-360 video capture system.

- The system includes a design for camera hardware and the accompanying stitching code, and we will make both available on GitHub this summer. We're open-sourcing the camera and the software to accelerate the growth of the 3D-360 ecosystem — developers can leverage the designs and code, and content creators can use the camera in their productions.

- Building on top of an optical flow algorithm is a mathematically rigorous approach that produces superior results. Our code uses optical flow to compute left-right eye stereo disparity. We leverage this ability to generate seamless stereoscopic 360 panoramas, with little to no hand intervention.

- The stitching code drastically reduces post-production time. What is usually done by hand can now be done by algorithm, taking the stitching time from weeks to overnight.

- The system exports 4K, 6K, and 8K video for each eye. The 8K videos double industry standard output and can be played on Gear VR with Facebook's custom Dynamic Streaming technology.
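
Facebook's stitching code is not reproduced here. As a much-simplified illustration of the optical-flow idea in the bullets above, the sketch below uses an iterative one-dimensional Lucas-Kanade estimate (a classic optical-flow method, swapped in for whatever the real code does) to find the horizontal shift, or disparity, between a left and a right scanline, then converts it to depth with the pinhole stereo relation Z = f·B/d. The synthetic scanlines, focal length, and baseline are made up for the example.

```python
import math
from typing import List


def sample(signal: List[float], x: float) -> float:
    """Linearly interpolate `signal` at fractional position x (clamped to the ends)."""
    x = min(max(x, 0.0), len(signal) - 1.0)
    i = min(int(x), len(signal) - 2)
    t = x - i
    return (1.0 - t) * signal[i] + t * signal[i + 1]


def estimate_disparity(left: List[float], right: List[float],
                       iterations: int = 10, margin: int = 5) -> float:
    """Iterative 1D Lucas-Kanade: find d such that right[x] ~= left[x - d]."""
    d = 0.0
    for _ in range(iterations):
        num = den = 0.0
        for x in range(margin, len(left) - margin):
            warped = sample(left, x - d)  # left scanline moved by the current guess
            grad = (sample(left, x - d + 1) - sample(left, x - d - 1)) / 2.0
            residual = right[x] - warped  # what the current guess fails to explain
            num += grad * residual
            den += grad * grad
        if den == 0.0:
            break
        d -= num / den  # Gauss-Newton step: residual ~= -(correction) * grad
    return d


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo for rectified cameras: Z = f * B / |d| (infinite at zero disparity)."""
    d = abs(disparity_px)  # the sign only depends on which camera is called "left"
    return math.inf if d == 0 else focal_px * baseline_m / d


def scene(x: float) -> float:
    """A smooth synthetic scanline standing in for real image intensities."""
    return math.sin(0.2 * x) + 0.5 * math.sin(0.05 * x)


if __name__ == "__main__":
    left = [scene(x) for x in range(200)]
    right = [scene(x - 1.5) for x in range(200)]  # right view shifted by 1.5 pixels
    d = estimate_disparity(left, right)
    print(round(d, 2))
    print(depth_from_disparity(focal_px=1000.0, baseline_m=0.064, disparity_px=d))  # hypothetical camera
```

On this synthetic data the estimate lands very close to the 1.5-pixel shift used to build the right scanline; with the assumed 1,000-pixel focal length and 6.4 cm baseline, that corresponds to a depth of about 43 m.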

Today we announced Facebook Surround 360, a high-quality, production-ready 3D-360 hardware and software video capture system. In designing this camera, we wanted to create a professional-grade end-to-end system that would capture, edit, and render high-quality 3D-360 video. In doing so, we hoped to meaningfully contribute to the 3D-360 camera landscape by creating a system that would enable more VR content producers and artists to start producing 3D-360 video.

Defining the challenges of VR capture

When we started this project, all the existing 3D-360 video cameras we saw were either proprietary (so the community could not access those designs), available only by special request, or fundamentally unreliable as an end-to-end system in a production environment. In most cases, the cameras in these systems would overheat, the rigs weren't sturdy enough to mount to production gear, and the stitching would take a prohibitively long time because it had to be done by hand. So we set out to design and build a 3D-360 video camera that did what you'd expect an everyday camera to do: capture, edit, and render reliably every time. That sounds obvious and almost silly, but it turned out to be a technically daunting challenge for 3D-360 video. Many of the technical challenges for 3D video stem from shooting the footage in stereoscopic 360. Monoscopic 360, using two or more cameras to capture the whole 360 scene, is pretty mainstream. The resulting images allow you to look around the whole scene but are rather flat, much like a still photo. However, things get much more complicated when you want to capture 3D-360 video. Unlike monoscopic video, 3D video requires depth. We get depth by capturing each location in a scene with two cameras, the camera equivalent of your left eye and right eye. That means you have to shoot in stereoscopic 360, with 10 to 20 cameras collectively pointing in every direction.
Furthermore, all the cameras must capture 30 or 60 frames per second, exactly and simultaneously. In other words, they must be globally synchronized. Finally, you need to fuse or stitch all the images from each camera into one seamless video, and you have to do it twice: once from the virtual position for the left eye, and once for the right eye. This last step is perhaps the hardest to achieve, and it requires fairly sophisticated computational photography and computer vision techniques. The good news is that both of these have been active areas of research for more than 20 years. The combination of past algorithm research, the rapid improvement and availability of image sensors, and the decreasing cost of memory components like SSDs makes this project possible today. It would have been nearly impossible as recently as five years ago.

The VR capture system

With these challenges in mind, we began experimenting with various prototypes and settled on the three major components we felt were needed to make a reliable, high-quality, end-to-end capture system:

- The hardware (the camera and control computer)

- The camera control software (for synchronized capture)

- The stitching and rendering software

All three are interconnected and require careful design and control to achieve our goals of reliability and quality. Weakness in one area would compromise quality or reliability in another area. Additionally, we wanted the hardware to be off-the-shelf. We wanted others to be able to replicate or modify our design based on our design specs and software without having to rely on us to build it for them. We wanted to empower technical and creative teams outside of Facebook by allowing them full access to develop on top of this technology.

The camera hardware

As with any system, we started by laying out the basic hardware requirements. Relaxing any one of these would compromise quality or reliability, and sometimes both.

Camera requirements:

- The cameras must be globally synchronized. All the frames must capture the scene at the same time, within less than 1 ms of one another. If the frames are not synchronized, it can become quite hard to stitch them together into a single coherent image.

- Each camera must have a global shutter. All the pixels must see the scene at the same time. Cell phone cameras, for example, don't do this; they have a rolling shutter, which reads the sensor out row by row. Without a global shutter, fast-moving objects smear diagonally across the frame, from top to bottom.

- The cameras themselves can’t overheat, and they need to be able to run reliably over many hours of on-and-off shooting.

- The rig and cameras must be rigid and rugged. Processing later becomes much easier and higher quality if the cameras stay in one position.

- The rig should be relatively simple to construct from off-the-shelf parts so that others can replicate, repair, and replace parts.
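
The first two requirements are about timing, and a few lines of arithmetic make them concrete. The sketch below checks whether one frame's capture timestamps from all cameras fall within the 1 ms window, and estimates how far a rolling shutter skews a sideways-moving object between the top and bottom rows. The timestamps, object speed, and sensor readout time are hypothetical values, not Surround 360 measurements.

```python
from typing import Sequence


def sync_spread_ms(timestamps_ms: Sequence[float]) -> float:
    """Spread between the earliest and latest camera capture times for a single frame."""
    return max(timestamps_ms) - min(timestamps_ms)


def is_globally_synchronized(timestamps_ms: Sequence[float], tolerance_ms: float = 1.0) -> bool:
    """True if every camera captured this frame within `tolerance_ms` of every other camera."""
    return sync_spread_ms(timestamps_ms) < tolerance_ms


def rolling_shutter_skew_px(speed_px_per_s: float, readout_time_s: float) -> float:
    """Horizontal offset between the first and last sensor row for an object moving sideways.

    A rolling shutter reads rows one after another, so the last row is exposed
    readout_time_s later than the first; with a global shutter the skew is zero.
    """
    return speed_px_per_s * readout_time_s


if __name__ == "__main__":
    frame_interval_ms = 1000 / 30                          # 30 fps
    print(round(frame_interval_ms, 1))                     # 33.3 ms between frames
    print(is_globally_synchronized([0.0, 0.2, 0.4, 0.9]))  # hypothetical timestamps
    print(rolling_shutter_skew_px(2000, 0.02))             # hypothetical: 2000 px/s, 20 ms readout
```

At 30 fps the 1 ms budget is only about 3% of the time between frames, and in this hypothetical case the rolling shutter would shift the bottom of a moving object roughly 40 pixels relative to its top.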

We addressed each of these requirements in our design. Industrial-strength cameras by Point Grey have global shutters and do not overheat when they run for a long time. The cameras are bolted onto an aluminum chassis, which ensures that the rig and cameras won't bounce around. The outer shell is made with powder-coated steel to protect the internal components from damage. (Lest anyone think an aluminum chassis or steel frame is hard to come by, any machining shop will do the honors once handed the specs.)

|

Scooped by

Philippe J DEWOST

March 23, 2016 1:58 AM

|

Cambridge-based image fusion pioneer attracts major backing to commercialise product portfolio

Spectral Edge (http://www.spectraledge.co.uk/) today announced the successful completion of an oversubscribed £1.5 million second funding round. New lead investors IQ Capital and Parkwalk Advisors, along with angel investors from Cambridge Angels, Wren Capital, Cambridge Capital Group and Martlet, the Marshall of Cambridge Corporate Angel investment fund, join the Rainbow Seed Fund/Midven and Iceni in backing the company. Spun out of the University of East Anglia (UEA) Colour Lab, Spectral Edge has developed innovative image fusion technology. This combines different types of image, ranging from the visible to the invisible (such as infrared and thermal), to enhance detail, aid visual accessibility, and create ever more beautiful pictures. Spectral Edge’s Phusion technology platform has already been proven in the visual accessibility market, where independent studies have shown that it can transform the TV viewing experience for the estimated 4% of the world’s population that suffers from colour-blindness. It enhances live TV and video, allowing colour-blind viewers to differentiate between colour combinations such as red-green and pink-grey so that otherwise inaccessible content such as sport can be enjoyed. The new funding will be used to expand Spectral Edge’s team, increase investment in sales and marketing, and underpin development of its product portfolio into IP-licensable products and reference designs. Spectral Edge is mainly targeting computational photography, where blending near-infrared and visible images gives higher quality, more beautiful results with greater depth. Other applications include security, where the combination of visible and thermal imaging enhances details to provide easier identification of people filmed on surveillance cameras, as well as visual accessibility through its Eyeteq brand.
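
Phusion itself is proprietary, and the release doesn't describe how it works. For a feel of what “blending near-infrared and visible images” can mean, here is a deliberately naive sketch: it mixes a near-infrared value into each pixel's luminance (Rec. 601 luma weights) and rescales the RGB channels so the ratios between them are kept, unless a channel clips. The blend weight is an arbitrary parameter, and this is not Spectral Edge's algorithm.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def fuse_pixel(rgb: RGB, nir: float, weight: float = 0.5) -> RGB:
    """Blend a near-infrared value into the luminance of an RGB pixel, keeping channel ratios.

    rgb: (r, g, b) in [0, 1]; nir: near-infrared intensity in [0, 1];
    weight: share of the new luminance taken from the near-infrared image.
    """
    r, g, b = rgb
    luma = 0.299 * r + 0.587 * g + 0.114 * b  # Rec. 601 luma weights
    if luma == 0.0:
        return (weight * nir,) * 3  # black pixel: no colour to preserve, use grey
    fused = (1.0 - weight) * luma + weight * nir
    scale = fused / luma
    return tuple(min(1.0, c * scale) for c in rgb)


if __name__ == "__main__":
    foliage = (0.2, 0.4, 0.1)  # hypothetical visible pixel
    print(fuse_pixel(foliage, nir=0.8, weight=0.5))
```

A real pipeline would also align the two images and work on whole frames, but the per-pixel step shows how invisible-band detail can be carried into a visible picture.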
"Spectral Edge is a true pioneer in the field of photography. They are set to disrupt and transform the imaging sector, not just within consumer and professional photography, but also across a broad range of business sectors,” said Max Bautin, Managing Partner at IQ Capital. "Backed by a robust catalogue of IP, Spectral Edge’s technology enables individuals and companies to take pictures and record videos with unparalleled detail by taking advantage of non-visible information like near-infra red and heat. We are proud to add Spectral Edge to our portfolio of companies. We back cutting-edge IP-rich technology which pushes the boundaries but also has a proven track record of experiencing stable growth, and Spectral Edge fits that mould perfectly." “We are delighted to support Professor Graham Finlayson and his team at Spectral Edge,” said Alastair Kilgour CIO Parkwalk Advisors. “We believe Phusion could prove to be a substantial enhancement to the quality of digital imaging and as such have significant commercial prospects.” Spectral Edge is led by an experienced team that combines deep technical and business experience. It includes Professor Graham Finlayson, Head of Vision Group and Professor of Computing Science, UEA, Christopher Cytera (managing director) and serial entrepreneur Dr Robert Swann (chairman).

|

Scooped by

Philippe J DEWOST

February 18, 2016 2:32 AM

|

Destin of ‘Smarter Every Day’ explores the mystery of Prince Rupert’s drop using an ultra-high-speed camera.

|

Scooped by

Philippe J DEWOST

February 12, 2016 4:38 AM

|

The Guangzhou, China-based company Techart has officially unveiled the Techart PRO AF adapter, the world’s first autofocus adapter for manual-focus lenses. The adapter, which was teased last month, actually lets you autofocus with lenses that don’t have that ability.

|

Rescooped by

Philippe J DEWOST

from cross pond high tech

February 3, 2016 10:09 AM

|

Magic Leap raised $794 million in new funding, and CEO Rony Abovitz posted a blog suggesting the secretive company is moving closer toward a product, writing “we are setting up supply chain operations, manufacturing.” Chinese e-commerce company Alibaba led the round, and Joe Tsai, Alibaba’s Executive Vice Chairman, is getting a seat on the board. The announcement roughly confirms a December report suggesting the company was raising money in this ballpark. The Series C round brings the Florida startup’s total funding to date close to $1.4 billion. Magic Leap also seems to have named its technology “Mixed Reality Lightfield,” and the blog post linked above contains subtle language that might be commentary on current VR technology, which isn’t able to perfectly reproduce what your eyes see in the real world. “It comes to life by following the rules of the eye and the brain, by being gentle, and by working with us, not against us,” Abovitz wrote about the company’s technology. “By following as closely as possible the rules of nature and biology.” Abovitz previously suggested Rift-like VR headsets have a history of “issues that near-eye stereoscopic 3d may cause” and that “we have done an internal hazard and risk analysis….on the spectrum of hazards that may occur to a wide array of users.”

|

Scooped by

Philippe J DEWOST

December 29, 2015 10:32 AM

|

Dave Sandford is a professional sports photographer of 18 years whose hometown is London, Ontario, Canada. Over the past 4 weeks, for 2 to 3 days per week, Sandford has been driving 45 minutes to Lake Erie and spending up to 6 hours a day photographing the lake. The photos are awe-inspiring: Sandford gets in the water and shoots the powerful, choppy waves in a way that makes them look like epic mountain peaks exploding into the atmosphere.

|

Scooped by

Philippe J DEWOST

November 5, 2015 1:52 AM

|

An affordable camera technology being developed by the University of Washington and Microsoft Research might enable consumers of the future to tell which piece of fruit is perfectly ripe or what’s rotting in the fridge. The team of computer scientists and electrical engineers developed HyperCam, a lower-cost hyperspectral camera that uses both visible and invisible near-infrared light to “see” beneath surfaces and capture unseen details. This type of camera is typically used in industrial applications and can cost anywhere from several thousand to tens of thousands of dollars. In a paper presented at the UbiComp 2015 conference, the team detailed a hardware solution that costs roughly $800, or potentially as little as $50 to add to a mobile phone camera. They also developed intelligent software that easily finds “hidden” differences between what the hyperspectral camera captures and what can be seen with the naked eye.
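
The post doesn't explain how HyperCam's software surfaces those “hidden” differences. A simple, standard way to expose information that only shows up outside the visible range is a normalized difference between a near-infrared band and a visible band, the same construction as the NDVI vegetation index used in remote sensing. In the sketch below, two hypothetical pixels have identical red readings but different near-infrared readings; the band values are invented for illustration, and this is not HyperCam's method.

```python
def normalized_difference(nir: float, visible: float) -> float:
    """(NIR - visible) / (NIR + visible): in [-1, 1] for non-negative inputs, 0 if both are zero."""
    total = nir + visible
    return 0.0 if total == 0 else (nir - visible) / total


if __name__ == "__main__":
    fresh = {"red": 0.10, "nir": 0.50}    # hypothetical band readings
    bruised = {"red": 0.10, "nir": 0.30}  # same red reading, different near-infrared
    for name, pixel in (("fresh", fresh), ("bruised", bruised)):
        print(name, round(normalized_difference(pixel["nir"], pixel["red"]), 2))
```

The two pixels are indistinguishable in the red band alone, yet the index separates them clearly.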

|

Scooped by

Philippe J DEWOST

October 27, 2015 4:47 PM

|

After five years of development and about 40,000 tests worldwide, the smartphone-powered eye-test device developed by MIT spinout EyeNetra is coming to hospitals, optometric clinics, optical stores, and even homes nationwide. But on the heels of its commercial release, EyeNetra says it has been pursuing opportunities to collaborate with virtual-reality companies seeking to use the technology to develop “vision-corrected” virtual-reality displays. “As much as we want to solve the prescription glasses market, we could also [help] bring virtual reality to the masses,” says EyeNetra co-founder Ramesh Raskar, an associate professor of media arts and sciences at the MIT Media Lab who co-invented the device. The device, called Netra, is a plastic, binocular-like headset. Users attach a smartphone running the startup’s app to the front and peer through the headset at the phone’s display. Patterns, such as separate red and green lines or circles, appear on the screen. The user turns a dial to align the patterns and pushes a button to lock them in place. After eight interactions, the app calculates the difference between what the user sees as “aligned” and the actual alignment of the patterns. This reveals any refractive errors, such as nearsightedness, farsightedness, and astigmatism. The app then displays the refractive powers, axis of astigmatism, and pupillary distance required for eyeglasses prescriptions. In April, the startup launched Blink, an on-demand refractive test service in New York, where employees bring the startup’s optometry tools, including the Netra device, to people’s homes and offices. In India, EyeNetra has launched Nayantara, a similar program to provide low-cost eye tests to the poor and uninsured in remote villages, far from eye doctors. Both efforts use EyeNetra’s suite of tools, now available to eye-care providers worldwide. According to the World Health Organization, uncorrected refractive errors are the world’s second-highest cause of blindness.
EyeNetra originally invented the device for the developing world — specifically, for poor and remote regions of Africa and Asia, where many people can’t find health care easily. India alone has around 300 million people in need of eyeglasses.
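
The post doesn't say how the Netra app turns alignment settings into a prescription. One textbook way to get sphere, cylinder, and axis from power readings is to fit the sphero-cylinder meridian formula P(θ) = S + C·sin²(θ - α) to powers measured along several meridians. The sketch assumes, hypothetically, that each of the eight alignments yields the refractive power along a different meridian, equally spaced over 180 degrees.

```python
import math
from typing import List, Tuple


def meridian_power(theta_deg: float, sphere: float, cylinder: float, axis_deg: float) -> float:
    """Refractive power (diopters) along meridian theta for a sphero-cylindrical error."""
    return sphere + cylinder * math.sin(math.radians(theta_deg - axis_deg)) ** 2


def fit_sphero_cylinder(meridians_deg: List[float],
                        powers_d: List[float]) -> Tuple[float, float, float]:
    """Recover (sphere, cylinder, axis_deg) in minus-cylinder form.

    P(theta) = S + C*sin^2(theta - axis) equals a + b*cos(2*theta) + c*sin(2*theta).
    For meridians equally spaced over 180 degrees, the least-squares coefficients
    reduce to the averages below.
    """
    n = len(powers_d)
    a = sum(powers_d) / n
    b = 2.0 / n * sum(p * math.cos(math.radians(2 * t)) for t, p in zip(meridians_deg, powers_d))
    c = 2.0 / n * sum(p * math.sin(math.radians(2 * t)) for t, p in zip(meridians_deg, powers_d))
    r = math.hypot(b, c)  # r = |C| / 2
    cylinder = -2.0 * r   # minus-cylinder convention
    sphere = a + r        # a = S + C/2 = S - r
    axis = (math.degrees(math.atan2(c, b)) / 2.0) % 180.0
    return sphere, cylinder, axis


if __name__ == "__main__":
    meridians = [k * 22.5 for k in range(8)]  # hypothetical: eight equally spaced readings
    powers = [meridian_power(t, -2.0, -1.0, 30.0) for t in meridians]
    print([round(v, 2) for v in fit_sphero_cylinder(meridians, powers)])  # [-2.0, -1.0, 30.0]
```

The fit recovers the -2.00 D sphere, -1.00 D cylinder, and 30-degree axis used to generate the readings; with unequally spaced meridians, a general least-squares solve would replace the simple averages.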

|


3D Printed Photo Lenses may seem a conceptual bizarrerie. Yet they exist and even seem to deliver ...