
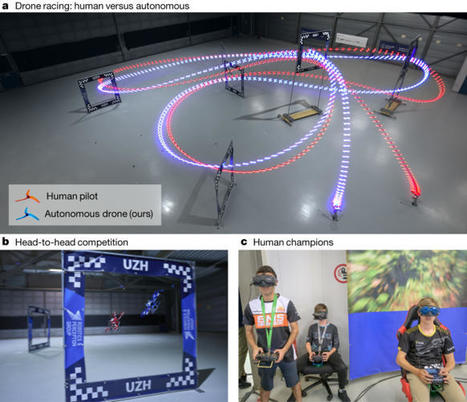

An autonomous drone has competed against human drone-racing champions, and won. The victory can be attributed to savvy engineering and a type of artificial intelligence that learns mostly through trial and error. First-person view (FPV) drone racing is a televised sport in which professional competitors pilot high-speed aircraft through a 3D circuit. Each pilot sees the environment from the perspective of their drone by means of video streamed from an onboard camera. Reaching the level of professional pilots with an autonomous drone is challenging because the robot needs to fly at its physical limits while estimating its speed and location in the circuit exclusively from onboard sensors. In this paper, the authors introduce Swift, an autonomous system that can race physical vehicles at the level of the human world champions. The system combines deep reinforcement learning (RL) in simulation with data collected in the physical world. Swift competed against three human champions, including the world champions of two international leagues, in real-world head-to-head races. Swift won several races against each of the human champions and demonstrated the fastest recorded race time. This work represents a milestone for mobile robotics and machine intelligence, which may inspire the deployment of hybrid learning-based solutions in other physical systems.
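
Learning "mostly through trial and error" means the policy is trained to maximize a reward signal in simulation. The exact reward Swift uses is specified in the paper, not here; the sketch below only assumes a common progress-style term for racing: the drone earns reward for closing the distance to the next gate and receives a fixed penalty on a crash. The function name and both weights are hypothetical.

```python
import math

# Hypothetical weights, chosen only for illustration.
PROGRESS_WEIGHT = 1.0
CRASH_PENALTY = -5.0


def progress_reward(prev_pos, pos, gate_center, crashed):
    """Reward the reduction in distance to the next gate center.

    Positive when the drone moved toward the gate during the last step,
    negative when it moved away; a crash replaces the reward with a penalty.
    Positions are (x, y, z) tuples in the same units.
    """
    if crashed:
        return CRASH_PENALTY
    progress = math.dist(prev_pos, gate_center) - math.dist(pos, gate_center)
    return PROGRESS_WEIGHT * progress
```

For example, `progress_reward((0, 0, 0), (1, 0, 0), (5, 0, 0), False)` returns 1.0, because the drone moved one unit closer to the gate. Rewarding progress rather than only gate passages gives the learner a dense signal at every step, which tends to make trial-and-error training far less sparse.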

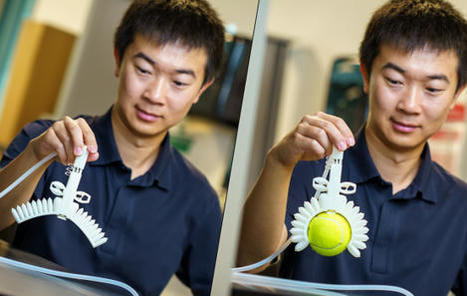

Inspired by the effortless way humans handle objects without seeing them, a team led by engineers at the University of California San Diego has developed a new approach that enables a robotic hand to rotate objects solely through touch, without relying on vision. Using their technique, the researchers built a robotic hand that can smoothly rotate a wide array of objects, including small toys, cans, and even fruits and vegetables, without bruising or squishing them. The robotic hand accomplished these tasks using only information based on touch. This work could aid the development of robots that can manipulate objects in the dark. The team recently presented their work at the 2023 Robotics: Science and Systems Conference. To build their system, the researchers attached 16 touch sensors to the palm and fingers of a four-fingered robotic hand. Each sensor costs about $12 and serves a simple function: detect whether an object is touching it or not. What makes this approach unique is that it relies on many low-cost, low-resolution touch sensors that use simple, binary signals (touch or no touch) to perform robotic in-hand rotation. These sensors are spread over a large area of the robotic hand. This contrasts with a variety of other approaches that rely on a few high-cost, high-resolution touch sensors affixed to a small area of the robotic hand, primarily at the fingertips. There are several problems with these approaches, explained Xiaolong Wang, a professor of electrical and computer engineering at UC San Diego, who led the current study. First, having only a small number of sensors on the robotic hand reduces the chance that they will come in contact with the object, which limits the system's sensing ability. Second, high-resolution touch sensors that provide information about texture are extremely difficult to simulate, not to mention extremely expensive. That makes it more challenging to use them in real-world experiments.
Lastly, a lot of these approaches still rely on vision. "Here, we use a very simple solution," said Wang. "We show that we don't need details about an object's texture to do this task. We just need simple binary signals of whether the sensors have touched the object or not, and these are much easier to simulate and transfer to the real world."
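
To make the "simple binary signals" idea concrete, the sketch below reduces 16 sensor readings to touch/no-touch bits. The normalized readings and the 0.5 threshold are assumptions for illustration; the article does not say how the hardware produces its binary signal or how the control policy consumes it, and the function names are hypothetical.

```python
from typing import List, Sequence

NUM_SENSORS = 16          # matches the 16 sensors described in the article
CONTACT_THRESHOLD = 0.5   # hypothetical threshold on a normalized reading


def binarize(readings: Sequence[float], threshold: float = CONTACT_THRESHOLD) -> List[int]:
    """Map each raw sensor reading to 1 (touch) or 0 (no touch)."""
    if len(readings) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} readings, got {len(readings)}")
    return [1 if r >= threshold else 0 for r in readings]


def contact_count(contacts: Sequence[int]) -> int:
    """Number of sensors currently touching the object."""
    return sum(contacts)
```

A 16-element 0/1 vector like this is also trivial to reproduce in a physics simulator (any contact on a sensor's patch sets its bit), which is the simulation-to-reality advantage Wang describes.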

Humanoid robots built around cutting-edge AI brains promise shocking, disruptive change to labor markets and the wider global economy – and near-unlimited investor returns to whoever gets them right at scale. Big money is now flowing into the sector. The jarring emergence of ChatGPT has made it clear: AIs are advancing at a wild and accelerating pace, and they're beginning to transform industries based around desk jobs that typically marshal human intelligence. They'll begin taking over portions of many white-collar jobs in the coming years, leading initially to huge increases in productivity, and eventually, many believe, to huge increases in unemployment. If you're coming out of school right now and looking to be useful, blue-collar work involving actual physical labor might be a better bet than anything that'd put you behind a desk. It's starting to look like a general-purpose humanoid robot worker might be closer than anyone thinks, imbued with light-speed, swarm-based learning capabilities to go along with GPT-version-X communication abilities, a whole internet's worth of knowledge, and whatever physical attributes you need for a given job. Such humanoids will begin as dumbass job-site apprentices with zero common sense, but they'll learn – at a frightening pace, if the last few months in AI have been any kind of indication. They'll be available 24/7, power sources permitting, gradually expanding their capabilities and overcoming their limitations until they begin displacing humans. They could potentially crash the cost of labor, leading to enormous gains in productivity – and a fundamental upheaval of the blue-collar labor market at a size and scale limited mainly by manufacturing, materials, and what kinds of jobs they're capable of taking over. The best-known humanoid to date has been Atlas, by Boston Dynamics. Atlas has shown impressive progress over the last 10 years.
But Atlas is a research platform, and Boston Dynamics founder Marc Raibert has been clear that the company is in no rush to take humanoids to the mass market. Hanson Robotics and Engineered Arts, among others, have focused on human/robot interaction, concentrating on making humanoids like Sophia and Ameca, with lifelike and expressive faces. Ameca in particular communicates using the GPT language model. Others are absolutely focused on getting these things into mass usage as quickly as possible. Elon Musk announced Tesla's entry into humanoid robotics in 2021, and the company appears to have made pretty decent progress on the Optimus prototypes for its Tesla Bot in the intervening months. "It's probably the least understood or appreciated part of what we're doing at Tesla," he told investors last month. "But it will probably be worth significantly more than the car side of things long-term." Just this year, entrepreneur Brett Adcock, founder of the Vettery "online talent marketplace" and, more recently, of Archer Aviation, one of the leading contenders in the emerging electric VTOL aircraft movement, announced that his latest venture is focused on humanoid robots. The Musk-like "master plan" for the new company, Figure, aims first to "build a feature-complete electromechanical humanoid," then to "perform human-like manipulation," then to "integrate humanoids into the labor force."

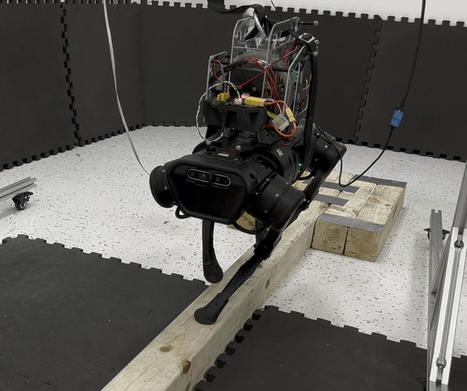

Researchers in Carnegie Mellon University's Robotics Institute (RI) have designed a system that makes an off-the-shelf quadruped robot nimble enough to walk a narrow balance beam, a feat that is likely the first of its kind. "This experiment was huge," said Zachary Manchester, an assistant professor in the RI and head of the Robotic Exploration Lab. "I don't think anyone has ever successfully done balance beam walking with a robot before." By leveraging hardware often used to control satellites in space, Manchester and his team offset existing constraints in the quadruped's design to improve its balancing capabilities. The standard elements of most modern quadruped robots include a torso and four legs that each end in a rounded foot, allowing the robot to traverse basic, flat surfaces and even climb stairs. Their design resembles a four-legged animal, but unlike cheetahs, which can use their tails to control sharp turns, or falling cats, which adjust their orientation in mid-air with the help of their flexible spines, quadruped robots do not have such instinctive agility. As long as three of the robot's feet remain in contact with the ground, it can avoid tipping over. But if only one or two feet are on the ground, the robot can't easily correct for disturbances and has a much higher risk of falling. This lack of balance makes walking over rough terrain particularly difficult. "With current control methods, a quadruped robot's body and legs are decoupled and don't speak to one another to coordinate their movements," Manchester said. "So how can we improve their balance?" The team's solution employs a reaction wheel actuator (RWA) system that mounts to the back of a quadruped robot. With the help of a novel control technique, the RWA allows the robot to balance independently of the positions of its feet. RWAs are widely used in the aerospace industry to perform attitude control on satellites by manipulating the angular momentum of the spacecraft.
"You basically have a big flywheel with a motor attached," said Manchester, who worked on the project with RI graduate student Chi-Yen Lee and mechanical engineering graduate students Shuo Yang and Benjamin Boksor. "If you spin the heavy flywheel one way, it makes the satellite spin the other way. Now take that and put it on the body of a quadruped robot."
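
Manchester's flywheel description is conservation of angular momentum. The sketch below works it out for a single axis under idealized assumptions: no external torque, rates measured in an inertial frame, and hypothetical inertia values. It ignores the legs, ground contact, and the team's novel controller entirely.

```python
def body_rate_from_wheel(wheel_inertia: float, body_inertia: float, wheel_rate: float,
                         total_momentum: float = 0.0) -> float:
    """Body angular velocity (rad/s) implied by conservation of angular momentum.

    Idealized single-axis model: total_momentum = I_b * w_b + I_w * w_w,
    with both rates in an inertial frame and no external torque.
    Spinning the wheel one way forces the body to rotate the other way.
    """
    if body_inertia <= 0:
        raise ValueError("body_inertia must be positive")
    return (total_momentum - wheel_inertia * wheel_rate) / body_inertia
```

With a hypothetical wheel inertia of 0.01 kg·m², a body inertia of 0.5 kg·m², and the system starting at rest, spinning the wheel up to 100 rad/s gives `body_rate_from_wheel(0.01, 0.5, 100.0) == -2.0` rad/s: the body turns the opposite way, 50 times more slowly, because its inertia is 50 times larger. A balance controller exploits exactly this by commanding wheel torque to cancel an unwanted body rotation.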

Bumblebees are clumsy fliers. It is estimated that a foraging bee bumps into a flower about once per second, which damages its wings over time. Yet despite having many tiny rips or holes in their wings, bumblebees can still fly. Aerial robots, on the other hand, are not so resilient. Poke holes in the robot's wing motors or chop off part of its propeller, and odds are pretty good it will be grounded. Inspired by the hardiness of bumblebees, MIT researchers have developed repair techniques that enable a bug-sized aerial robot to sustain severe damage to the actuators, or artificial muscles, that power its wings and still fly effectively. They optimized these artificial muscles so the robot can better isolate defects and overcome minor damage, like tiny holes in the actuator. In addition, they demonstrated a novel laser repair method that can help the robot recover from severe damage, such as a fire that scorches the device. Using their techniques, a damaged robot could maintain flight-level performance after one of its artificial muscles was jabbed by 10 needles, and the actuator was still able to operate after a large hole was burnt into it. Their repair methods enabled a robot to keep flying even after the researchers cut off 20 percent of its wing tip. This could make swarms of tiny robots better able to perform tasks in tough environments, like conducting a search mission through a collapsing building or dense forest. "We spent a lot of time understanding the dynamics of soft, artificial muscles and, through both a new fabrication method and a new understanding, we can show a level of resilience to damage that is comparable to insects," says Kevin Chen, the D. Reid Weedon, Jr. Assistant Professor in the Department of Electrical Engineering and Computer Science (EECS), the head of the Soft and Micro Robotics Laboratory in the Research Laboratory of Electronics (RLE), and the senior author of the paper on these latest advances. "We're very excited about this.
But the insects are still superior to us, in the sense that they can lose up to 40 percent of their wing and still fly. We still have some catch-up work to do.”

Researchers combined optical sensors with a composite material to create a soft robot that can detect when and where it was damaged, and then heal itself on the spot. "Our lab is always trying to make robots more enduring and agile, so they operate longer with more capabilities," said Rob Shepherd, associate professor of mechanical and aerospace engineering. "If you make robots operate for a long time, they're going to accumulate damage. And so how can we allow them to repair or deal with that damage?" Shepherd's Organic Robotics Lab has developed stretchable fiber-optic sensors for use in soft robots and related components, from skin to wearable technology. For self-healing to work, Shepherd says, the key first step is that the robot must be able to identify that there is, in fact, something that needs to be fixed. To do this, researchers have pioneered a technique using fiber-optic sensors coupled with LED lights capable of detecting minute changes on the surface of the robot. These sensors are combined with a polyurethane urea elastomer that incorporates hydrogen bonds, for rapid healing, and disulfide exchanges, for strength. The resulting SHeaLDS (self-healing light guides for dynamic sensing) provides a damage-resistant soft robot that can self-heal from cuts at room temperature without any external intervention. To demonstrate the technology, the researchers installed the SHeaLDS in a soft robot resembling a four-legged starfish and equipped it with feedback control. Researchers then punctured one of its legs six times, after which the robot was able to detect the damage and self-heal each cut in about a minute. The robot could also autonomously adapt its gait based on the damage it sensed. While the material is sturdy, it is not indestructible. "They have similar properties to human flesh," Shepherd said. "You don't heal well from burning, or from things with acid or heat, because that will change the chemical properties.
But we can do a good job of healing from cuts." Shepherd plans to integrate SHeaLDS with machine learning algorithms capable of recognizing tactile events to eventually create "a very enduring robot that has a self-healing skin but uses the same skin to feel its environment to be able to do more tasks."
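
Detecting damage through light guides comes down to noticing that less light arrives than usual. The sketch below assumes the simplest version of that: compute each guide's optical loss in decibels and flag any guide whose loss has risen above a healthy baseline by more than a threshold. The one-guide-per-leg layout, the 1 dB threshold, and the function names are hypothetical; the article does not describe the lab's actual signal processing.

```python
import math

LOSS_THRESHOLD_DB = 1.0  # hypothetical: flag damage above 1 dB of extra loss


def loss_db(power_in: float, power_out: float) -> float:
    """Optical attenuation in decibels: 10 * log10(P_in / P_out)."""
    if power_in <= 0 or power_out <= 0:
        raise ValueError("optical powers must be positive")
    return 10.0 * math.log10(power_in / power_out)


def damaged_segments(baseline_db, current_db, threshold=LOSS_THRESHOLD_DB):
    """Indices of light guides whose loss rose by more than `threshold` dB."""
    return [i for i, (b, c) in enumerate(zip(baseline_db, current_db)) if c - b > threshold]
```

Halving the transmitted light corresponds to `loss_db(1.0, 0.5)`, about 3.01 dB. With hypothetical baselines of 0.5 dB on four legs and current readings of `[0.6, 2.0, 0.5, 1.6]`, `damaged_segments` returns `[1, 3]`; as a cut heals and light transmission recovers, the extra loss falls back under the threshold and the flag clears.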

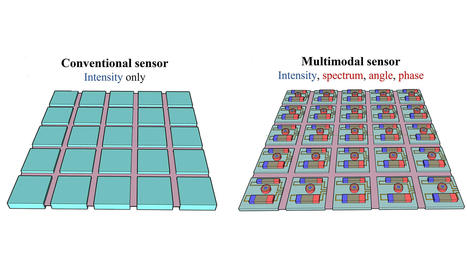

Image sensors measure light intensity, but angle, spectrum, and other aspects of light must also be extracted to significantly advance machine vision. In Applied Physics Letters, published by AIP Publishing, researchers at the University of Wisconsin-Madison, Washington University in St. Louis, and OmniVision Technologies highlight the latest nano-structured components integrated on image sensor chips that are most likely to make the biggest impact in multimodal imaging. The developments could enable autonomous vehicles to see around corners instead of only in a straight line, biomedical imaging to detect abnormalities at different tissue depths, and telescopes to see through interstellar dust. "Image sensors will gradually undergo a transition to become the ideal artificial eyes of machines," co-author Yurui Qu, from the University of Wisconsin-Madison, said. "An evolution leveraging the remarkable achievement of existing imaging sensors is likely to generate more immediate impacts." Image sensors, which convert light into electrical signals, are composed of millions of pixels on a single chip. The challenge is how to combine and miniaturize multifunctional components as part of the sensor. In their own work, the researchers detailed a promising approach to detect multiple-band spectra by fabricating an on-chip spectrometer. They deposited photonic crystal filters made of silicon directly on top of the pixels to create complex interactions between incident light and the sensor. The pixels beneath the films record the distribution of light energy, from which light spectral information can be inferred. The device, less than a hundredth of a square inch in size, is programmable to meet various dynamic ranges, resolution levels, and almost any spectral regime from visible to infrared. The researchers also built a component that detects angular information to measure depth and construct 3D shapes at subcellular scales.
Their work was inspired by directional hearing sensors found in animals, like geckos, whose heads are too small to determine where sound is coming from in the same way humans and other animals can. Instead, they use coupled eardrums to measure the direction of sound within a size that is orders of magnitude smaller than the corresponding acoustic wavelength. Similarly, pairs of silicon nanowires were constructed as resonators to support optical resonance. The optical energy stored in the two resonators is sensitive to the incident angle: the wire closer to the light produces the stronger current. By comparing the stronger and weaker currents from the two wires, the angle of the incoming light waves can be determined. Millions of these nanowires can be placed on a 1-square-millimeter chip. The research could support advances in lensless cameras, augmented reality, and robotic vision.
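
For the on-chip spectrometer, the pixels record how much light gets through each photonic-crystal filter, and the spectrum has to be inferred from those readings. One common way to frame that inference, assumed here rather than taken from the paper, is a linear model y = T·s, where T holds each filter's calibrated response at each wavelength, solved by least squares. The random matrix stands in for measured filter responses, the sizes are hypothetical, and real devices typically need noise handling and regularization.

```python
import numpy as np


def reconstruct_spectrum(transmission: np.ndarray, pixel_signals: np.ndarray) -> np.ndarray:
    """Least-squares estimate of the spectrum s from pixel signals y = T @ s.

    transmission: (num_filters, num_wavelengths) calibrated filter responses T.
    pixel_signals: (num_filters,) readings from the pixels under each filter.
    """
    spectrum, *_ = np.linalg.lstsq(transmission, pixel_signals, rcond=None)
    return spectrum


rng = np.random.default_rng(0)
num_filters, num_wavelengths = 32, 16  # hypothetical sizes
# Stand-in for calibrated filter responses (assumption: uniform random values).
T = rng.uniform(0.0, 1.0, size=(num_filters, num_wavelengths))
# A synthetic spectrum with a single Gaussian peak.
true_spectrum = np.exp(-0.5 * ((np.arange(num_wavelengths) - 8) / 2.0) ** 2)
y = T @ true_spectrum  # noise-free simulated pixel readings
estimate = reconstruct_spectrum(T, y)
```

Because this toy example has more filters than wavelength bins and no noise, the estimate matches the synthetic peak almost exactly; with fewer filters than wavelengths, the system is underdetermined and prior knowledge about the spectrum would have to fill the gap.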

The skin is water-resistant, has self-healing properties, and can move and stretch with the movement of the robotic body parts. Scientists may have moved us one step closer to creating truly life-like robots, after successfully covering a robotic finger with living skin that can heal when damaged. In a process that sounds like something out of a sci-fi movie, the robotic parts are submerged in a vat of jelly, and come out with living skin tissue covering them. The skin allows the finger to move and bend like a human finger, and cuts can even be healed by applying a sheet of gel. It also provides a realism that current silicone skin made for robots cannot achieve, such as subtle textures like wrinkles, and skin-specific functions like moisture retention. The scientists behind the work say it is "the first step of the proof of concept that something could be covered by skin", although it may still be some time before an entire humanoid is successfully covered. Previous attempts at covering robots with living skin sheets have had only limited success due to the difficulty of fitting them to dynamic objects with uneven surfaces. The team at the University of Tokyo in Japan may have solved this problem, using a novel method to cover the robotic finger with skin. The finger is submerged in a cylinder filled with the jelly: a solution of collagen and human dermal fibroblasts, the two main components that make up the skin's connective tissues.

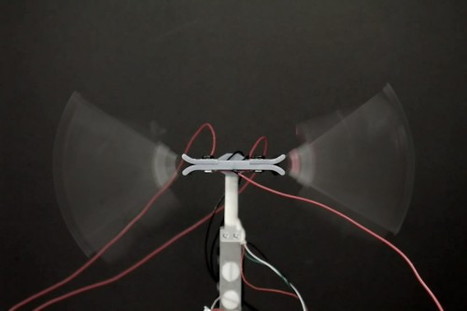

A new drive system for flapping wing autonomous robots has been developed by a University of Bristol team, using a new method of electromechanical zipping that does away with the need for conventional motors and gears. This new advance, published today in the journal Science Robotics, could pave the way for smaller, lighter and more effective micro flying robots for environmental monitoring, search and rescue, and deployment in hazardous environments. Until now, typical micro flying robots have used motors, gears and other complex transmission systems to achieve the up-and-down motion of the wings. This has added complexity, weight and undesired dynamic effects. Taking inspiration from bees and other flying insects, researchers from Bristol's Faculty of Engineering, led by Professor of Robotics Jonathan Rossiter, have successfully demonstrated a direct-drive artificial muscle system, called the Liquid-amplified Zipping Actuator (LAZA), that achieves wing motion using no rotating parts or gears. The LAZA system greatly simplifies the flapping mechanism, enabling future miniaturization of flapping robots down to the size of insects. In the new paper, the team show how a pair of LAZA-powered flapping wings can provide more power than insect muscle of the same weight, enough to fly a robot across a room at 18 body lengths per second. They also demonstrated how the LAZA can deliver consistent flapping over more than one million cycles, important for making flapping robots that can undertake long-haul flights. The team expect the LAZA to be adopted as a fundamental building block for a range of autonomous insect-like flying robots. Dr Tim Helps, lead author and developer of the LAZA system, said "With the LAZA, we apply electrostatic forces directly on the wing, rather than through a complex, inefficient transmission system.
This leads to better performance, simpler design, and will unlock a new class of low-cost, lightweight flapping micro-air vehicles for future applications, like autonomous inspection of off-shore wind turbines."
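
Zipping actuators rely on electrostatic attraction, which grows sharply as the electrode gap closes. The sketch below uses the textbook parallel-plate approximation, P = ½·ε₀·εᵣ·(V/d)², which is an assumption and not the LAZA model from the paper. In this idealized picture, the pull rises with the inverse square of the gap, which is why the electrodes "zip" shut once contact starts, and a liquid with higher relative permittivity εᵣ in the gap strengthens it proportionally. All values are hypothetical.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def electrostatic_pressure(voltage: float, gap: float, relative_permittivity: float = 1.0) -> float:
    """Idealized parallel-plate electrostatic pressure in Pa.

    P = 0.5 * eps0 * eps_r * (V / d)**2, assuming a uniform field across a
    gap of width d completely filled with a dielectric of permittivity eps_r.
    """
    if gap <= 0:
        raise ValueError("gap must be positive")
    field = voltage / gap
    return 0.5 * EPSILON_0 * relative_permittivity * field ** 2
```

For example, 100 V across a 10 µm air gap gives about 443 Pa in this model; halving the gap quadruples the pressure, and doubling εᵣ doubles it.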

Researchers at Columbia Univ. built a 3D printable, synthetic soft muscle that can mimic nature's biology, lifting 1000 times its own weight. The artificial muscle is 3 times stronger than natural muscle and can push, pull, bend, twist, and lift weighty objects. The breakthrough enables a new generation of completely soft robots. Today's mechanisms that move robots, called actuators, are bulky pneumatic (gas pressure) or hydraulic (fluid pressure) inflation systems made of elastomer skins that expand when air or liquid is pushed into them. But those require external compressors + pressure regulating equipment. The team is led by award-winning roboticist Hod Lipson PhD, from the Creative Machines Lab at Columbia Univ. We've made great strides in making robot software, but robot bodies are still primitive. This is a big piece of the puzzle. Just like biology, the new actuator can be shaped + re-shaped thousands of ways. We've overcome one of the final barriers to making life-like robots.

Ultrasound measurements of muscle dynamics provide customized, activity-specific assistance.

People rarely walk at a constant speed and a single incline. We change speed when rushing to the next appointment, catching a crosswalk signal, or going for a casual stroll in the park. Slopes change all the time too, whether we're going for a hike or up a ramp into a building. In addition to environmental variability, how we walk is influenced by sex, height, age, and muscle strength, and sometimes by neural or muscular disorders such as stroke or Parkinson's disease. This human and task variability is a major challenge in designing wearable robotics to assist or augment walking in real-world conditions. To date, customizing wearable robotic assistance to an individual's walking requires hours of manual or automatic tuning, a tedious task for healthy individuals and often impossible for older adults or clinical patients. Now, researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a new approach in which robotic exosuit assistance can be calibrated to an individual and adapt to a variety of real-world walking tasks in a matter of seconds. The bioinspired system uses ultrasound measurements of muscle dynamics to develop a personalized and activity-specific assistance profile for users of the exosuit. "Our muscle-based approach enables relatively rapid generation of individualized assistance profiles that provide real benefit to the person walking," said Robert D. Howe, the Abbott and James Lawrence Professor of Engineering, and co-author of the paper. The research is published in Science Robotics. Previous bioinspired attempts at developing individualized assistance profiles for robotic exosuits focused on the dynamic movements of the limbs of the wearer. The SEAS researchers took a different approach.
The research was a collaboration between Howe's Harvard Biorobotics Laboratory, which has extensive experience in ultrasound imaging and real-time image processing, and the Harvard Biodesign Lab, run by Conor J. Walsh, the Paul A. Maeder Professor of Engineering and Applied Sciences at SEAS, which develops soft wearable robots for augmenting and restoring human performance. "We used ultrasound to look under the skin and directly measured what the user's muscles were doing during several walking tasks," said Richard Nuckols, a Postdoctoral Research Associate at SEAS and co-first author of the paper. "Our muscles and tendons have compliance, which means there is not necessarily a direct mapping between the movement of the limbs and that of the underlying muscles driving their motion."

Computers can now beat the best human minds in the most intellectually challenging games — like chess. They can also perform tasks that are difficult for adult humans to learn — like driving cars. Yet autonomous machines have difficulty learning to cooperate, something even young children do.

Human cooperation appears easy — but it’s very difficult to emulate because it relies on cultural norms, deeply rooted instincts, and social mechanisms that express disapproval of non-cooperative behavior.

Such common sense mechanisms aren’t easily built into machines. In fact, the same AI software programs that effectively play the board games of chess + checkers, Atari video games, and the card game of poker — often fail to consistently cooperate when cooperation is necessary.

Other AI programs often take 100s of rounds of experience to learn to cooperate with each other, if they cooperate at all. Can we build computers that cooperate with humans the way humans cooperate with each other? Building on decades of research in AI, we built a new software program that learns to cooperate with other machines simply by trying to maximize its own pay-off.

We ran experiments that paired the AI with people in various social scenarios — including a “prisoner’s dilemma” challenge and a sophisticated block-sharing game. While the program consistently learns to cooperate with another computer — it doesn’t cooperate very well with people. But people didn’t cooperate much with each other either.
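
The prisoner's dilemma shows why cooperation is hard to learn: in any single round, each player does better by defecting, yet mutual cooperation beats mutual defection over repeated play. The sketch below plays the repeated game with tit-for-tat, a classic textbook strategy, purely to illustrate the setup. It is not the researchers' algorithm, and the payoff values are standard textbook assumptions.

```python
# Row player's payoff for (my_move, their_move); "C" = cooperate, "D" = defect.
# Textbook values (temptation 5, reward 3, punishment 1, sucker 0), assumed here.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not history else history[-1][1]


def always_defect(history):
    """Defect every round regardless of history."""
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Return total payoffs (a, b) for an iterated prisoner's dilemma."""
    history_a, history_b = [], []  # each entry: (own move, opponent's move)
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_a)
        move_b = strategy_b(history_b)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        history_a.append((move_a, move_b))
        history_b.append((move_b, move_a))
    return score_a, score_b
```

Over 10 rounds, two tit-for-tat players score 30 each, two always-defect players score only 10 each, and tit-for-tat loses just the first round to a defector (9 versus 14). Sustained cooperation pays, but only if both sides stick with it.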

As we all know, humans can cooperate better if they can communicate their intentions through words + body language. So, in hopes of creating a program that consistently learns to cooperate with people, we gave our AI a way to listen to people and to talk to them.

We did that in a way that lets the AI play in previously unanticipated scenarios. The resulting algorithm achieved our goal. It consistently learns to cooperate with people as well as people do. Our results show that 2 computers make a much better team — better than 2 humans, and better than a human + a computer.

But the program isn’t a blind cooperator. In fact, the AI can get pretty angry if people don’t behave well. The historic computer scientist Alan Turing PhD believed machines could potentially demonstrate human-like intelligence. Since then, AI has been regularly portrayed as a threat to humanity or human jobs.

To protect people, programmers have tried to code AI to follow legal + ethical principles — like the 3 Laws of Robotics written by Isaac Asimov PhD. Our research demonstrates that a new path is possible.

Machines designed to selfishly maximize their pay-offs can — and should — make an autonomous choice to cooperate with humans across a wide range of situations. 2 humans — if they were honest with each other + loyal — would have done as well as 2 machines. About half of the humans lied at some point. So the AI is learning that moral characteristics are better — since it’s programmed to not lie — and it also learns to maintain cooperation once it emerges.

The goal is to understand the math behind cooperating with people: what attributes does AI need to develop social skills? AI must be able to respond to us and articulate what it's doing. It must interact with other people. This research could help humans with their relationships. In society, relationships break down all the time. People who were friends for years all of a sudden become enemies. Because the AI is often better at reaching these compromises than we are, it could teach us how to get along better.

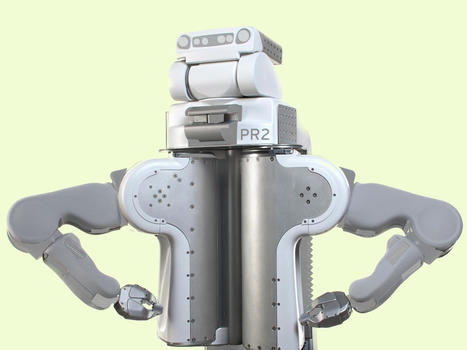

University of California researchers have developed new AI software that enables robots to learn physical skills, called motor tasks, by trial + error. The robot uses a step-by-step process similar to the way humans learn. The lab made a demo of their technique, called reinforcement learning. In the test, the robot completes a variety of physical tasks without any pre-programmed details about its surroundings. Some of the robot's successful test tasks:

- putting a clothes hanger on a pole

- stacking wood donuts on a pole

- assembling a toy airplane

- screwing the cap on a water bottle

- inserting a shaped peg into its matching hole

Robots learn motor tasks autonomously with this AI. The lead researcher Pieter Abbeel PhD said: "What we are showing is a new approach to enable a robot to learn. The key is that when a robot is faced with something new, we won't have to re-program it. The same AI software enables the robot to learn all the different tasks we gave it." Team member Trevor Darrell PhD added: "Most robotic applications happen in controlled environments, where physical objects are in predictable positions in the surroundings. The challenge of putting robots into real-life settings, like homes, offices, or transported to new or unknown facilities, is that those environments are constantly changing. The robot must be able to sense + adapt to its surroundings." The research is part of the People + Robots Initiative at the Univ. of California's Center for Information Technology Research in the Interest of Society (CITRIS). The center develops info-tech solutions for worldwide benefit. It advances AI, robotics, and automation.
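
The core loop of reinforcement learning (try an action, observe a reward, update an estimate) can be shown in its simplest tabular form. The sketch below is generic textbook Q-learning on a hypothetical 6-state corridor where the only reward comes from reaching the far end; it is not the Berkeley team's method, which the article does not detail, and all parameter values are arbitrary assumptions.

```python
import random


def q_learning_corridor(num_states=6, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a 1D corridor: start at state 0, reward 1 for reaching the last state.

    Actions: 0 = step left (blocked at state 0), 1 = step right. Returns the learned Q-table.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(num_states)]
    goal = num_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy trial and error: usually exploit, sometimes explore; break ties randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            future = 0.0 if next_state == goal else max(q[next_state])  # goal is terminal
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action per state (1 = right) after learning."""
    return [0 if left > right else 1 for left, right in q]
```

After training, `greedy_policy(q_learning_corridor())` moves right from every non-goal state: nobody programmed "go right", the agent discovered it from reward alone. Real robot learning replaces the small table with a neural network over sensor inputs, but the update rule follows the same trial-and-error logic.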


Introducing Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalized instructions for robotic control, while retaining web-scale capabilities. This work builds upon Robotic Transformer 1 (RT-1), a model trained on multi-task demonstrations which can learn combinations of tasks and objects seen in the robotic data. RT-2 shows improved generalisation capabilities and semantic and visual understanding, beyond the robotic data it was exposed to. This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.
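
A vision-language-action model has to emit robot commands in the same token vocabulary it uses for text. The RT-2 authors describe doing this by discretizing each continuous action dimension into 256 bins and writing the bin indices as integer tokens. The sketch below illustrates that idea under assumptions: a uniform [-1, 1] range per dimension, bin-center decoding, and function names that are hypothetical and not part of any released RT-2 code.

```python
from typing import List, Sequence

NUM_BINS = 256         # bins per action dimension
LOW, HIGH = -1.0, 1.0  # hypothetical normalized action range


def action_to_tokens(action: Sequence[float], low: float = LOW, high: float = HIGH,
                     num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous action dimension to an integer bin in [0, num_bins - 1]."""
    width = (high - low) / num_bins
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)
        tokens.append(min(int((clipped - low) / width), num_bins - 1))
    return tokens


def tokens_to_action(tokens: Sequence[int], low: float = LOW, high: float = HIGH,
                     num_bins: int = NUM_BINS) -> List[float]:
    """Decode bin indices back to continuous values at each bin's center."""
    width = (high - low) / num_bins
    return [low + (t + 0.5) * width for t in tokens]


def tokens_to_text(tokens: Sequence[int]) -> str:
    """Render tokens as a space-separated string, the form a language model would output."""
    return " ".join(str(t) for t in tokens)
```

For example, `action_to_tokens([0.0])` gives `[128]`, and decoding any token recovers the original value to within half a bin width (about 0.004 in this range). That small quantization error is the price of letting one model treat "pick up the apple" and the motor command that does it as the same kind of output.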

Figure 01 is a bipedal, AI-powered humanoid. And it wants to work in a warehouse. Key to Figure's robotics approach is its reliance on artificial intelligence to enable its robots to learn and improve their abilities. As movements and performance evolve, the robots can progress from basic lifting and carrying tasks to more advanced functions. That work will start out in the warehouse setting, doing the kind of heavy lifting that people don't particularly enjoy. "Our business plan is to get to revenue as fast as we can. And honestly that means doing things that are technically easier," says Figure founder Brett Adcock. Single-purpose robots are already common in warehouses, like the roving robots that carry boxes to shelves in Amazon fulfillment centers, and a robot created for DHL Supply Chain that unloads boxes from trucks at warehouse loading bays. Human-like robots could be a more versatile solution for the warehouse, Adcock says. Instead of relying on one robot to unload a truck and another to haul boxes around, Figure's plan is to create a robot that can do almost anything a human worker otherwise would. In some ways, it's a narrower approach than those of the many other companies that have sought to build humanoid robots. Honda's Asimo was intended to help a range of users, from people with limited mobility to older folks needing full-time care. NASA has invested in humanoid "robonauts" that are capable of assisting with missions in space. Boston Dynamics has pushed the limits of robotics by creating humanoids that can jump and flip. "Existing humanoids today have just been stunts and demos," Adcock says. "We want to get away from that and show that they can be really useful." Figure has produced five prototypes of its humanoid. They're designed to have 25 degrees of freedom, including the ability to bend over fully at the waist and lift a box from the ground up to a high shelf. Hands will add even more flexibility and utility. That is the plan, at least.
Right now, the prototype robots are mainly just walking. Adcock expects to conduct extensive testing and refinement in the coming months, getting the robots to the point where they can handle most general warehouse applications by the end of the year. A pilot of 50 robots working in a real warehouse setting is being eyed for 2024. “Hardware companies take time,” he says. “This will take 20 or 30 years for us to really build out.” With a reflective, featureless face mask that may remind some viewers of a character from G.I. Joe, Figure 01 is a sleeker vision of the humanoid robots people may now be familiar with. Atlas, the walking, jumping, and parkour-enabled robot from the research wing of Boston Dynamics, for example, has prioritized mechanical skills over aesthetics. Figure’s robot bears some similarity to Tesla’s Optimus robot. Announced in 2021 during a presentation featuring a human in a spandex robot suit, Optimus also aspires to a smoother form, but its latest prototype looks more like a science project than science fiction. Adcock says Figure’s robots use advanced electric motors, which enable smoother movement than the hydraulically powered Atlas and give the prototypes a more natural gait while fitting the mechanical systems into a smaller package. “We want to fool like 90% of people in a walking Turing test,” he says. The concept is being built around the notion that the robot can be continually improved over time, learning new skills and eventually expanding into more complex tasks. Being able to do more, Adcock says, makes what could be a very expensive device much more affordable to build and buy. That could someday lead to Figure’s robots working in manufacturing, retail, home care, or even outer space. “I really believe that humanoids will colonize planets,” Adcock says. For now, Figure is targeting the humble warehouse. “If we unveil the humanoid at some big event, it’ll just be doing warehouse work on stage the whole time,” Adcock says. 
“No back flips, none of that crazy parkour stuff. We just want to do real, practical work.”

Extra parts, from a thumb to an arm, could be designed to help boost our capabilities. Whether it is managing childcare, operating on a patient or cooking a Sunday dinner, there are many occasions when an extra pair of arms would come in, well, handy. Now researchers say such human augmentation could be on the horizon, suggesting additional robotic body parts could be designed to boost our capabilities. Tamar Makin, a professor of cognitive neuroscience at the MRC cognition and brain unit at Cambridge University, said the approach could increase productivity. “If you want an extra arm while you’re cooking in the kitchen so you can stir the soup while chopping the vegetables, you might have the option to wear and independently control an extra robotic arm,” she said. The approach has precedent: Dani Clode, a designer and colleague of Makin’s at Cambridge University, has already created a 3D-printed thumb that can be added to any hand. Clode will be discussing the device as part of a panel on “Homo cyberneticus: motor augmentation for the future body” at the annual meeting of the American Association for the Advancement of Science (AAAS) in Washington DC on Friday. Makin said the extra thumb could be helpful for waiters holding plates, or for electrical engineers when soldering, for example, and other robotic body parts could be designed for particular workplace needs. An extra arm, for instance, could help a builder hammer a nail while holding a joist in place. “We spoke with a surgeon [who] was really interested in holding his camera whilst he’s doing shoulder surgery, rather than his assistant holding his camera,” said Clode. “He wanted to be in full control of the tools that he’s using with the two hands whilst also holding that camera and being able to manipulate that as well.” The team say robotic body parts could allow far more control than a simple mounted device, with their operation inspired by our natural mechanisms of agency. 
“We want something that we’d be able to control [very] precisely without us having to articulate what it is exactly that we want,” said Makin. She said the team’s approach was rooted in the idea that the additional appendages could be used to build on the existing capabilities of a person’s body. “If you’re missing a limb, instead of trying to replace that limb, why don’t we augment your intact hand to allow you to do more with it?” she said. But the team envisage such devices also being used by people who are not living with disabilities. Clode said an important feature of human augmentation devices is that they do not take away from the wearer’s original capabilities. “[It’s] a layer on the body that can be used with the least amount of impact as possible for the most amount of gain,” she said. A key aspect of that, Clode added, is that – unlike a spade being used to increase our abilities to dig a hole – such robotic body parts must not be hand-operated.

A new robotic arm could be a game changer in surgery, with the capability to 3D-print biomaterial directly onto organs inside a patient’s body. The arm, designed by a team of engineers from the University of New South Wales in Australia, can place a tiny, flexible 3D bioprinter inside the body, using bio-ink to “print” tissue-like structures onto internal organs. The device, dubbed F3DB, has a swivel head for full flexibility of movement and an array of soft artificial muscles that allow movement in three separate directions. The entire structure can be controlled externally. “Existing 3D-bioprinting techniques require biomaterials to be made outside the body and implanting that into a person would usually require large open-field open surgery which increases infection risks,” said Thanh Do, study lead. “Our flexible 3D bioprinter means biomaterials can be directly delivered into the target tissue or organs with a minimally invasive approach. This system offers the potential for the precise reconstruction of three-dimensional wounds inside the body. Our approach also addresses significant limitations in existing 3D bioprinters such as surface mismatches between 3D-printed biomaterials and target tissues/organs as well as structural damage during manual handling, transferring, and transportation process.” Bioprinting in the medical industry is primarily used for research purposes, such as tissue engineering and drug development, and typically requires large-scale, external 3D printers to create the cellular structures. According to the team, in the next few years the technology could be used to operate on hard-to-reach areas of the body. In the project’s next stage, the F3DB will be tested in animals, and the arm will be further developed to include an integrated camera and scanning system.

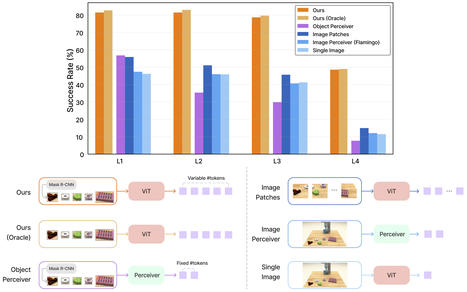

Prompt-based learning has emerged as a successful paradigm in natural language processing, where a single general-purpose language model can be instructed to perform any task specified by input prompts. Yet task specification in robotics comes in various forms, such as imitating one-shot demonstrations, following language instructions, and reaching visual goals. These are often treated as different tasks and tackled by specialized models. This work shows that a wide spectrum of robot manipulation tasks can be expressed with multimodal prompts that interleave textual and visual tokens. The team designed a transformer-based generalist robot agent, VIMA, that processes these prompts and outputs motor actions autoregressively. To train and evaluate VIMA, they developed a new simulation benchmark with thousands of procedurally generated tabletop tasks with multimodal prompts, 600K+ expert trajectories for imitation learning, and a four-level evaluation protocol for systematic generalization. VIMA scaled well in both model capacity and data size. Given the same training data, it outperformed prior state-of-the-art methods in the hardest zero-shot generalization setting by up to 2.9 times in task success rate. With 10 times less training data, VIMA still performed 2.7 times better than the top competing approach.
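To make the idea of a multimodal prompt more concrete, here is a minimal sketch, not VIMA's actual tokenizer or model, of how a prompt that interleaves text and images might be flattened into a single ordered token sequence and then consumed by an autoregressive action loop. The segment classes, the whitespace word tokenizer, the placeholder `embed_image` encoder, and the toy policy are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass
class Text:
    content: str


@dataclass
class Image:
    # Hypothetical: an image is identified by a name; a real system holds pixels.
    name: str


Segment = Union[Text, Image]


@dataclass
class Token:
    kind: str   # "text" or "image"
    value: str


def embed_image(img: Image) -> Token:
    # Placeholder for a learned visual encoder; here it only emits a marker token.
    return Token("image", f"<img:{img.name}>")


def tokenize_prompt(prompt: List[Segment]) -> List[Token]:
    """Flatten an interleaved text/image prompt into one token sequence,
    keeping the segments in their original order."""
    tokens: List[Token] = []
    for seg in prompt:
        if isinstance(seg, Text):
            tokens.extend(Token("text", word) for word in seg.content.split())
        else:
            tokens.append(embed_image(seg))
    return tokens


Policy = Callable[[List[Token], List[int]], int]


def rollout(prompt_tokens: List[Token], policy: Policy, steps: int) -> List[int]:
    """Autoregressive decoding: each action is conditioned on the prompt
    and on every action emitted so far."""
    actions: List[int] = []
    for _ in range(steps):
        actions.append(policy(prompt_tokens, actions))
    return actions


prompt = [Text("Put the"), Image("red_block"), Text("into the"), Image("green_bowl")]
tokens = tokenize_prompt(prompt)
print([t.value for t in tokens])
# ['Put', 'the', '<img:red_block>', 'into', 'the', '<img:green_bowl>']

# Toy policy (hypothetical): the action id is the number of actions already taken.
print(rollout(tokens, lambda p, h: len(h), steps=3))  # [0, 1, 2]
```

The key design point the sketch illustrates is that text and images share one sequence, so a single transformer can attend across both without a separate model per task format.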

In San Francisco, California, Waymo, the self-driving tech company owned by Google parent Alphabet, moved a step closer to launching a fully autonomous commercial ride-hailing service like the one already operated by its chief rival, General Motors-owned Cruise. And in Phoenix, Arizona, Waymo’s driverless service has been made available to members of the general public in the central downtown area. The breakthrough in San Francisco came via the California Department of Motor Vehicles’ approval of an amendment to the company’s current operating permit. Waymo will now be able to charge fees for driverless services in its autonomous vehicles (AVs), such as deliveries. Once it has operated a driverless service on public roads in the city for a total of 30 days, it will be eligible to apply to the California Public Utilities Commission (CPUC) for a permit that would enable it to charge fares for passenger-only autonomous rides in its vehicles. This is the same permit that gave the green light to Cruise’s commercial driverless ride-hail service at the start of June. The CPUC awarded Waymo a drivered deployment permit in February, which allowed the company to charge its ‘trusted testers’ for autonomous rides with a safety operator on board. The trusted tester program comprises vetted members of the public who have applied to use the service and have signed an NDA, which means they will not talk about their experiences publicly. In downtown Phoenix, the extension of the driverless ride-hail service is the latest evidence of the incremental progress Waymo has made in the city. Over the past couple of years, the company has operated a paid rider-only service in some of Phoenix’s eastern suburbs, such as Gilbert, Mesa, Chandler and Tempe. Earlier this year it moved into the busier, more central downtown area, where driverless rides were made available for trusted testers. 
It also trialled an autonomous service for employees at Phoenix Sky Harbor International Airport, albeit with a safety operator on board. In early November, it was confirmed that airport rides would be offered to trusted testers, although again Waymo made clear that there would be a specialist in the driver’s seat, initially at least.

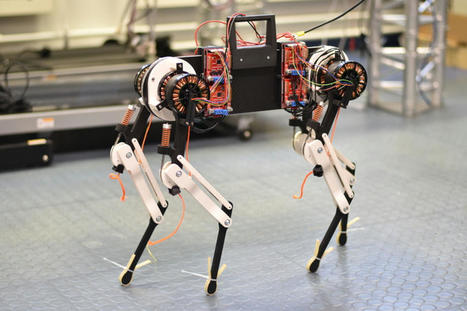

A newborn giraffe or foal must learn to walk on its legs as fast as possible to avoid predators. Animals are born with muscle coordination networks located in their spinal cord. However, learning the precise coordination of leg muscles and tendons takes some time. Initially, baby animals rely heavily on hard-wired spinal cord reflexes. While somewhat basic, these motor control reflexes help the animal avoid falling and hurting itself during its first walking attempts. The more advanced and precise muscle control that follows must be practiced until the nervous system is well adapted to the young animal's leg muscles and tendons. No more uncontrolled stumbling: the young animal can now keep up with the adults. Researchers at the Max Planck Institute for Intelligent Systems (MPI-IS) in Stuttgart conducted a research study to find out how animals learn to walk and learn from stumbling. They built a four-legged, dog-sized robot that helped them figure out the details. "As engineers and roboticists, we sought the answer by building a robot that features reflexes just like an animal and learns from mistakes," says Felix Ruppert, a former doctoral student in the Dynamic Locomotion research group at MPI-IS. "If an animal stumbles, is that a mistake? Not if it happens once. But if it stumbles frequently, it gives us a measure of how well the robot walks." Felix Ruppert is first author of "Learning Plastic Matching of Robot Dynamics in Closed-loop Central Pattern Generators," which will be published July 18, 2022 in the journal Nature Machine Intelligence.

Learning algorithm optimizes virtual spinal cord

After learning to walk in just one hour, Ruppert's robot makes good use of its complex leg mechanics. A Bayesian optimization algorithm guides the learning: the measured foot sensor information is matched with target data from the modeled virtual spinal cord running as a program in the robot's computer. 
The robot learns to walk by continuously comparing sent and expected sensor information, running reflex loops, and adapting its motor control patterns. The learning algorithm adapts the control parameters of a Central Pattern Generator (CPG). In humans and animals, central pattern generators are networks of neurons in the spinal cord that produce periodic muscle contractions without input from the brain. Central pattern generator networks aid the generation of rhythmic tasks such as walking, blinking or digestion. Reflexes, in turn, are involuntary motor control actions triggered by hard-coded neural pathways that connect sensors in the leg with the spinal cord. As long as the young animal walks over a perfectly flat surface, CPGs can be sufficient to control the movement signals from the spinal cord. A small bump on the ground, however, changes the walk. Reflexes kick in and adjust the movement patterns to keep the animal from falling. These momentary changes in the movement signals are reversible, or 'elastic', and the movement patterns return to their original configuration after the disturbance. But if the animal keeps stumbling over many cycles of movement, despite active reflexes, then the movement patterns must be relearned and made 'plastic', i.e., irreversible. In the newborn animal, CPGs are initially not yet well tuned, and the animal stumbles around on both even and uneven terrain. But the animal rapidly learns how its CPGs and reflexes control leg muscles and tendons. The same holds true for the Labrador-sized robot-dog named "Morti." What's more, the robot optimizes its movement patterns faster than an animal does, in about one hour. Morti's CPG is simulated on a small and lightweight computer that controls the motion of the robot's legs. This virtual spinal cord is placed on the quadruped robot's back where the head would be. 
During the hour it takes for the robot to walk smoothly, sensor data from the robot's feet are continuously compared with the expected touch-down predicted by the robot's CPG. If the robot stumbles, the learning algorithm changes how far the legs swing back and forth, how fast the legs swing, and how long a leg is on the ground. The adjusted motion also affects how well the robot can utilize its compliant leg mechanics.
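The compare-and-adapt loop described above can be illustrated with a minimal sketch of a single-leg CPG. Its three parameters mirror the quantities the learner adjusts: how fast the leg swings (frequency), how far it swings (amplitude), and how long the foot stays on the ground (a duty factor). The published work tunes the CPG with Bayesian optimization; the threshold-and-patience update rule below is a hypothetical stand-in chosen only to show the difference between an isolated stumble (absorbed elastically by a reflex, not modeled here) and a persistent mismatch (which triggers a plastic, lasting change). All names and numbers are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class CPGParams:
    frequency: float    # Hz: how fast the leg swings
    amplitude: float    # rad: how far the leg swings back and forth
    duty_factor: float  # fraction of each cycle the foot is on the ground


def hip_angle(p: CPGParams, t: float) -> float:
    """Rhythmic hip command from a simple sinusoidal oscillator."""
    return p.amplitude * math.sin(2 * math.pi * p.frequency * t)


def predicted_touchdown(p: CPGParams, cycle: int) -> float:
    """Time the CPG expects the foot to touch down in a given cycle.
    Assumption: each cycle starts with swing, followed by stance."""
    period = 1.0 / p.frequency
    return cycle * period + (1.0 - p.duty_factor) * period


def adapt(p: CPGParams, measured: List[float], tolerance: float = 0.02,
          patience: int = 3, rate: float = 0.5) -> CPGParams:
    """Compare measured with predicted touch-downs, cycle by cycle.

    An isolated timing error is treated as a stumble that a reflex absorbs
    (an 'elastic' change, not modeled here, leaving the CPG untouched).
    Only errors persisting for `patience` consecutive cycles trigger a
    'plastic' change: the swing duration is shifted toward the measurement.
    """
    streak = 0
    for cycle, t_meas in enumerate(measured):
        error = t_meas - predicted_touchdown(p, cycle)
        if abs(error) <= tolerance:
            streak = 0
            continue
        streak += 1
        if streak >= patience:
            period = 1.0 / p.frequency
            # A late touch-down (error > 0) means the swing took longer than
            # planned, so reduce the stance fraction (lengthen the swing).
            p.duty_factor = min(max(p.duty_factor - rate * error / period, 0.1), 0.9)
            streak = 0
    return p


one_stumble = adapt(CPGParams(1.0, 0.3, 0.6), [0.4, 1.5, 2.4])
print(one_stumble.duty_factor)            # 0.6 (unchanged)
persistent = adapt(CPGParams(1.0, 0.3, 0.6), [0.5, 1.5, 2.5])
print(round(persistent.duty_factor, 3))   # 0.55
```

A single late touch-down leaves the pattern unchanged, while three late touch-downs in a row permanently lengthen the swing phase, which is the elastic/plastic distinction in miniature.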

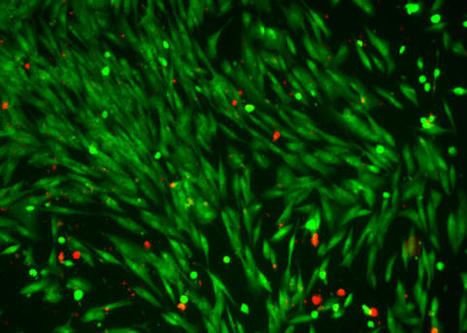

Scientists from the Max Planck Institute for Intelligent Systems and the Max Planck Institute for Solid State Research have developed organic microparticles that can steer through biological fluids and dissolved blood in unprecedented ways. Even in very salty liquids, the microswimmers can be propelled forward at high speed by visible light, either individually or as a swarm. Additionally, they are partially biocompatible and can take up and release cargo on demand. The material properties are so favorable that they could pave the way toward designing semi-autonomous microrobots for biomedicine. Science fiction novelists couldn't have come up with a crazier plot: light-driven microrobots streaming through blood or other bodily fluids, carrying drugs to cancer cells and dropping off the medication on the spot. What sounds like far-fetched fantasy is, however, a short summary of a research project published in the journal Science Robotics. The microswimmers presented in the work could one day perform tasks in living organisms or biological environments that are otherwise hard to access. Looking even further ahead, the swimmers could perhaps one day help treat cancer or other diseases. The paper, "Light-driven carbon nitride microswimmers with propulsion in biological and ionic media and responsive on-demand drug delivery," comes from a team of scientists at the Max Planck Institute for Intelligent Systems (MPI-IS) and its neighboring institute, the Max Planck Institute for Solid State Research (MPI-FKF). 
The work involved scientists from the Physical Intelligence Department at MPI-IS, led by Metin Sitti, and from the Nanochemistry Department at MPI-FKF, led by Bettina Lotsch. Designing and fabricating such advanced microswimmers had seemed impossible until now. Light-driven locomotion is hindered by the salts found in water and in the body, so it requires a sophisticated design that is difficult to scale up. Additionally, controlling the robots from the outside is challenging and often costly. Controlled cargo uptake and on-the-spot delivery is among the most demanding challenges in nanorobotics. The scientists used a porous two-dimensional carbon nitride (CNx) that can be synthesized from organic materials, for instance urea. Like the solar cells of a photovoltaic panel, carbon nitride can absorb light, which then provides the energy to propel the robot forward when light illuminates the particle surface.

High ion tolerance

"The use of light as the energy source of propulsion is very convenient when doing experiments in a petri dish or for applications directly under the skin," says Filip Podjaski, a group leader in the Nanochemistry Department at MPI-FKF. "There is just one problem: even tiny concentrations of salts prohibit light-controlled motion. Salts are found in all biological liquids: in blood, cellular fluids, digestive fluids etc. However, we have shown that our CNx microswimmers function in all biological liquids, even when the concentration of salt ions is very high. This is only possible due to a favorable interplay of different factors: efficient light energy conversion as the driving force, as well as the porous structure of the nanoparticles, which allows ions to flow through them, reducing the resistance created by salt, so to speak. In addition, in this material, light favors the mobility of ions, making the particle even faster."

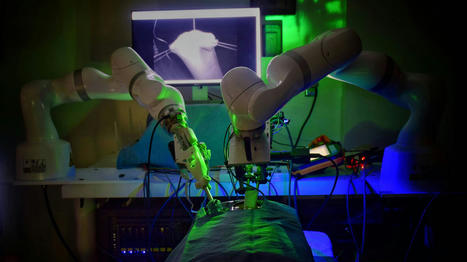

In four experiments on pig tissues, the robot excelled at suturing two ends of an intestine, one of the most intricate and delicate tasks in abdominal surgery. A robot has performed laparoscopic surgery on the soft tissue of a pig without the guiding hand of a human, a significant step in robotics toward fully automated surgery on humans. Designed by a team of Johns Hopkins University researchers, the Smart Tissue Autonomous Robot (STAR) is described today in Science Robotics. "Our findings show that we can automate one of the most intricate and delicate tasks in surgery: the reconnection of two ends of an intestine. The STAR performed the procedure in four animals and it produced significantly better results than humans performing the same procedure," said senior author Axel Krieger, an assistant professor of mechanical engineering at Johns Hopkins' Whiting School of Engineering. The robot excelled at intestinal anastomosis, a procedure that requires a high level of repetitive motion and precision. Connecting two ends of an intestine is arguably the most challenging step in gastrointestinal surgery, requiring a surgeon to suture with high accuracy and consistency. Even the slightest hand tremor or misplaced stitch can result in a leak that could have catastrophic complications for the patient. Working with collaborators at the Children's National Hospital in Washington, D.C. and Jin Kang, a Johns Hopkins professor of electrical and computer engineering, Krieger helped create the robot, a vision-guided system designed specifically to suture soft tissue. Their current iteration advances a 2016 model that repaired a pig's intestines accurately, but required a large incision to access the intestine and more guidance from humans. The team equipped the STAR with new features for enhanced autonomy and improved surgical precision, including specialized suturing tools and state-of-the-art imaging systems that provide more accurate visualizations of the surgical field. 
Soft-tissue surgery is especially hard for robots because of its unpredictability, forcing them to be able to adapt quickly to handle unexpected obstacles, Krieger said. The STAR has a novel control system that can adjust the surgical plan in real time, just as a human surgeon would. "What makes the STAR special is that it is the first robotic system to plan, adapt, and execute a surgical plan in soft tissue with minimal human intervention," Krieger said.

Researchers at the Italian Institute of Technology (IIT) have recently been exploring a fascinating idea: creating humanoid robots that can fly. To efficiently control the movements of flying robots, objects or vehicles, however, researchers require systems that can reliably estimate the intensity of the thrust produced by propellers, which allow them to move through the air. As thrust forces are difficult to measure directly, they are usually estimated from data collected by onboard sensors. The team at IIT recently introduced a new framework that can estimate the thrust intensities of flying multi-body systems that are not equipped with thrust-measuring sensors. This framework, presented in a paper published in IEEE Robotics and Automation Letters, could ultimately help them realize their envisioned flying humanoid robot. "Our early ideas of making a flying humanoid robot came up around 2016," Daniele Pucci, head of the Artificial and Mechanical Intelligence lab that carried out the study, told TechXplore. "The main purpose was to conceive robots that could operate in disaster-like scenarios, where there are survivors to rescue inside partially destroyed buildings, and these buildings are difficult to reach because of potential floods and fire around them." The key objective of the recent work by Pucci and his colleagues was to devise a robot that can manipulate objects, walk on the ground and fly. As many humanoid robots can already manipulate objects and move on the ground, the team decided to extend the capabilities of a humanoid robot to include flight, rather than develop an entirely new robotic structure. "Once provided with flight abilities, humanoid robots could fly from one building to another avoiding debris, fire and floods," Pucci said. "After landing, they could manipulate objects to open doors and close gas valves, or walk inside buildings for indoor inspection, for instance looking for survivors of a fire or natural disaster." 
Initially, Pucci and his colleagues tried to provide iCub, a renowned humanoid robot created at IIT, with the ability to balance its body on the ground, for instance standing on a single foot. Once they achieved this, they started working on broadening the robot's locomotion skills, so that it could also fly and move in the air. The team refer to the area of research they have been focusing on as "aerial humanoid robotics." "To the best of our knowledge, we produced the first work about flying humanoid robots," Pucci said. "That paper was obviously testing flight controllers in simulation environments only, but given the promising outcomes, we embarked upon the journey of designing iRonCub, the first jet-powered humanoid robot presented in our latest paper."
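The basic estimation problem, inferring thrust from motion rather than measuring it, can be illustrated in heavily simplified form. The minimal sketch below assumes a single rigid body (not an articulated humanoid), no aerodynamic drag, two thrusters with known, fixed directions, and a known world-frame acceleration (for example from a state estimator). Under those assumptions Newton's second law yields the net thrust force, and a least-squares solve splits it into per-thruster magnitudes. This is not the IIT team's framework, which handles multi-body dynamics; the function name and example values are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
GRAVITY = 9.81  # m/s^2, acting along -z in the world frame


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def estimate_two_thrusts(mass: float, accel: Vec3, d1: Vec3, d2: Vec3) -> Tuple[float, float]:
    """Least-squares thrust magnitudes t1, t2 for one rigid body.

    Newton: m*a = t1*d1 + t2*d2 + m*(0, 0, -g), so the net thrust force is
    F = m*(a_x, a_y, a_z + g). Solve F ~ D t with D = [d1 d2] through the
    2x2 normal equations (D^T D) t = D^T F.
    """
    force = (mass * accel[0], mass * accel[1], mass * (accel[2] + GRAVITY))
    a11, a12, a22 = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    b1, b2 = dot(d1, force), dot(d2, force)
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("parallel thrust directions: magnitudes are not identifiable")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


# Example: a 2 kg body hovering (zero acceleration) with two thrusters
# tilted 30 degrees to either side of vertical.
theta = math.radians(30)
d_left = (math.sin(theta), 0.0, math.cos(theta))
d_right = (-math.sin(theta), 0.0, math.cos(theta))
t1, t2 = estimate_two_thrusts(2.0, (0.0, 0.0, 0.0), d_left, d_right)
print(round(t1, 2), round(t2, 2))  # 11.33 11.33
```

When both thrusters point along the same axis, the net force alone cannot tell them apart, which is why the sketch raises an error in that case; real systems resolve such ambiguities with additional information such as torques and joint dynamics.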

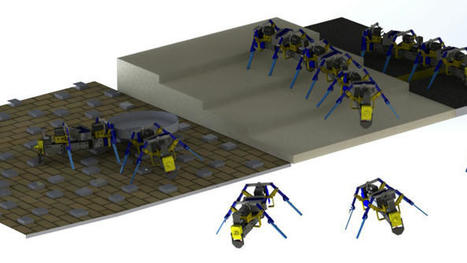

As a robotics engineer, Yasemin Ozkan-Aydin, assistant professor of electrical engineering at the University of Notre Dame, gets her inspiration from biological systems. Researchers have already drawn on the collective behaviors that ants, honeybees and birds use to solve problems and overcome obstacles in aerial and underwater robotics. Developing small-scale swarm robots with the capability to traverse complex terrain, however, comes with a unique set of challenges. In research published in Science Robotics, Ozkan-Aydin presents how she was able to build multi-legged robots capable of maneuvering in challenging environments and accomplishing difficult tasks collectively, mimicking their natural-world counterparts. “Legged robots can navigate challenging environments such as rough terrain and tight spaces, and the use of limbs offers effective body support, enables rapid maneuverability and facilitates obstacle crossing,” Ozkan-Aydin said. “However, legged robots face unique mobility challenges in terrestrial environments, which results in reduced locomotor performance.” For the study, Ozkan-Aydin said, she hypothesized that a physical connection between individual robots could enhance the mobility of a terrestrial legged collective system. Individual robots performed simple or small tasks such as moving over a smooth surface or carrying a light object, but if the task was beyond the capability of the single unit, the robots physically connected to each other to form a larger multi-legged system and collectively overcome issues. “When ants collect or transport objects, if one comes upon an obstacle, the group works collectively to overcome that obstacle. If there’s a gap in the path, for example, they will form a bridge so the other ants can travel across, and that is the inspiration for this study,” she said. 
“Through robotics we’re able to gain a better understanding of the dynamics and collective behaviors of these biological systems and explore how we might be able to use this kind of technology in the future.” Using a 3D printer, Ozkan-Aydin built four-legged robots measuring 15 to 20 centimeters, or roughly 6 to 8 inches, in length. Each was equipped with a lithium polymer battery, microcontroller and three sensors — a light sensor at the front and two magnetic touch sensors at the front and back, allowing the robots to connect to one another. Four flexible legs reduced the need for additional sensors and parts and gave the robots a level of mechanical intelligence, which helped when interacting with rough or uneven terrain. “You don’t need additional sensors to detect obstacles because the flexibility in the legs helps the robot to move right past them,” said Ozkan-Aydin. “They can test for gaps in a path, building a bridge with their bodies; move objects individually; or connect to move objects collectively in different types of environments, not dissimilar to ants.”

Ever since humanity grasped the idea of a robot, we’ve wanted to imagine robots in walking humanoid form. But making a robot walk like a human is not an easy task, and even the best of them end up with the somewhat shuffling gait of a Honda Asimo rather than the graceful poise of a ballerina. Only in recent years have walking robots appeared to come of age, and then not by mimicking the human gait but by moving in a way more akin to a bird. We’ve seen it in the Boston Dynamics models, and now also in a self-balancing two-legged robot developed at Oregon State University, which demonstrated its abilities by completing an unaided 5 km run after using machine learning to teach itself to run from scratch. It’s believed to be the first time a robot has achieved such a feat without first being programmed for the specific task. The university’s PR piece envisages a time when walking robots of this type have become commonplace and humans interact with them daily. We can certainly see that they could perform a huge number of autonomous outdoor tasks that a wheeled robot might find difficult, so maybe they have a bright future. Decide for yourself after watching the video.
