
Educational Policies and Youth in the 21st Century // Edited by Sharon L. Nichols, University of Texas at San Antonio // Published 2016

"As our student population diversifies rapidly, there is a critical need to better understand how national, regional, and/or local policies impact youth in school settings. In many cases, educational policies constructed with the goal of helping youth have the unintended consequence of inhibiting youth’s potential. This is especially the case when it comes to youth from historically underrepresented groups. Over and over, educational legislation aimed at improving life for youth has had the negative effect of eroding opportunities for our most vulnerable and oftentimes less visible youth.

The authors of this book examine the schooling experiences of Hispanic, African American, Indigenous, poor, and LGBT youth groups as a way to spotlight the marginalizing and shortsighted effects of national education language, immigration, and school reform policies. Leading authors from across the country highlight how educational policies impact youth’s development and socialization in school contexts. In most cases, policies are constructed by adults and implemented by adults, but are rarely informed by the needs and opinions of youth. Not only are youth not consulted, but policymakers also often neglect what we know about the psychological, emotional, and educational health of youth. As a result, both the short- and long-term impacts of these policies have only limited effects on improving students’ school performance or personal health issues such as depression or suicide.

In highlighting the demographic and cultural shifts of the 21st century, this book provides a compelling case for policymakers and their constituents to become more sensitive to the diverse needs of our changing student population and to advocate for policies that better serve them.

CONTENTS

Preface. Acknowledgments.

PART I: CHARACTERISTICS AND EXPERIENCES OF 21ST CENTURY YOUTH.

Educational Policy and Latin@ Youth in the 21st Century, P. Zitlali Morales, Tina M. Trujillo, and René Espinoza Kissell.

The Languaging Practices and Counternarrative Production of Black Youth, Carlotta Penn, Valerie Kinloch, and Tanja Burkhard.

Undocumented Youth, Agency, and Power: The Tension Between Policy and Praxis, Leticia Alvarez Gutiérrez and Patricia D. Quijada.

Sexual Orientation and Gender Identity in Education: Making Schools Safe for All Students, Charlotte J. Patterson, Bernadette V. Blanchfield, and Rachel G. Riskind.

Youths of Poverty, Bruce J. Biddle.

PART II: PROMINENT EDUCATIONAL POLICIES AFFECTING YOUTH.

Language Education Policies and Latino Youth, Francesca López.

The Impact of Immigration Policy on Education, Sandra A. Alvear and Ruth N. López Turley.

Mismatched Assumptions: Motivation, Grit, and High‐Stakes Testing, Julian Vasquez Heilig, Roxana Marachi, and Diana E. Cruz.

PART III: IMPLICATIONS FOR BETTER POLICY DEVELOPMENT FOR 21ST CENTURY YOUTH.

Searching Beyond Success and Standards for What Will Matter in the 21st Century, Luke Reynolds.

New Policies for the 21st Century, Sharon L. Nichols and Nicole Svenkerud‐Hale.

Social Policies and the Motivational Struggles of Youth: Some Closing Comments, Mary McCaslin."

http://www.infoagepub.com/products/Educational-Policies-and-Youth-in-the-21st-Century

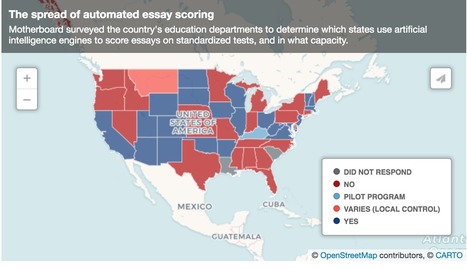

"Every year, millions of students sit down for standardized tests that carry weighty consequences. National tests like the Graduate Record Examinations (GRE) serve as gatekeepers to higher education, while state assessments can determine everything from whether a student will graduate to federal funding for schools and teacher pay. The education industry has long grappled with conscious and subconscious bias against students from certain language backgrounds, as demonstrated by efforts to ban the teaching of black English vernacular in several states. AI has the potential to exacerbate discrimination, experts say. Training essay-scoring engines on datasets of human-scored answers can ingrain existing bias in the algorithms. But the engines also focus heavily on metrics like sentence length, vocabulary, spelling, and subject-verb agreement—the parts of writing that English language learners and other groups are more likely to do differently. The systems are also unable to judge more nuanced aspects of writing, like creativity."... For full post, please visit: https://www.vice.com/amp/en_us/article/pa7dj9/flawed-algorithms-are-grading-millions-of-students-essays

[Published July 6th, 2015] ..."A recent description of results from a Public Policy Institute report reveals that the majority of California’s public school parents are uninformed about the new tests their children took this year. And despite numerous concerns regarding technological barriers, biases, and testing problems, it appears that in a matter of weeks, “test scores” will be released to the public.

It is important to consider that unless assessments are independently verified to adhere to basic standards of test development regarding validity, reliability, security, accessibility, and fairness in administration, the resulting scores will be meaningless and should not be used to draw claims or conclusions about student learning, progress, aptitude, or readiness for college or career."...

For full post, please visit: https://eduresearcher.com/2015/07/06/critical-questions-computerized-testing-sbac/

By Nancy Flanagan

"My friend Nick Krieger, whose blog, Fix the Mitten, is one of my must-reads, raised this issue earlier. I was intrigued: was this a loophole for the increasing number of parents who want to opt their children out of excessive and harmful testing? Nick offered this clarification:

THE TYPOGRAPHICAL ERROR: President Barack Obama signed the Every Student Succeeds Act (“ESSA”) into law on December 10, 2015, replacing the No Child Left Behind Act of 2001 (“NCLBA”). Under the ESSA, states must test public school students in mathematics and reading every year in grades 3 through 8, and at least once during high school. It has been widely reported that the ESSA mandates 95% participation by pupils on these required state tests. But does it really?

The section that purports to establish the 95% participation requirement, § 1111(c)(4)(E)(i), contains a typographical error. It provides that each state must "[a]nnually measure the achievement of not less than 95 percent of all students, and 95 percent of all students in each subgroup of students . . . on the assessments described under subsection (b)(2)(v)(I).” So what’s the problem? Well, there are no “assessments described under subsection (b)(2)(v)(I).” That’s because “subsection (b)(2)(v)(I)” doesn’t exist.

Congress almost certainly meant to say “subsection (b)(2)(B)(v)(I).” After all, this is the subsection that mandates the administration of mathematics and reading assessments each year in grades 3 through 8, and once in grades 9 through 12. But that’s not what the plain language of the statute actually states. And as Chief Justice John Roberts has reminded us, “the best evidence of Congress’s intent is the statutory text."*

So, while the ESSA unquestionably requires the states to administer yearly assessments in mathematics and reading, it does not actually require 95% student participation on those tests. Instead, as currently written, it requires 95% student participation on some other, completely different set of nonexistent assessments. Congress might want to consider hiring a few more proofreaders.

EXCEEDING STATUTORY AUTHORITY: Despite this typographical error, let’s assume that the 95% participation mandate is valid.

One of the major differences between the ESSA and the NCLBA is that the ESSA eliminates several federally mandated corrective measures and school intervention strategies, and returns significant control over the improvement of low-achieving schools to state and local education agencies. To this end, § 1111(e)(1)(B)(iii)(XI) of the ESSA expressly prohibits the Secretary of Education from promulgating any rules that prescribe the manner in which the states take into account and enforce the 95% participation mandate.

In spite of this statutory prohibition, Secretary of Education John B. King, Jr. has announced several proposed amendments to 34 C.F.R. § 200.15 that would (1) order the states to comply with the 95% participation mandate in a uniform manner, (2) require each state to implement one of several punitive reform measures for any school that fails to comply with the 95% participation mandate, and (3) direct any school that does not meet the 95% mandate to adopt an “improvement plan."** Secretary King’s proposed amendments to Regulation § 200.15 plainly violate the intent of Congress as unambiguously expressed in the statute. Will anyone step forward to stop the Department of Education from promulgating these illegal rules? Only time will tell.

WHY IT MATTERS: The ESSA explicitly recognizes the right of parents to opt their children out of state standardized assessments if authorized by state or local law. In particular, § 1111(b)(2)(K) provides that "[n]othing in this paragraph shall be construed as preempting a State or local law regarding the decision of a parent to not have the parent’s child participate in the academic assessments under this paragraph.” Secretary King is a known proponent of high-stakes standardized testing. Indeed, during his tenure as New York State Education Commissioner, King’s onerous testing policies led to one of the largest parental opt-out movements in the country.

King has already tipped his hand by proposing new regulations that would punish public schools for failing to test enough of their students. Looking forward, it seems likely that the Department of Education will argue that parental opt-outs violate the 95% participation mandate in § 1111(c)(4)(E)(i) and King’s proposed amendments to Regulation § 200.15.

If and when the federal government begins making such arguments, it will be important for parents and educators to remember that Congress expressly left choices concerning parental opt-outs and enforcement of the 95% participation mandate to the individual states.

* NFIB v. Sebelius, 567 U.S. ___; 132 S.Ct. 2566, 2583; 183 L.Ed.2d 450 (2012).

** 81 Fed. Reg. 34540." https://www.edweek.org/teaching-learning/opinion-does-essa-actually-require-95-participation-on-state-assessments/2016/09

By Renee Dudley

"Part Six: Internal documents show the makers of the new SAT knew the test was overloaded with wordy math problems – a hurdle that could reinforce race and income disparities. The College Board went ahead with the exam anyway."

[Picture caption] SOUNDED ALARM: Dan Lotesto, who teaches at the University of Wisconsin-Milwaukee, helped the College Board review potential questions for the redesigned SAT and warned that many questions were sloppy or too long – and that test-takers would suffer. REUTERS/Darren Hauck

"NEW YORK – In the days after the redesigned SAT college entrance exam was given for the first time in March, some test-takers headed to the popular website reddit to share a frustration. They had trouble getting through the exam’s new mathematics sections. “I didn’t have nearly enough time to finish,” wrote a commenter who goes by MathM. “Other people I asked had similar impressions.” The math itself wasn’t the problem, said Vicki Wood, who develops courses for PowerScore, a South Carolina-based test preparation company. The issue was the wordy setups that precede many of the questions. “The math section is text heavy,” said Wood, a tutor who took the SAT in May. “And I ran out of time.” The College Board, the maker of the exam, had reason to expect just such an outcome for many test-takers. When it decided to redesign the SAT, the New York-based not-for-profit sought to build an exam with what it describes as more “real world” applications than past incarnations of the test. Students wouldn’t simply need to be good at algebra, for instance. The new SAT would require them to “solve problems in rich and varied contexts.” But in evaluating that approach, the College Board’s own research turned up problems that troubled even the exam makers."...

- Part One: College Board gave SATs it knew were “compromised”
- Part Two: Despite tighter security, new SAT gets hacked
- Part Three: Chinese cheating rings penetrate U.S. colleges
- Part Four: Widespread cheating alleged in program owned by ACT
- Part Five: Breach exposes questions for upcoming SAT exams

For the full post and links to Parts 1-5 of the investigative series, please visit: http://www.reuters.com/investigates/special-report/college-sat-redesign/

By Niki Kelly // The Journal Gazette

"INDIANAPOLIS - A testing error has invalidated hundreds of student ISTEP+ math scores around the state, including at three area schools. The students were mistakenly given access to calculators on a section of the test where calculators were not allowed. The Indiana Department of Education and school officials say testing vendor Pearson is to blame for the error.

"It's so discouraging for the children. It's discouraging for everyone," said Lori Vaughn, assistant superintendent at DeKalb Central United schools. "It is what it is. I hate that expression but we're going to move on. It's a black eye when DOE puts (scores) out."

She said 34 students in third grade at Waterloo Elementary and 19 students in fourth grade at the school will receive "undetermined" scores. This results in passing rates of less than a percent for third grade and 17 percent for fourth. "It's horrific," Vaughn said. "And that's what's going to be put out with no explanation. It will impact our participation rate and our accountability grade."

Test scores are a large factor in the A to F accountability grades that schools will receive later this year. Department of Education officials told Vaughn there is nothing that can be done now, but schools can appeal those A to F grades when they are issued.

She explained that schools received guidance on calculators that seemed different from previous years. Two people in the district called the company separately to verify the information and were told by Pearson to proceed as directed. So when the test began, the calculator icon came up on the screen for students who shouldn't have been allowed to use a calculator. Some special education students are provided calculators as an accommodation. Vaughn said two other schools in the district luckily hadn't started testing before the error was realized.

Pearson said it is aware of the "isolated issues" having to do with calculator accommodations. "In some cases, Pearson inadvertently provided inaccurate or unclear guidance on the use of calculators during testing. In these instances, we followed up quickly to help local school officials take corrective action," a statement from the company said. "Pearson regrets that any Indiana students, teachers, and schools were impacted by this issue."

It affected only 20 schools out of hundreds, including New Haven Middle School and Emmanuel-St. Michael Lutheran School in Fort Wayne. Molly Deuberry, communications director for the Department of Education, didn't have an overall number of students affected.

The biggest problem came at Rochester schools in Fulton County, where 700 elementary, middle and high school kids mistakenly had access to the calculator. Some used it and others were stopped by individual teachers. Their results have been invalidated. Some sophomores who were specifically affected will need to retake the math portion of the assessment.

A Department of Education press release said it is working with school corporations to evaluate options for limiting the accountability impact. Rochester and other schools may have a high volume of undetermined math results due to the invalidation, which in turn leave proficiency rates and growth scores to be based on a small subset of the overall school population in 2016-17, and student test results from the 2015-16 school year. The department does not have any authority under current statutes to address or rectify this concern. However, the State Board of Education conducts an appeals process for schools that believe the final A-F letter grade does not accurately reflect the school's performance, growth, or multiple measures. Parents received access to student scores starting this week. Individual appeals can be brought."...

For original post, please see:

http://www.journalgazette.net/news/local/indiana/20170620/error-invalidates-hundreds-of-istep-math-scores

By Elissa Nadworny

"Fatima Martinez knows there's a lot riding on her SAT score. "My future is at stake," says the Los Angeles high school senior. "The score I will receive will determine which UC schools I get into." But that may not always be the case.

A lawsuit expected to be filed Tuesday is challenging the University of California system's use of the SAT or ACT as a requirement for admission. A draft of the document obtained by NPR argues that the tests — long used to measure aptitude for college — are deeply biased and provide no meaningful information about a student's ability to succeed, and therefore their requirement is unconstitutional.

"The evidence that we're basing the lawsuit on is not in dispute," says attorney Mark Rosenbaum of the pro bono firm Public Counsel. "What the SAT and ACT are doing are exacerbating inequities in the public school system and keeping out deserving students every admissions cycle."

Public Counsel is filing the suit in California Superior Court on behalf of students and a collection of advocacy groups."... For full story, please visit: https://www.npr.org/2019/12/10/786257347/lawsuit-claims-sat-and-act-are-illegal-in-california-admissions

By Morgan Boydston, KTVB

BOISE - How would you feel if you found out your child was being tracked from the minute you registered them for kindergarten until they enter the workforce? Idaho has several agreements that allow and require the state to do just that. Many parents don't realize that their student's personal information is being collected and shared at the state and federal level, among many different agencies.

KTVB talked with concerned parents, as well as the State Department of Education to get to the bottom of why students' data is being stored and shared.

"I believe our youngest, most vulnerable citizens probably should have the most protection of privacy," said Mila Wood, a concerned mother and spokesperson for Idahoans for Local Education.

From the very beginning of the school day, children are shedding data. From the bus stop to the classroom laptop, hundreds of data points are being collected by state, corporate and federal agencies.

"They collect everything, they absolutely collect everything," Wood added. "Actually one of the very first items that kind of brought my attention was this little card in my son's wallet when he was in eighth grade and it's an Idaho Department of Labor card."

Stacey Knudsen is another parent active in finding out how, where and why her children's personal information is being stored. That includes sensitive information attached to their individual student ID numbers, such as disciplinary actions, meal choices, socioeconomic status and much, much more.

"This information is really sensitive," Wood added.

"When we talk about keeping kids safe, and their data, that's extremely important," said Jeff Church, spokesperson for the Idaho Department of Education.

The Idaho State Board of Education is constitutionally responsible for supervising public education from kindergarten through college. For that reason, a state-wide data system was created to evaluate and improve the process by which a student moves through the education system in Idaho. The Board works in conjunction with the State Department of Education, which tracks K-12 data.

"We only collect the data that we truly need, whether it be for federal reporting, state reporting or financial calculations and payments out to school districts," Church said. "Over the last year we have gone through a process of removing upwards of 200 data elements within that system."

Department officials say they have been working to collect a lot less data than they used to by asking the question: Do we need the data? "If we don't for federal or state or financial calculations, we don't need it and we don't want it," Church added.

But parents say they remain concerned because the Department of Education still collects 390 data elements, many of which they consider alarming.

"There's certainly not a need for us to be storing the amount of data that we're storing," said Knudsen.

Church argues that the aggregate academic information, like test scores, is crucial for policy-making decisions and measuring Idaho's success compared to other states.

"Seeing the data and how students across the state are doing on math informs the superintendent on policy decisions to say we need to make a change and move toward what works," he said.

Concerned parents believe the problems stem from personally identifiable information that other state, federal and private agencies have access to.

"Where is this information going? Who is utilizing my child's psychometric data?" Wood asked.

Parents also wonder why they are not given the option to give, or deny, consent for the data.

"Nobody can really give us a clear picture of who is accessing and how they're keeping that data safe," Knudsen said.

To protect that data, there is a federal law in place called the Family Educational Rights and Privacy Act, or FERPA. But activists say it's become too relaxed over the last several years. In 2014, Idaho enacted its own student privacy law. The Board of Ed says the state-appointed Data Management Council does not allow a free-flow of information because the council oversees any requests to get ahold of any data.

"At no time is an individual student's data utilized for decision-making purposes or for individual purpose of any kind," Church added.

The department shares group and personal data with many state and federal departments, as well as private companies, including, but not limited to:

- The Department of Health and Welfare

- Federal Education Facts

- Smarter Balanced Consortium

- Title I Student Counts

- Migrant Student Information Exchange

- ISAT, College Board

- Data Recognition Corp

- Individual Student Identifier for K-12 Longitudinal Data System

"So they are all sharing the data together within our state longitudinal data system," Wood said.

They also share with the State Board of Education, which has agreements with other state and federal agencies such as the Department of Juvenile Corrections, Department of Labor, Department of Transportation, and National Student Clearinghouse.

Knudsen and Wood have plenty of advice for parents who are just finding out about this phenomenon.

"What you think is just between you and the teacher and the school, that's no longer the case," Knudsen said. "Be a little more wary of what you fill out, and really read through the documents that you're signing at school."

Church says parents can contact the Department of Education and ask to see their child's personal data. Parents must file a public records request, and then meet with a representative in person.

The Board of Education says parents also have the option to go directly to their child's school and request to see the data there, at the source."

For full post, including news video coverage, please visit:

http://www.ktvb.com/news/investigations/7-investigations/student-data-being-stored-and-swapped-among-many-agencies/50092079

"Education and testing mammoth Pearson has an established history in botching high-stakes testing. Pearson did it again, in Mississippi. According to the Associated Press (AP), Mississippi canceled its contract with the testing giant after Pearson fessed up to mixing up scoring tables for an exam that now has approximately 1,000 Mississippi students either graduating when exit scores were not actually high enough or not graduating because of test scores that were not too low after all.

From AP on Friday, June 16, 2017:

"The Mississippi Department of Education is firing a testing company, saying scoring errors raise questions about the graduation status of nearly 1,000 students statewide. The state Board of Education revoked a contract with NCS Pearson in closed session Friday, after the Pearson PLC unit told officials it used the wrong table to score U.S. history exams for students on track to graduate this spring. Students who did poorly got overly high scores, while those who did better didn’t get enough credit. Associate Superintendent Paula Vanderford says it’s too soon to know how many students may have graduated or been denied diplomas in error, or what the state will do about either circumstance."

The AP release continues with an inept-yet-contrite Pearson promising to “assist the state in any way possible.” Of course, the way to assist the state is to not put the state in this awful position to begin with. And it’s not the first time Pearson incompetence has caused Mississippi problems. As the AP continues:

"In 2012, a scoring error on the high school biology exam wrongly denied diplomas to five students. Pearson compensated them with $50,000 scholarships to any Mississippi university. Another 116 students who were affected less severely got $10,000 or $1,000 scholarships. In 2015, Pearson paid the state $250,000 after its online testing platform crashed for a day."

What is astounding is that even as Pearson profits are suffering to a record extent, its CEO, John Fallon, received a 20-percent pay raise in May 2017. From the May 05, 2017, Telegraph:

"Two thirds of shareholders rejected the company’s remuneration report at its AGM after Mr Fallon received a £343,000 [$439,383] bonus, equivalent to a 20pc [percent] pay rise, despite having presided over its worst 12 months in nearly half a century on the stock exchange.

Mr Fallon’s position was undermined as 66pc of shareholders voted against his pay in a meeting marked by protests from teaching unions over Pearson’s activities in the developing world. …

Earlier in the day, Mr Fallon had sought to calm criticism of his bonus by spending all of it, net of tax, on Pearson shares to align his own interests with those of shareholders.

He declined to comment on whether he considered rejecting the bonus, which came after a £2.6bn [$3.34 billion] annual loss and the biggest ever one-day fall in Pearson’s shares following a massive profit warning. …

Despite the controversy, the shares were up nearly 12pc in the afternoon after Pearson unveiled a new £300m [$384 million] tranche of job cuts and office closures, in the latest phase of Mr Fallon’s battle to reverse its fortunes. His third round of restructuring comes after 4,000 staff were cut last year, when it sought similar savings."

Indeed, Fallon is being rewarded for throwing the crew overboard on a poison ship that is taking on more water than ever. It seems, however, that the Mississippi Board of Education has finally had enough of Pearson." For original post, see:

https://deutsch29.wordpress.com/2017/06/17/pearson-botches-mississippi-testing-again-mississippi-immediately-severs-contract/

By Jeff Amy

JACKSON, Miss. (AP) "The Mississippi Department of Education is firing a testing company, saying scoring errors raise questions about the graduation status of nearly 1,000 students statewide.

The state Board of Education revoked a contract with NCS Pearson in closed session Friday, after the Pearson PLC unit told officials it used the wrong table to score U.S. history exams for students on track to graduate this spring. Students who did poorly got overly high scores, while those who did better didn’t get enough credit. Associate Superintendent Paula Vanderford says it’s too soon to know how many students may have graduated or been denied diplomas in error, or what the state will do about either circumstance.

Pearson spokeswoman Laura Howe apologized on behalf of the company and said Pearson is working to correct the scores. “We are disappointed by today’s board decision but stand ready to assist the state in any way possible,” she wrote in an email.

Students typically study U.S. history in their third year in high school, and take the subject test that spring. Students who score poorly, though, can take the test up to three more times as a senior. The 951 students in question were either seniors, or juniors scheduled to graduate early, and needed their scores to earn diplomas.

The answers about graduating students will be tricky because students have different options to graduate. Formerly, every student had to pass each of Mississippi’s four subject tests in biology, history, algebra and English to earn a high school diploma. Now, students can fail a test and still graduate if class grades are high enough, they score well enough on other subject tests, they score above 17 on part of the ACT college test, or they earn a C or better in a college class. Eventually, the tests will count for 25 percent of the grades in each subject.

About 27,000 students took the test overall. Vanderford said scores for each one will have to be verified. The exam scores also affect the grades that Mississippi gives to public schools and districts. “The agency is committed to ensuring that the data is correct,” she said.

Vanderford said Pearson has had other problems with its Mississippi tests. In 2012, a scoring error on the high school biology exam wrongly denied diplomas to five students. Pearson compensated them with $50,000 scholarships to any Mississippi university. Another 116 students who were affected less severely got $10,000 or $1,000 scholarships. In 2015, Pearson paid the state $250,000 after its online testing platform crashed for a day.

Pearson had a contract worth a projected $24 million over the next six years to provide tests for history, high school biology, 5th grade science and 8th grade science. The board hired Minnesota-based Questar Assessment to administer all those tests for one year for $2.2 million. Questar, which is being bought by nonprofit testing giant ETS, already runs all of Mississippi’s language arts and math tests. Because Mississippi owns the questions to the history and science tests, Vanderford said it will be possible for Questar to administer those exams on short notice. The state will seek a contractor to give those tests on a long-term basis in coming months."

For main story, please see: https://apnews.com/115d48fe350843d6baa60bc277fd1bc8

The following resolution was proposed and passed in April 2020 at the CA-HI State NAACP Resolutions Conference, and passed by the national delegates at the NAACP National Conference on September 26th, 2020. https://www.naacp.org/wp-content/uploads/2020/09/2020-Resolutions-version-to-be-reviewed-by-the-Convention-Delegates.pdf

"WHEREAS the COVID-19 pandemic has resulted in widespread school closures that are disproportionately disadvantaging families in under-resourced communities; and

WHEREAS resulting emergency learning tools have primarily been comprised of untested, online technology apps and software programs; and

WHEREAS, the National Education Policy Center has documented evidence of widespread adoptions of apps and online programs that fail to meet basic safety and privacy protections; and

WHEREAS privately managed cyber/online schools, many of which have been involved in wide-reaching scandals involving fraud, false marketing, and unethical practices, have seized the COVID crisis to increase marketing of their programs that have resulted in negative outcomes for students most in need of resources and supports; and

WHEREAS, parents and students have a right to be free from intrusive monitoring of their children’s online behaviors, have a right to know what data are being collected, what entities have access, how long data will be held, in what ways data would be combined, and how data could be protected against exploitation;

WHEREAS increased monitoring and use of algorithmic risk assessments on students’ behavioral data are likely to disproportionately affect students of color and other underrepresented or underserved groups, such as immigrant families, students with previous disciplinary issues or interactions with the criminal justice system, and students with disabilities; and

WHEREAS serious harms resulting from the use of big data and predictive analytics have been documented to include targeting based on vulnerability, misuse of personal information, discrimination, data breaches, political manipulation and social harm, data and system errors, and limiting or creating barriers to access for services, insurance, employment, and other basic life necessities;

BE IT THEREFORE RESOLVED that the NAACP will advocate for strict enforcement of the Family Educational Rights and Privacy Act to protect youth from exploitative data practices that violate their privacy rights or lead to predictive harms; and

BE IT FURTHER RESOLVED that the NAACP will advocate for federal, state, and local policy to ensure that schools, districts, and technology companies contracting with schools will neither collect, use, share, nor sell student information unless given explicit permission by parents in plain language and only after being given full informed consent from parents about what kinds of data would be collected and how it would be utilized; and

BE IT FURTHER RESOLVED that the NAACP will work independently and in coalition with like-minded civil rights, civil liberties, social justice, education and privacy groups to collectively advocate for stronger protection of data and privacy rights; and

BE IT FURTHER RESOLVED that the NAACP will oppose state and federal policies that would promote longitudinal data systems that track and/or share data from infancy/early childhood in exploitative, negatively impactful, discriminatory, or racially profiling ways through their education path and into adulthood; and

BE IT FINALLY RESOLVED that the NAACP will urge Congress and state legislatures to enact legislation that would prevent technology companies engaged in big data and predictive analytics from collecting, sharing, using, and/or selling children’s educational or behavioral data."

"Dear Members of the California State Board of Education,

Last spring, 3.2 million students in California (grades 3-8 and 11) took the new, computerized Math and English Language Arts/Literacy CAASPP tests (California Assessment of Student Performance and Progress). The tests were developed by the Smarter Balanced Assessment Consortium, and administered and scored by ETS (Educational Testing Service). Costs are estimated at $360 million in federal tax dollars and $240 million in state funds for three years of administration and scoring.

Despite the documented failure of the assessments to meet basic standards of testing and accountability, [invalid] scores are scheduled to be released to the public on September 9th. According to media reports, the 11th grade scores will be used for educational decision-making by nearly 200 colleges and universities in six states. For detailed documents, see Critical Questions about Computerized Assessments and SmarterBalanced Test Scores, the SR Education SBAC invalidation report, the following video, and transcript provided here.

At the September 2nd, 2015 State Board of Education meeting, you heard public comment from Dr. Doug McRae, a retired test and measurement expert who has for the past five years communicated directly and specifically to the Board about validity problems with the new assessments. He has submitted the following written comments for Item #1 [CAASPP Update] at the latest meeting and spoke again about the lack of evidence for validity, reliability, and fairness of the new assessments."... For the full post, see: http://eduresearcher.com/2015/09/08/openletter/

A class-action lawsuit has been filed in federal court on behalf of students who took online Advanced Placement tests last week and ran into technical trouble submitting their answers. It demands that the College Board score their answers instead of requiring them to retake the test in June, and provide hundreds of millions of dollars in monetary relief. The lawsuit, dated Tuesday, says that students’ inability to submit answers was the fault of the exam creators, and it charges that the College Board engaged in a number of “illegal activities,” including breach of contract, gross negligence, misrepresentation and violations of the Americans With Disabilities Act. It also seeks more than $500 million in compensatory damages as well as punitive damages. The College Board owns the AP program, although the AP tests are created and administered by the Educational Testing Service. Both of those organizations were named as defendants in the lawsuit, which was filed in a U.S. District Court in California. Peter Schwartz, College Board chief risk officer and general counsel, said in a statement: “It is wrong factually and baseless legally; the College Board will vigorously and confidently defend against it, and expect to prevail.”

He also said, “When the country shut down due to coronavirus, we surveyed AP students nationwide, and an overwhelming 91 percent reported a desire to take the AP Exam at the end of the course. Within weeks, we redesigned the AP Exams so that they could be taken at home. Nearly 3 million AP Exams have been taken over the first seven days. Those students who were unable to successfully submit their exam can still take a makeup and have the opportunity to earn college credit.” The Educational Testing Service did not respond to a query about the lawsuit. The College Board said last week that it had found the problems students faced submitting answers were largely caused by outdated browsers and students’ failure to see messages announcing the end of an exam. This is the first time that AP tests have been given online at home, a result of the shutdown of schools because of the coronavirus pandemic. The tests were previously given at school. But the College Board said it had surveyed students and that most wanted to take the tests online, noting that the scores can factor into college admissions decisions and that students can receive college credit for high scores. The online tests, in numerous subjects, were shortened from several hours to 45 minutes. Critics had warned that online testing is not fair to students who have no computer, access to Internet or quiet work spaces from which to study and work, or to students with disabilities who do not have appropriate accommodations — challenges the College Board acknowledged and said it tried to ameliorate. Critics also questioned the validity of the shortened exams.

The lawsuit was filed by parents on behalf of students who could not submit answers, as well as by the National Center for Fair and Open Testing, a nonprofit organization known as FairTest that works to end the misuse of standardized tests. (The lawsuit cites a post on The Answer Sheet blog with news about the problems students were facing.)

Schwartz, in his statement, called the lawsuit “a PR stunt masquerading as a legal complaint” that was “manufactured” by FairTest. FairTest interim director Bob Schaeffer said his organization did not start the lawsuit but was asked to join as a plaintiff by the lead counsel, and that his organization has been collecting complaints about the online tests. “The College Board was warned about many potential access, technology and security problems by FairTest and other groups that had documented crashes when other computerized tests were introduced,” said Schaeffer. “Nevertheless, the board rushed ‘untested’ AP computerized exams into the marketplace in order to preserve its largest revenue-generating program when they could no longer administer in-school tests.”

The College Board, a nonprofit organization that operates substantially like a business, said that students last week took 2.186 million AP exams in various subjects during the first week of the two-week May testing window, and that “less than 1 percent of students were unable to submit their responses.” The College Board did not provide the exact number of students who had problems but did note in an email that some students took more than one test. That makes it impossible for the public to know exactly how many students were affected. Most of the students who had problems found that they could not submit all or some of their answers. Many took photos or videos of their responses, but the College Board told them their responses could not be scored and that they would have to retake their exams in June.
Then, on Sunday, the College Board announced that students taking exams during this week of testing could email responses if they found they had trouble submitting. Students who took the tests last week, however, could not submit their answers for scoring and still had to retake them in June.

The lawsuit asks that the College Board accept any test answers from last week’s AP tests that can be shown to have been completed in time by time stamp, photo and email. It charges that the College Board ignored warnings that giving AP tests online would discriminate against students with disabilities and those who did not have access to technology or the Internet at home to take the exams. The plaintiffs are seeking compensatory damages of more than $500 million and “punitive damages in an amount sufficient to punish defendants” and “to deter them from engaging in wrongful conduct in the future.” The suit was filed by Phillip A. Baker from Baker, Keener & Nahra LLP in Los Angeles and Marci Lerner Miller from Miller Advocacy Group in Newport Beach. https://www.washingtonpost.com/education/2020/05/20/class-action-lawsuit-filed-against-college-board-about-botched-ap-tests/ Here is the lawsuit: https://www.scribd.com/document/462280771/AP-Lawsuit#download&from_embed

"The California Alliance of Researchers for Equity in Education recently released a research brief documenting concerns and recommendations related to the Common Core State Standards Assessments in California (also referred to as the CAASPP, California Assessment of Student Performance and Progress or “SBAC” which refers to the “Smarter Balanced” Assessment Consortium). A two-page synopsis as well as the full CARE-ED research brief may be downloaded from the main http://care-ed.org website. The following is an introduction:

“Here in California, public schools are gearing up for another round of heavy testing this spring, including another round of Common Core State Standards assessments. In this research brief, the California Alliance of Researchers for Equity in Education (CARE-ED), a statewide collaborative of university-based education researchers, analyzes the research basis for the assessments tied to the Common Core State Standards (CCSS) that have come to California. We provide historical background on the CCSS and the assessments that have accompanied them, as well as evidence of the negative impacts of high-stakes testing. We focus on the current implementation of CCSS assessments in California, and present several concerns. Finally, we offer several research-based recommendations for moving towards meaningful assessment in California’s public schools. Highlights of the research brief are available for download here.

The complete research brief on CCSS Assessments is available for download here.”

Background from the two-page overview includes the following summary of concerns:

- “The assessments have been carefully examined by independent examiners of the test content who concluded that they lack validity, reliability, and fairness, and should not be administered, much less be considered a basis for high-stakes decision making.

- Nonetheless, CA has moved forward in full force. In spring 2015, 3.2 million students in California (grades 3-8 and 11) took the new, computerized Math and English Language Arts/Literacy CAASPP tests (California Assessment of Student Performance and Progress). Scores were released to the public in September 2015, and as many predicted, a majority of students failed.

- Although proponents argue that the CCSS promotes critical thinking skills and student-centered learning (instead of rote learning), research demonstrates that imposed standards, when linked with high-stakes testing, not only de-professionalize teaching and narrow the curriculum, but in so doing, also reduce the quality of education and student learning, engagement, and success.

- The implementation of the CCSS assessments raises at least four additional concerns of equity and access. First, the cost of implementing the CCSS assessments is high and unwarranted, diverting hundreds of millions of dollars from other areas of need. Second, the technology and materials needed for CCSS assessments require high and unwarranted costs, and California is not well-equipped to implement the tests. Third, the technology requirements raise concerns not only about cost, but also about access. Fourth, the CCSS assessments have not provided for adequate accommodations for students with disabilities and English language learners, or for adequate communication about such accommodations to teachers.”…

And the following quote captures a culminating statement:

“…We support the public call for a moratorium on high-stakes testing broadly, and in particular, on the use of scientifically discredited assessment instruments (like the current SBAC, PARCC, and Pearson instruments) and on faulty methods of analysis (like value-added modeling of test scores for high-stakes decision making).”…

For the full research brief, including guiding questions and recommendations, please see: http://www.care-ed.org

As of February 2, 2016, the following university-based researchers in California have endorsed the statement.

University affiliations are provided for identification purposes only.

Al Schademan, Associate Professor, California State University, Chico

Alberto Ochoa, Professor Emeritus, San Diego State University

Allison Mattheis, Assistant Professor, California State University, Los Angeles

Allyson Tintiangco-Cubales, Professor, San Francisco State University

Amy Millikan, Director of Clinical Education, San Francisco Teacher Residency

Anaida Colon-Muniz, Associate Professor, Chapman University

Ann Berlak, Retired lecturer, San Francisco State University

Ann Schulte, Professor, California State University, Chico

Annamarie Francois, Executive Director, University of California, Los Angeles

Annie Adamian, Lecturer, California State University, Chico

Anthony Villa, Researcher, Stanford University

Antonia Darder, Leavey Endowed Chair, Loyola Marymount University

Arnold Danzig, Professor, San José State University

Arturo Cortez, Adjunct Professor, University of San Francisco

Barbara Henderson, Professor, San Francisco State University

Betina Hsieh, Assistant Professor, California State University, Long Beach

Brian Garcia-O’Leary, Teacher, California State University, San Bernardino

Bryan K Hickman, Faculty, Solano Community College

Christine Sleeter, Professor Emerita, California State University, Monterey Bay

Christine Yeh, Professor, University of San Francisco

Christopher Sindt, Dean, Saint Mary’s College of California

Cindy Cruz, Associate Professor, University of California, Santa Cruz

Cinzia Forasiepi, Lecturer, Sonoma State University

Cristian Aquino-Sterling, Assistant Professor, San Diego State University

Danny C. Martinez, Assistant Professor, University of California, Davis

Darby Price, Instructor, Peralta Community College District

David Donahue, Professor, University of San Francisco

David Low, Assistant Professor, California State University, Fresno

David Stronck, Professor Emeritus, California State University, East Bay

Elena Flores, Associate Dean and Professor, University of San Francisco

Elisa Salasin, Program Director, University of California, Berkeley

Emma Fuentes, Associate Professor, University of San Francisco

Estela Zarate, Associate Professor, California State University, Fullerton

Genevieve Negrón-Gonzales, Assistant Professor, University of San Francisco

George Lipsitz, Professor, University of California, Santa Barbara

Gerri McNenny, Associate Professor, Chapman University

Heidi Stevenson, Associate Professor, University of the Pacific

Helen Maniates, Assistant Professor, University of San Francisco

Cynthia McDermott, Chair, Antioch University

Jacquelyn V Reza, Adjunct Faculty, University of San Francisco

Jason Wozniak, Lecturer, San José State University

Jolynn Asato, Assistant Professor, San José State University

Josephine Arce, Professor and Department Chair, San Francisco State University

Judy Pace, Professor, University of San Francisco

Julie Nicholson, Associate Professor of Practice, Mills College

Karen Cadiero-Kaplan, Professor, San Diego State University

Karen Grady, Professor, Sonoma State University

Kathryn Strom, Assistant Professor, California State University, East Bay

Kathy Howard, Associate Professor, California State University, San Bernardino

Kathy Schultz, Dean and Professor, Mills College

Katya Aguilar, Associate Professor, San José State University

Kevin Kumashiro, Dean and Professor, University of San Francisco

Kevin Oh, Associate Professor, University of San Francisco

Kimberly Mayfield, Chair, Holy Names University

Kitty Kelly Epstein, Doctoral Faculty, Fielding Graduate University

Lance T. McCready, Associate Professor, University of San Francisco

Lettie Ramirez, Professor, California State University, East Bay

Linda Bynoe, Professor Emerita, California State University, Monterey Bay

Maren Aukerman, Assistant Professor, Stanford University

Margaret Grogan, Dean and Professor, Chapman University

Margaret Harris, Lecturer, California State University, East Bay

Margo Okazawa-Rey, Professor Emerita, San Francisco State University

Maria Sudduth, Professor Emerita, California State University, Chico

Marisol Ruiz, Assistant Professor, Humboldt State University

Mark Scanlon-Greene, Mentoring Faculty, Fielding Graduate University

Michael Flores, Professor, Cypress College

Michael J. Dumas, Assistant Professor, University of California, Berkeley

Miguel López, Associate Professor, California State University, Monterey Bay

Miguel Zavala, Associate Professor, Chapman University

Mónica G. García, Assistant Professor, California State University, Northridge

Monisha Bajaj, Associate Professor, University of San Francisco

Nathan Alexander, Assistant Professor, University of San Francisco

Nick Henning, Associate Professor, California State University, Fullerton

Nikola Hobbel, Professor, Humboldt State University

Noah Asher Golden, Assistant Professor, Chapman University

Noah Borrero, Associate Professor, University of San Francisco

Noni M. Reis, Professor, San José State University

Patricia Busk, Professor, University of San Francisco

Patricia D. Quijada, Associate Professor, University of California, Davis

Patty Whang, Professor, California State University, Monterey Bay

Paula Selvester, Professor, California State University, Chico

Pedro Nava, Assistant Professor, Mills College

Pedro Noguera, Professor, University of California, Los Angeles

Penny S. Bryan, Professor, Chapman University

Peter McLaren, Distinguished Professor, Chapman University

Rebeca Burciaga, Assistant Professor, San José State University

Rebecca Justeson, Associate Professor, California State University, Chico

Rick Ayers, Assistant Professor, University of San Francisco

Rita Kohli, Assistant Professor, University of California, Riverside

Roberta Ahlquist, Professor, San José State University

Rosemary Henze, Professor, San José State University

Roxana Marachi, Associate Professor, San José State University

Ruchi Agarwal-Rangnath, Adjunct Professor, San Francisco State University

Scot Danforth, Professor, Chapman University

Sera Hernandez, Assistant Professor, San Diego State University

Shabnam Koirala-Azad, Associate Dean and Associate Professor, University of San Francisco

Sharon Chun Wetterau, Asst Field Director & Lecturer, CSU Dominguez Hills

Sumer Seiki, Assistant Professor, University of San Francisco

Suresh Appavoo, Associate Professor, Dominican University of California

Susan Roberta Katz, Professor, University of San Francisco

Susan Warren, Director and Professor, Azusa Pacific University

Suzanne SooHoo, Professor, Chapman University

Teresa McCarty, GF Kneller Chair, University of California, Los Angeles

Terry Lenihan, Associate Professor and Director, Loyola Marymount University

Theresa Montano, Professor, California State University, Northridge

Thomas Nelson, Doctoral Program Coordinator, University of the Pacific

Tomás Galguera, Professor, Mills College

Tricia Gallagher-Geurtsen, Adjunct Faculty, University of San Diego

Uma Jayakumar, Associate Professor, University of San Francisco

Ursula Aldana, Assistant Professor, University of San Francisco

Valerie Ooka Pang, Professor, San Diego State University

Walter J. Ullrich, Professor Emeritus, California State University, Fresno

Zeus Leonardo, Professor, University of California, Berkeley

_______________________

California Alliance of Researchers for Equity in Education. (2016). Common Core State Standards Assessments in California: Concerns and Recommendations. Retrieved from http://www.care-ed.org.

CARE-ED, the California Alliance of Researchers for Equity in Education, is a statewide collaborative of university-based education researchers that aims to speak as educational researchers, collectively and publicly, and in solidarity with organizations and communities, to reframe the debate on education.

___________________________________

For the main post, see: http://eduresearcher.com/2016/03/16/sbac-moratorium/

For Washington Post coverage of the document, see:

https://www.washingtonpost.com/news/answer-sheet/wp/2016/03/16/education-researchers-blast-common-core-standards-urge-ban-on-high-stakes-tests/

For related posts on EduResearcher, see here, here, and here.

For a collection on high-stakes testing with additional research and updates, visit “Testing, Testing, 1,2,3…”

http://bit.ly/testing_testing

By Pippa Allen-Kinross and Kathryn Snowdon

"Questions from an entire Edexcel A-level maths paper were circulated on social media before the exam this year, rather than just a small extract as was originally thought, Pearson has admitted. Edexcel’s parent company also confirmed that 78 students who sat the exam are having their results withheld while it investigates the leak, which has so far led to the arrests of two people.

Two questions from the A-level maths 3 paper were posted and circulated on Twitter before the exam was sat on June 14, but Pearson has today said its investigations team later discovered that questions from the entire paper had also been circulated within “closed social media networks”.

Although it is not clear how many students had access to these closed social media networks, Pearson’s responsible officer Derek Richardson said in a video statement today that the leak had been traced to one specific centre, which is believed to be in London. Richardson also accepted there was “speculation that exposure was broader” across social media networks, but said Pearson must base judgements on this issue “on hard evidence rather than speculation”.

“I’d like to reassure you that we detected the breach quickly, took the appropriate action and by reviewing all the relevant exam papers in detail we have ensured that all students who took the exam according to our rules will be issued a fair grade that reflects their work.”

He said Pearson was able to examine the phones of those interviewed about the leak, and police seized equipment from two people who were subsequently arrested. He added that Pearson hopes the police enquiry “will end in a criminal prosecution” for those responsible for the leak.

Hayley White, Pearson’s UK assessment director, defended the decision not to discount the two questions that were widely circulated on Twitter.
“Through our various levels of analysis we found that student performance on these questions was as expected and it wouldn’t be fair to disadvantage everyone by removing them,” she said. “In the limited instances where we discovered anomalies – for example students scoring particularly well on these questions versus the rest of the paper – we have taken these students out of any further statistical analysis that we used to determine the grade boundaries and we’ve had a closer look at their performance.” This year marked the third year in a row that questions from an Edexcel maths paper have been leaked online before the exam, despite Pearson’s efforts to strengthen its security process including introducing microchips to track when schools receive the papers."... For full post, please visit: https://feweek.co.uk/2019/08/09/edexcel-maths-a-level-leak-bigger-than-first-thought-admits-pearson/

By Michelle Davis

"A disruption to Internet access at the site of a Kansas-based assessment provider delayed testing of students across the country and caused Alaska to cancel state assessments altogether this school year.

A backhoe used in construction work at the University of Kansas on the afternoon of March 29 accidentally cut a fiber optic cable providing the campus digital connection. Servers at the university's Center for Educational Testing & Evaluation, which provides state assessments for students in Kansas and Alaska, went down.

The stoppage meant students in those states taking CETE tests could not finish or begin testing. And students in 15 other states, in addition to Kansas and Alaska, which use the CETE's Dynamic Learning Maps to assess students with significant cognitive disabilities, were also unable to access the tests.

"The testing platform ... went down," said Marianne Perie, the director of CETE, who said the signal was severed at the main trunk line bringing Internet to the campus. "It was about the worst place you could cut a line." Students who were testing at the time in Kansas, where the assessment window had recently opened, received pop-up messages saying their machines were no longer connected to the Internet.

The system automatically saves all test answers students have provided, except for the question a student is working on when the outage takes place, Perie said.

The university worked quickly to patch the cable, and testing resumed, with limited capacity, the following day, Perie said. On March 31, CETE told states they could return to normal testing, but the system was overloaded and went down again, staying down while officials worked on it through the weekend. This week testing resumed and was back to normal with 21,000 students testing simultaneously with no difficulties, she said.

However, Alaska's interim state education department commissioner Susan McCauley announced on April 1 that the state would cancel CETE's Alaska Measures of Progress testing for all students this academic year, despite the fact that Alaska's testing window had just opened.

McCauley said the unreliability of the system (being told it was back online only to have it crash again) and considerations unique to Alaska prompted her decision to discontinue testing for the year. "The amount of chaos in Alaska schools last week cannot be overstated," she said in an interview this week, adding that teachers had to scramble to create lessons when they thought testing was to take place instead. "To ask teachers and students to 'try it again' with no guarantee that it was going to work was irresponsible." For the full post, see: http://blogs.edweek.org/edweek/DigitalEducation/2016/04/technical_disruptions_delay_te.html

"Nearly 10,000 tests were scored incorrectly in Tennessee, marking the second time in the last few years that the state has had major problems with its standardized assessment.

According to the Commercial Appeal, 9,400 of the 600,000 TNReady tests given in 2016-17 were scored incorrectly. About 1,700 of those mistakes affected whether students were deemed proficient on the test, the newspaper reports.

State department of education spokeswoman Sara Gast said the errors don't affect statewide results. They occurred because Questar, the company that administers and scores the test, didn't update its scanning software, the Commercial Appeal reports. Questar has now rescored all the incorrectly scored exams, Gast said.

The mistakes affected 70 schools in 33 districts, Chalkbeat Tennessee reports.

"I don't think they can write it off and say it was just a few students," Shelby County Schools board member Chris Caldwell told Chalkbeat. "They owe it to every student to get to the bottom of it and correct anything that needs to be corrected." The mistake also affects teachers, since student test scores factor into their evaluations, Chalkbeat reports. The Tennessee Education Association issued a statement saying it would be looking into testing mistakes it's heard about from teachers, from mistakes in the instruction booklets to "huge shifts" in the state's projections for teacher evaluations.

"This makes the fourth year in a row where major problems have surfaced in a system where there are a lot of high-stakes consequences for students, teachers, and schools based on test scores," TEA spokesman Jim Wrye said. "How do we know this is the full extent of the problem?" In its own statement, Questar apologized for the error. The company "takes responsibility for and apologizes for this scoring error," Chief Operating Officer Brad Baumgartner said. "We are putting in additional steps in our processes to prevent any future occurrence. We are in the process of producing revised reports and committed to doing so as quickly as possible."

Tennessee had trouble with TNReady with a previous vendor, also. It fired Measurement Inc. after that company botched spring 2016 testing. The state hired Questar on a two-year contract last summer."... For full post, see: http://blogs.edweek.org/edweek/high_school_and_beyond/2017/10/thousands_of_tests_scored_incorrectly_in_tennessee.html

By Joseph Spector, Albany Bureau Chief

"Albany -- The company administering state tests Wednesday may be the one getting the bad grades from schools. Questar Assessment Inc., which provides computer-based testing to New York schools, failed in its ability to get the exams to nearly 300 districts on Wednesday — the first day of English exams in grades 3-8. "Questar has been working to resolve this as quickly as possible," the state Education Department said in a statement. "We have been in constant contact with schools and reminded them that there is flexibility built into the test schedule."

More than 1 million students are eligible to take the English exams that were initially developed under the Common Core standards. And more districts than ever were hoping to use computer-based testing instead of paper exams. Last year, 184 districts had signed up to offer computer tests. Some of the computer testing started Tuesday in select districts, but when the tests went more widespread Wednesday, the site appeared to crash. The state Education Department stressed that districts have flexibility in giving the exams. "At their discretion, schools are able to postpone this morning’s testing and resume testing later today or on another day," the department said."

For the full post, see: https://www.democratandchronicle.com/story/news/politics/albany/2018/04/11/computer-problems-delay-english-tests-across-new-york/507479002/

