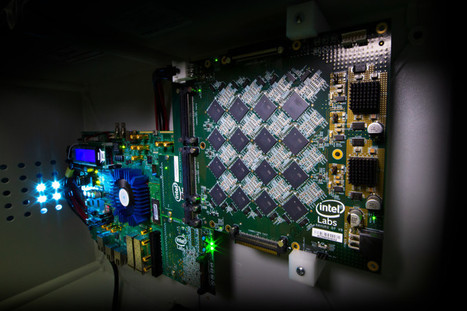

At DARPA's Electronics Resurgence Initiative 2019 summit in Michigan, Intel introduced a new neuromorphic computer capable of simulating 8 million neurons.

Neuromorphic engineering, also known as neuromorphic computing, describes the use of systems containing electronic analog circuits to mimic neuro-biological architectures present in the nervous system. Scientists at MIT, Purdue, Stanford, IBM, HP, and elsewhere have pioneered pieces of full-stack systems, but arguably few have come closer than Intel to one of the longstanding goals of neuromorphic research: a supercomputer a thousand times more powerful than any today.

Case in point? Today, during the Defense Advanced Research Projects Agency’s (DARPA) Electronics Resurgence Initiative 2019 summit in Detroit, Michigan, Intel unveiled a system codenamed “Pohoiki Beach,” a 64-chip computer capable of simulating 8 million neurons in total. Intel Labs managing director Rich Uhlig said Pohoiki Beach will be made available to more than 60 research partners to “advance the field” and scale up AI algorithms like sparse coding and path planning.

“We are impressed with the early results demonstrated as we scale Loihi to create more powerful neuromorphic systems. Pohoiki Beach will now be available to more than 60 ecosystem partners, who will use this specialized system to solve complex, compute-intensive problems,” said Uhlig.

Pohoiki Beach packs 64 Loihi neuromorphic chips, each a 128-core, 14-nanometer part. Loihi was first announced in 2017 and detailed at the 2018 Neuro Inspired Computational Elements (NICE) workshop in Oregon. Each chip has a 60-square-millimeter die and contains over 2 billion transistors, 130,000 artificial neurons, and 130 million synapses, in addition to three managing Lakemont cores for task orchestration.

Uniquely, Loihi features a programmable microcode learning engine for on-chip training of asynchronous spiking neural networks (SNNs), AI models that incorporate time into their operating model so that components of the model don’t process input data simultaneously. This design supports adaptive, self-modifying, event-driven, and fine-grained parallel computation with high efficiency.
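
To make the role of time in a spiking model more concrete, here is a minimal sketch of a single leaky integrate-and-fire neuron in plain Python. This is a generic textbook model, not Loihi's actual neuron model or any Intel API. The function name `simulate_lif` and the parameters `threshold`, `leak`, and `reset` are assumptions chosen for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Illustrative only: a generic model, not Loihi's neuron implementation.
    Returns the list of time steps at which the neuron spiked.
    """
    potential = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak: the stored potential decays, then the new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            # Event: emit a spike and reset the membrane potential.
            spike_times.append(t)
            potential = reset
    return spike_times


# Same total input, delivered with different timing.
burst = [0.6, 0.6, 0.0, 0.0, 0.0, 0.0]
spread = [0.6, 0.0, 0.0, 0.0, 0.0, 0.6]

print(simulate_lif(burst))   # the two inputs arrive close together
print(simulate_lif(spread))  # the first input has mostly leaked away
```

With these assumed parameters, the closely spaced inputs push the neuron over its threshold while the widely spaced ones do not, even though the total input is identical. That timing sensitivity is what separates spiking models from networks that process all inputs in a single synchronous pass.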

Via Philippe J DEWOST
