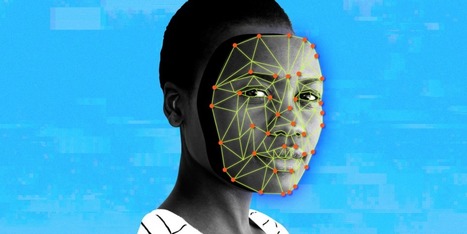

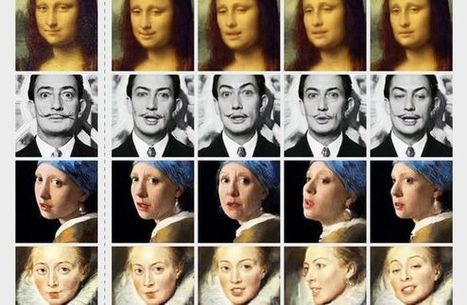

In November 2017, a Reddit account called deepfakes posted pornographic clips made with software that pasted the faces of Hollywood actresses over those of the real performers. Nearly two years later, deepfake is a generic noun for video manipulated or fabricated with artificial intelligence software. The technique has drawn laughs on YouTube, along with concern from lawmakers fearful of political disinformation. Yet a new report that tracked the deepfakes circulating online finds they mostly remain true to their salacious roots.

Startup Deeptrace took a kind of deepfake census during June and July to inform its work on detection tools it hopes to sell to news organizations and online platforms. It found almost 15,000 videos openly presented as deepfakes—nearly twice as many as seven months earlier. Some 96 percent of the deepfakes circulating in the wild were pornographic, Deeptrace says.


News, reviews, and resources for AI, iTech, MakerEd, coding, and more.

Curated by

John Evans
