|

Scooped by

nrip

|

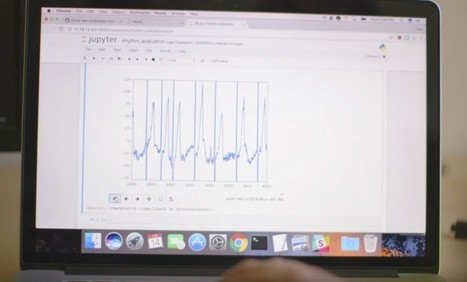

Anticipating the risk of gastrointestinal bleeding (GIB) when initiating antithrombotic treatment (oral antiplatelets or anticoagulants) is hampered by the limitations of existing risk prediction models. Machine learning algorithms may yield superior predictive models to aid clinical decision-making.

Objective: To compare the performance of 3 machine learning approaches with the commonly used HAS-BLED (hypertension, abnormal kidney and liver function, stroke, bleeding, labile international normalized ratio, older age, and drug or alcohol use) risk score in predicting antithrombotic-related GIB.

Design, setting, and participants: This retrospective cross-sectional study used data from the OptumLabs Data Warehouse, which contains medical and pharmacy claims on privately insured patients and Medicare Advantage enrollees in the US. The study cohort included patients 18 years or older with a history of atrial fibrillation, ischemic heart disease, or venous thromboembolism who were prescribed oral anticoagulant and/or thienopyridine antiplatelet agents between January 1, 2016, and December 31, 2019.

Conclusions and relevance: In this cross-sectional study, the machine learning models examined showed similar performance in identifying patients at high risk for GIB after being prescribed antithrombotic agents. Two models (RegCox and XGBoost) performed modestly better than the HAS-BLED score. A prospective evaluation of the RegCox model compared with HAS-BLED may provide a better understanding of the clinical impact of improved performance.

Link to the original investigation paper: https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2780274

Read the PubMed article at https://pubmed.ncbi.nlm.nih.gov/34019087/
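The HAS-BLED baseline that the machine learning models were compared against is a simple additive point score: one point per component, for a maximum of 9. The sketch below is only an illustration of that score, not of the study's RegCox or XGBoost models. The field names are hypothetical, each clinical definition (for example, what counts as "abnormal renal function") is simplified to a boolean, and the high-risk cutoff of 3 is the commonly used convention rather than a value taken from this study.

```python
from dataclasses import dataclass


@dataclass
class PatientFactors:
    """Hypothetical per-patient inputs, one field per HAS-BLED component."""
    hypertension: bool
    abnormal_renal_function: bool
    abnormal_liver_function: bool
    stroke_history: bool
    bleeding_history: bool
    labile_inr: bool
    age: int
    antiplatelet_or_nsaid_use: bool  # "Drugs" component
    alcohol_use: bool


def has_bled_score(p: PatientFactors) -> int:
    """Add one point for each HAS-BLED component that is present (max 9)."""
    components = [
        p.hypertension,
        p.abnormal_renal_function,
        p.abnormal_liver_function,
        p.stroke_history,
        p.bleeding_history,
        p.labile_inr,
        p.age > 65,  # "Elderly" component
        p.antiplatelet_or_nsaid_use,
        p.alcohol_use,
    ]
    return sum(int(c) for c in components)


def is_high_risk(score: int, threshold: int = 3) -> bool:
    """Assumed convention: a score of 3 or more flags high bleeding risk."""
    return score >= threshold


example = PatientFactors(
    hypertension=True, abnormal_renal_function=False, abnormal_liver_function=False,
    stroke_history=False, bleeding_history=False, labile_inr=False,
    age=72, antiplatelet_or_nsaid_use=True, alcohol_use=False,
)
score = has_bled_score(example)
print(score, is_high_risk(score))  # 3 True
```

Because every component carries the same weight, a score like this cannot capture interactions or differing effect sizes, which is one reason learned models such as a regularized Cox regression might discriminate somewhat better.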

|

Scooped by

nrip

|

Numerous studies demonstrate frequent mutations in the genome of SARS-CoV-2. Our goal was to statistically link mutations to severe disease outcomes. We found that automated machine learning, such as the method of Tsamardinos and coworkers used here, is a versatile and effective tool to find salient features in large and noisy databases, such as the fast-growing collection of SARS-CoV-2 genomes. In this work we used machine learning techniques to select mutation signatures associated with severe SARS-CoV-2 infections. We grouped patients into 2 major categories (“mild” and “severe”) by grouping the 179 outcome designations in the GISAID database. A protocol combining logistic regression and feature selection algorithms revealed that mutation signatures of about twenty mutations can be used to separate the two groups. The mutation signature is in good agreement with the variants well known from previous genome sequencing studies, including Spike protein variants V1176F and S477N that co-occur with D614G mutations and account for a large proportion of fast-spreading SARS-CoV-2 variants. UTR mutations were also selected as part of the best mutation signatures. The mutations identified here are also part of previous, statistically derived mutation profiles. An online prediction platform was set up that can assign a probabilistic measure of infection severity to SARS-CoV-2 sequences, including a qualitative index of the strength of the diagnosis. The data confirm that machine learning methods can be conveniently used to select genomic mutations associated with disease severity, but one has to be cautious that such statistical associations – like common sequence signatures, or marker fingerprints in general – are by no means causal relations, unless confirmed by experiments. We plan to update the prediction server at regular intervals.
While this project was underway, more than 100,000 sequences were deposited in public databases, and, importantly, new variants emerged in the UK and in South Africa that are not yet included in the current datasets. In addition to mutations, we also plan to include insertions and deletions, which will hopefully further improve the predictive power of the server. The study was funded by the Hungarian Ministry for Innovation and Technology (MIT), within the framework of the Bionic thematic programme of Semmelweis University. Read the entire study at https://www.biorxiv.org/content/10.1101/2021.04.01.438063v1.full

Access the online portal mentioned above at https://covidoutcome.com/
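The "feature selection plus logistic regression" idea can be sketched with scikit-learn on a binary genome-by-mutation matrix. This is not the automated (Tsamardinos et al.) pipeline the authors used: the data are synthetic, the chi-squared filter is a swapped-in selection method, and the choice of keeping 2 features (`k=2`) is an assumption made so the planted signal is easy to see. Each row stands for one genome, each column for one candidate mutation (1 = present), and the label is 0 for "mild" and 1 for "severe".

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)

# Synthetic data (hypothetical): 500 genomes x 50 candidate mutations.
n_genomes, n_mutations = 500, 50
X = rng.integers(0, 2, size=(n_genomes, n_mutations))

# Planted signal: "severe" (1) if mutation 0 or mutation 1 is present,
# with about 5% of labels flipped to mimic noisy outcome annotations.
y = ((X[:, 0] + X[:, 1]) >= 1).astype(int)
flip = rng.random(n_genomes) < 0.05
y[flip] = 1 - y[flip]

# Filter-style feature selection followed by a logistic regression classifier.
model = make_pipeline(SelectKBest(chi2, k=2), LogisticRegression())
model.fit(X, y)

# Indices of the mutations kept as the "signature".
selected = np.flatnonzero(model.named_steps["selectkbest"].get_support())
print("selected mutation indices:", selected)

# Probability of a "severe" outcome for a genome carrying both signature
# mutations versus one carrying none of the candidate mutations.
carrier = np.zeros((1, n_mutations), dtype=int)
carrier[0, [0, 1]] = 1
non_carrier = np.zeros((1, n_mutations), dtype=int)
p_carrier = model.predict_proba(carrier)[0, 1]
p_non_carrier = model.predict_proba(non_carrier)[0, 1]
print(f"P(severe | carrier) = {p_carrier:.2f}, P(severe | non-carrier) = {p_non_carrier:.2f}")
```

As the authors caution, a recovered signature like this is a statistical association only; here the link is causal solely because the synthetic labels were built that way.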

|

Scooped by

nrip

|

E-health has proven to have many benefits, including fewer errors in medical diagnosis.

A number of machine learning (ML) techniques have been applied in medical diagnosis, each having its benefits and disadvantages.

With its powerful pre-built libraries, Python is great for implementing machine learning in the medical field, where many people do not have an Artificial Intelligence background.

This talk will focus on applying ML on medical datasets using Scikit-learn, a Python module that comes packed with various machine learning algorithms. It will be structured as follows:

- An introduction to e-health.

- Types of medical data.

- Some benchmark algorithms used in medical diagnosis: Decision trees, K-Nearest Neighbours, Naive Bayes and Support Vector Machines.

- How to implement benchmark algorithms using Scikit-learn.

- Performance evaluation metrics used in e-health.
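As an illustration of the outline above, the four benchmark algorithms can be trained and scored with scikit-learn in a few lines. This sketch is not taken from the talk's deck: it assumes the Wisconsin diagnostic breast cancer dataset bundled with scikit-learn (no download needed), a single stratified 75/25 split, and default hyperparameters. Sensitivity and specificity, two metrics common in medical diagnosis, are computed as the recall of the malignant and benign classes respectively.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# In this dataset, label 0 = malignant and label 1 = benign.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Distance- and margin-based models (KNN, SVM) are wrapped with feature scaling.
models = {
    "Decision tree": DecisionTreeClassifier(random_state=42),
    "K-nearest neighbours": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "Naive Bayes": GaussianNB(),
    "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    results[name] = {
        "accuracy": accuracy_score(y_test, y_pred),
        # Malignant (0) is treated as the positive (disease) class.
        "sensitivity": recall_score(y_test, y_pred, pos_label=0),
        # Specificity = recall of the negative (benign) class.
        "specificity": recall_score(y_test, y_pred, pos_label=1),
    }

for name, m in results.items():
    print(f"{name:22s} acc={m['accuracy']:.3f} "
          f"sens={m['sensitivity']:.3f} spec={m['specificity']:.3f}")
```

A single split gives a noisy estimate; cross-validation (for example `cross_val_score`) would be the more careful way to compare the four models.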

This talk is aimed at people interested in real-life applications of machine learning using Python. Although centered around ML in medicine, the acquired skills can be extended to other fields.

About the speaker: Diana Pholo is a PhD student and lecturer in the department of Computer Systems Engineering, at the Tshwane University of Technology.

Here is her LinkedIn profile: https://za.linkedin.com/in/diana-pholo-76ba803b

Access the deck and the original article at https://speakerdeck.com/pyconza/python-as-a-tool-for-e-health-systems-by-diana-pholo

|

Scooped by

nrip

|

DeepMind will use data from a new partnership with Jikei University Hospital in Japan to refine its artificial intelligence (AI) breast cancer detection algorithms. The London-based Google subsidiary, which is furthering its cancer research efforts with the partnership, said it has been given access to mammograms from roughly 30,000 women that were taken at Jikei University Hospital in Tokyo between 2007 and 2018. Over the next five years, DeepMind researchers will review these 30,000 images, along with 3,500 images from magnetic resonance imaging (MRI) scans and historical mammograms provided by the U.K.’s Optimam (an image database of over 80,000 scans extracted from the NHS’s National Breast Screening System), to investigate whether its AI systems can accurately spot signs of cancerous tissue.

|

Scooped by

nrip

|

It might not be long before algorithms routinely save lives, as long as doctors are willing to put ever more trust in machines. An algorithm that spots heart arrhythmias shows how AI will revolutionize medicine, but patients must trust machines with their lives. A team of researchers at Stanford University, led by Andrew Ng, a prominent AI researcher and an adjunct professor there, has shown that a machine-learning model can identify heart arrhythmias from an electrocardiogram (ECG) better than an expert. The automated approach could prove important to everyday medical treatment by making the diagnosis of potentially deadly heartbeat irregularities more reliable. It could also make quality care more readily available in areas where resources are scarce. The work is also the latest sign that machine learning is likely to revolutionize medicine. In recent years, researchers have shown that machine-learning techniques can spot all sorts of ailments from medical images, including breast cancer, skin cancer, and eye disease. More at: https://www.technologyreview.com/s/608234/the-machines-are-getting-ready-to-play-doctor/

|

Scooped by

nrip

|

Since the early years of artificial intelligence, scientists have dreamed of creating computers that can “see” the world. As vision plays a key role in many things we do every day, cracking the code of computer vision seemed to be one of the major steps toward developing artificial general intelligence. But like many other goals in AI, computer vision has proven easier said than done. In recent decades, advances in machine learning and neuroscience have helped make great strides in computer vision. But we still have a long way to go before we can build AI systems that see the world as we do. Biological and Computer Vision, a book by Harvard Medical School professor Gabriel Kreiman, provides an accessible account of how humans and animals process visual data and how far we’ve come toward replicating these functions in computers. Kreiman’s book helps readers understand the differences between biological and computer vision. The book details how billions of years of evolution have equipped us with a complicated visual processing system, and how studying it has helped inspire better computer vision algorithms. Kreiman also discusses what separates contemporary computer vision systems from their biological counterpart.

Hardware differences

Biological vision is the product of millions of years of evolution. There is no reason to reinvent the wheel when developing computational models. We can learn from how biology solves vision problems and use the solutions as inspiration to build better algorithms. Before being able to digitize vision, scientists had to overcome the huge hardware gap between biological and computer vision. Biological vision runs on an interconnected network of cortical cells and organic neurons. Computer vision, on the other hand, runs on electronic chips composed of transistors.

Architecture differences

There’s a mismatch between the high-level architecture of artificial neural networks and what we know about the mammalian visual cortex.

Goal differences

Several studies have shown that our visual system can dynamically tune its sensitivities to the common. Creating computer vision systems that have this kind of flexibility remains a major challenge, however. Current computer vision systems are designed to accomplish a single task.

Integration differences

In humans and animals, vision is closely related to the senses of smell, touch, and hearing. The visual, auditory, somatosensory, and olfactory cortices interact and pick up cues from each other to adjust their inferences of the world. In AI systems, on the other hand, each of these things exists separately. Read more at https://venturebeat.com/2021/05/15/understanding-the-differences-between-biological-and-computer-vision/

|

Scooped by

nrip

|

Two scientific leaps, in machine learning algorithms and in powerful biological imaging and sequencing tools, are increasingly being combined to spur progress in understanding diseases and to advance AI itself. Cutting-edge machine-learning techniques are increasingly being adapted and applied to biological data, including for COVID-19. Recently, researchers reported using a new technique to figure out how genes are expressed in individual cells and how those cells interact in people who had died with Alzheimer's disease. Machine-learning algorithms can also be used to compare gene expression in cells infected with SARS-CoV-2 with gene expression in cells treated with thousands of different drugs, in order to computationally predict drugs that might inhibit the virus. Algorithmic results alone don't prove the drugs are potent enough to be clinically effective, but they can help identify future targets for antivirals, or they could reveal a protein researchers didn't know was important for SARS-CoV-2, providing new insight into the biology of the virus. Read the original article, which covers a lot more, at https://www.axios.com/ai-machine-learning-biology-drug-development-b51d18f1-7487-400e-8e33-e6b72bd5cfad.html
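One common way to "compare the expression of genes in infected cells to cells treated with drugs" is signature reversal, in the spirit of Connectivity Map analyses: a drug whose expression changes run opposite to the infection's changes is a candidate for countering it. The article does not say which method the researchers used, so this is a deliberately simplified sketch. It scores drugs by Pearson correlation over just five genes, and all gene values and drug names are hypothetical; real pipelines use thousands of genes and rank-based enrichment statistics.

```python
import numpy as np

# Hypothetical log2 fold changes for five genes in SARS-CoV-2-infected cells.
infection_signature = np.array([2.0, 1.5, -1.0, -2.0, 0.5])

# Hypothetical drug-induced log2 fold changes for the same five genes.
drug_signatures = {
    "drug_A": np.array([-1.8, -1.2, 0.9, 2.1, -0.4]),  # roughly reverses infection
    "drug_B": np.array([1.0, 0.7, -0.6, -1.1, 0.3]),   # roughly mimics infection
    "drug_C": np.array([0.1, -0.2, 0.3, 0.0, 0.1]),    # weakly related
}


def rank_by_reversal(disease: np.ndarray, drugs: dict) -> list:
    """Rank drugs from most reversing (most negative correlation) to most mimicking."""
    scores = {
        name: float(np.corrcoef(disease, signature)[0, 1])
        for name, signature in drugs.items()
    }
    return sorted(scores.items(), key=lambda item: item[1])


ranking = rank_by_reversal(infection_signature, drug_signatures)
for name, r in ranking:
    print(f"{name}: r = {r:+.3f}")
```

As the article stresses, a strongly negative score is only a hypothesis generator; it says nothing about whether the drug is potent enough to be clinically effective.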

|

Scooped by

nrip

|

Algorithms based on machine learning and deep learning, intended for use in diagnostic imaging, are moving into the commercial pipeline. However, providers will have to overcome multiple challenges to incorporate these tools into daily clinical workflows in radiology. There are now numerous algorithms in various stages of development and in the FDA approval process, and experts believe that there could eventually be hundreds or even thousands of AI-based apps to improve the quality and efficiency of radiology. The emerging applications based on machine learning and deep learning primarily involve algorithms that automate radiology processes such as detecting abnormal structures in images, for example cancerous lesions and nodules. The technology can be used on a variety of modalities, such as CT scans and X-rays. The goal is to help radiologists detect and track the progression of diseases more effectively, giving them tools that enhance speed and accuracy, thus improving quality and reducing costs. While the number of organizations incorporating these products into daily workflows is small today, experts expect many providers to adopt these solutions as the industry overcomes implementation challenges.

Data dump

Radiologists’ growing appreciation for AI may result from the technology’s promise to help the profession cope with an explosion in the amount of data for each patient case. Radiologists also are grappling with the growth in data from sources outside radiology, such as lab tests or electronic medical records. This is another area where AI could help radiologists, by analyzing data from disparate sources and pulling out key pieces of information for each case. There are other issues that AI could address as well, such as “observer fatigue,” which is an “aspect of radiology practice and a particular issue in screening examinations where the likelihood of finding a true positive is low,” wrote researchers from Massachusetts General Hospital and Harvard Medical School in a 2018 article in the Journal of the American College of Radiology. These researchers foresee the utility of an AI program that could identify cases from routine screening exams with a likely positive result and prioritize those cases for radiologists’ attention. AI software also could help radiologists improve worklists of cases in which referring physicians already suspect that a medical problem exists. Read more at the original source: https://www.healthdatamanagement.com/news/algorithms-begin-to-show-practical-use-in-diagnostic-imaging
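The worklist prioritization the Massachusetts General Hospital researchers envision can be sketched as a reordering step: studies whose AI score suggests a likely positive finding jump to the front, highest score first, while the rest keep their original reading order. This is an illustrative assumption, not the program described in the article. The `Study` type, the score being a probability of a positive finding, the 0.5 cutoff, and the study IDs are all hypothetical.

```python
from typing import List, NamedTuple


class Study(NamedTuple):
    study_id: str
    ai_score: float  # hypothetical model output: estimated probability of a positive finding


def prioritize(worklist: List[Study], threshold: float = 0.5) -> List[Study]:
    """Move likely-positive studies to the front; keep the others in arrival order."""
    flagged = sorted(
        (s for s in worklist if s.ai_score >= threshold),
        key=lambda s: s.ai_score,
        reverse=True,
    )
    routine = [s for s in worklist if s.ai_score < threshold]  # original order preserved
    return flagged + routine


worklist = [
    Study("S1", 0.02),
    Study("S2", 0.81),
    Study("S3", 0.10),
    Study("S4", 0.64),
]
print([s.study_id for s in prioritize(worklist)])  # ['S2', 'S4', 'S1', 'S3']
```

Keeping the low-score studies in arrival order, rather than sorting everything by score, means the model only promotes suspicious cases and never pushes a routine study indefinitely down the queue.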

|

Scooped by

nrip

|

Olive automates repetitive tasks and can match patients across databases at different hospitals.

When Sean Lane, a former NSA operative who served five tours of duty in Afghanistan and Iraq, first entered the health care AI arena, he was overwhelmed by data silos, systems that don’t speak to each other, and many, many portals and screens. “I was not going to create another screen,” Lane told a packed room on Monday at ApplySci’s annual health technology conference at the MIT Media Lab in Cambridge, Mass. Instead, Lane and a team taught an AI system to use software that already exists in health care just as a human would use it. They named it Olive. “Olive loves all that crappy software that health care already has,” said Lane. “Olive can look at any software program, any application for the first time she’s ever seen it, and understand how to use it.” For example, Olive navigates electronic medical records, logs into hospital portals, creates reports, files insurance claims, and more. Olive does so thanks to three key traits. First, using computer vision and Robotic Process Automation, or RPA, the program can interact with any software interface just as a human would, opening browsers and typing. Second, machine learning enables Olive to make decisions the way human health care workers do. The team trained Olive with historical data on how health care workers perform digital tasks, such as how to file an insurance eligibility check for a patient seeking to undergo a procedure. Finally, Olive relies on a unique skill that Lane developed based on his work at the NSA identifying criminals across disparate government sources: the ability to match identities across databases. Just as NSA software can determine whether a terrorist in the CIA database is the same person as one in the Homeland Security database, Olive matches a patient across disparate databases and software, such as multiple electronic health record programs.
Read the full article at https://spectrum.ieee.org/the-human-os/computing/software/this-healthcare-ai-loves-crappy-software
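Matching a patient across disparate record systems is a record linkage problem. The article does not describe how Olive does it, so the sketch below is a generic, deliberately simple approach: require an exact date-of-birth match, normalize names (case, punctuation, token order), and accept the best fuzzy name match above a threshold using Python's standard `difflib.SequenceMatcher`. The record fields, IDs, and the 0.85 threshold are hypothetical; production systems typically use probabilistic linkage over many more fields.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Tuple


@dataclass(frozen=True)
class PatientRecord:
    source: str      # hypothetical source system, e.g. one hospital's EHR
    record_id: str
    name: str
    birth_date: str  # ISO format, YYYY-MM-DD


def normalize(name: str) -> str:
    """Lowercase, replace punctuation with spaces, and sort tokens so 'Doe, Jane' == 'Jane Doe'."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower())
    return " ".join(sorted(cleaned.split()))


def match_score(a: PatientRecord, b: PatientRecord) -> float:
    """0.0 if birth dates differ, otherwise the similarity ratio of normalized names."""
    if a.birth_date != b.birth_date:
        return 0.0
    return SequenceMatcher(None, normalize(a.name), normalize(b.name)).ratio()


def link_records(left: List[PatientRecord], right: List[PatientRecord],
                 threshold: float = 0.85) -> List[Tuple[str, str]]:
    """For each record in `left`, link it to its best match in `right` if the score is high enough."""
    links = []
    for a in left:
        best = max(right, key=lambda b: match_score(a, b), default=None)
        if best is not None and match_score(a, best) >= threshold:
            links.append((a.record_id, best.record_id))
    return links


hospital_a = [
    PatientRecord("EHR-A", "A-001", "Jane Q. Doe", "1980-04-12"),
    PatientRecord("EHR-A", "A-002", "John Smith", "1975-09-30"),
]
hospital_b = [
    PatientRecord("EHR-B", "B-917", "DOE, JANE Q", "1980-04-12"),
    PatientRecord("EHR-B", "B-318", "Jon Smith", "1975-09-30"),
    PatientRecord("EHR-B", "B-500", "John Smith", "1991-01-01"),
]
print(link_records(hospital_a, hospital_b))  # [('A-001', 'B-917'), ('A-002', 'B-318')]
```

Note how the exact-name record B-500 is rejected because its birth date differs, while the misspelled "Jon Smith" is accepted; blocking on a reliable field before fuzzy comparison is a common design choice in record linkage.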

|
