|

Scooped by

nrip

|

Digital health tools, platforms, and artificial intelligence– or machine learning–based clinical decision support systems are increasingly part of health delivery approaches, with an ever-greater degree of system interaction. Critical to the successful deployment of these tools is their functional integration into existing clinical routines and workflows. This depends on system interoperability and on intuitive and safe user interface design. It is extremely important that research and efforts are directed towards minimizing emergent workflow stress and ensuring purposeful design for integration. Usability of tools in practice is as important as algorithm quality. Regulatory and health technology assessment frameworks recognize the importance of these factors to a certain extent, but their focus remains mainly on the individual product rather than on emergent system and workflow effects. The measurement of performance and user experience has so far been performed in ad hoc, nonstandardized ways by individual actors using their own evaluation approaches. This paper proposes that a standard framework for system-level and holistic evaluation be built into interacting digital systems to enable systematic and standardized system-wide, multiproduct, postmarket surveillance and technology assessment. Such a system could be made available to developers through regulatory or assessment bodies as an application programming interface and could be a requirement for digital tool certification, just as interoperability is. This would enable health systems and tool developers to collect system-level data directly from real device use cases, enabling the controlled and safe delivery of systematic quality assessment or improvement studies suitable for the complexity and interconnectedness of clinical workflows using developing digital health technologies. read the entire paper at https://www.jmir.org/2023/1/e50158

|

Scooped by

nrip

|

A new diagnostic technique that has the potential to identify opioid-addicted patients at risk for relapse could lead to better treatment and outcomes. Using an algorithm that looks for patterns in brain structure and functional connectivity, researchers were able to distinguish prescription opioid users from healthy participants. If treatment is successful, their brains will resemble the brain of someone not addicted to opioids. “People can say one thing, but brain patterns do not lie,” says lead researcher Suchismita Ray, an associate professor in the health informatics department at Rutgers School of Health Professions. “The brain patterns that the algorithm identified from brain volume and functional connectivity biomarkers from prescription opioid users hold great promise to improve over current diagnosis.” In the study in NeuroImage: Clinical, Ray and her colleagues used MRIs to look at the brain structure and function in people diagnosed with prescription opioid use disorder who were seeking treatment compared to individuals with no history of using opioids. The scans looked at the brain network believed to be responsible for drug cravings and compulsive drug use. At the completion of treatment, if this brain network remains unchanged, the patient needs more treatment. read the study at https://doi.org/10.1016/j.nicl.2021.102663 read the article at https://www.futurity.org/opioid-addiction-relapse-algorithm-2586182-2/

|

Scooped by

nrip

|

Improving the management of unstructured content is crucial when it comes to creating successful healthcare platforms. Clinicians can build better pictures of their patients when they use healthcare platforms which harness unstructured content, as well as structured content, to create a single source of clinical information. Analysts estimate that as much as 80 per cent of patient information is currently unstructured and is not contained in Electronic Health Records (EHRs). This means that vital clinical information, such as paper records or medical imaging, which does not tick specific boxes, can be overlooked. to read more, visit https://www.healthcareitnews.com/news/apac/improving-healthcare-better-managing-unstructured-content

|

Scooped by

nrip

|

“ATMAN AI”, an artificial intelligence algorithm that can detect the presence of COVID-19 in chest X-rays, has been developed to help reduce COVID-19 fatalities involving the lungs. ATMAN AI is used for chest X-ray screening as a triaging tool in COVID-19 diagnosis, a method for rapid identification and assessment of lung involvement. This is a joint effort of the DRDO Centre for Artificial Intelligence and Robotics (CAIR), 5C Network and HCG Academics. It will be used across India by the online diagnostic startup 5C Network, with the support of HCG Academics. Triaging suspected COVID-19 patients using X-rays is fast, cost-effective and efficient. It can be especially useful in smaller towns in India, where CT scans are not easily accessible. It will also reduce the existing burden on radiologists and free up CT machines, currently overloaded with COVID-19 scans, for other diseases and illnesses. A novel feature called “Believable AI”, used along with existing ResNet models, has improved the accuracy of the software and, as it is a machine learning tool, the accuracy will improve continually. Chest X-rays of RT-PCR-positive hospitalized patients in various stages of disease involvement were retrospectively analysed using deep learning and convolutional neural network models in an indigenously developed deep learning application by CAIR-DRDO for COVID-19 screening using digital chest X-rays. The algorithm showed an accuracy of 96.73%. read more at http://indiaai.gov.in/news/drdo-cair-5g-network-and-hcg-academics-develop-atman-ai

|

Scooped by

nrip

|

Since the start of the pandemic, new technologies have been developed to help reduce the spread of the infection. Some of the most common safety measures today include measuring a person’s temperature, covering your nose and mouth with a mask, contact tracing, disinfection, and social distancing. Many businesses have adopted various technologies, including AI-powered ones, to help them adhere to COVID-19 safety measures. For example, numerous airlines, hotels, subways, shopping malls, and other institutions are already using thermal cameras to measure an individual’s temperature before allowing entry. Public transport in France, in turn, relies on AI-based surveillance cameras to monitor whether people are social distancing or wearing masks. Another example is the required download of contact-tracing apps delivered by governments across the globe.

However, while many of these solutions help to ensure that COVID-19 prevention practices are observed, many of them have flaws or limits. In this article, we will cover some of the issues creating obstacles to fighting the pandemic.

- Issue #1. Manual temperature scanning is tricky
- Issue #2. Monitoring crowds is even more complex
- Issue #3. Contact tracing leads to privacy concerns
- Issue #4. UV rays harm eyes and skin
- Issue #5. UVC robots are extremely expensive
- Issue #6. No integration, no compliance, no transparency

Regardless of the safety measures in place and existing issues, innovations are already playing a vital role in the fight against COVID-19. By improving on existing technology, we can make everyone safer as we all adjust to the new normal. read the details at https://www.altoros.com/blog/whats-wrong-with-ai-tools-and-devices-preventing-covid-19/

|

Scooped by

nrip

|

There is a seriousness, almost an urgency, within the healthcare ecosystem about adopting digital technologies more openly than in the pre-COVID era. Since we have always talked about the importance of taking healthcare digital, this acceptance of digital technologies has impacted us tremendously and favourably. Plus91's Digital Health Systems have always been a few years too soon for the market, and COVID just fast-forwarded the world to use us right away.

What is your take on virtual methods of providing treatment?

All virtual treatment methods, whether TeleHealth, Remote Monitoring or Tele Pathology, are very much a necessity. COVID has simply brought them into the limelight and forced the world to adopt them quickly. I believe they all benefit healthcare immensely, and thus should be adopted wholeheartedly by doctors and patients. They offer a wider variety of options for both and will allow a far richer treatment mindset to develop in the coming years. Doctors benefit from being accessible to patients across the globe more easily and frequently, both for offering care and for 2nd/3rd opinions. This helps them acquire experience with a wider range of patients than those who come to them purely because of geographical viability. Patients benefit a lot as they can access doctors more easily, including doctors in a different part of the world who are experts at dealing with a specific condition, without having to bear the cost of travel.

What impact do you want to create in the medical field?

I want to make healthcare more holistic, error-free, and open. I believe that in the distant future we will be able to address the whole issue of disease, and mankind will be completely focused on health from a wellness perspective rather than a treatment perspective. And I want to be an integral part of that change. read the whole interview at: https://www.eatmy.news/2021/04/nrip-nihalani-i-want-to-make-healthcare.html

|

Scooped by

nrip

|

In recent times, researchers have increasingly found that the power of computers and artificial intelligence is enabling more accurate diagnosis of a patient's current heart health and can provide an accurate projection of future heart health, potential treatments and disease prevention. In a paper published in the European Heart Journal, researchers from King's College London show how linking computer and statistical models can improve clinical decisions relating to the heart. The research team is led by Dr. Pablo Lamata. In his statement he said: "We found that making appropriate clinical decisions is not only about data, but how to combine data with the knowledge that we have built up through years of research."

The Digital Twin

The team have coined the phrase "the Digital Twin" to describe this integration of the two models, a computerised version of our heart which represents human physiology and individual data. "The Digital Twin will shift treatment selection from being based on the state of the patient today to optimising the state of the patient tomorrow. The idea is that the electronic health record will be growing into a more detailed description of what we could call a digital avatar, a digital representation of how the heart is working. This could mean that a trip to the doctor's office could be a more digital experience."

In mechanistic models, researchers apply the laws of physics and maths to simulate how the heart will behave. In statistical models, researchers look at past data to see how the heart behaves in similar conditions and infer how it will behave over time. Models can pinpoint the most valuable piece of diagnostic data and can also reliably infer biomarkers that cannot be directly measured or that require invasive procedures. "It's like the weather: understanding better how it works, helps us to predict it. And with the heart, models will also help us to predict how better or worse it will get if we interfere with it."
read the original unedited article at https://medicalxpress.com/news/2020-03-digital-heart-future-health.html

|


Scooped by

nrip

|

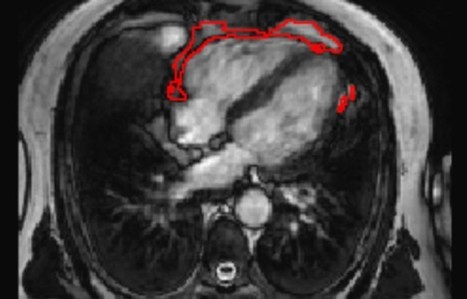

The distribution of fat in the body can influence a person's risk of developing various diseases. The commonly used measure of body mass index (BMI) mostly reflects fat accumulation under the skin, rather than around the internal organs. In particular, there are suggestions that fat accumulation around the heart may be a predictor of heart disease, and has been linked to a range of conditions, including atrial fibrillation, diabetes, and coronary artery disease. A team led by researchers from Queen Mary University of London has developed a new artificial intelligence (AI) tool that is able to automatically measure the amount of fat around the heart from MRI scan images. Using the new tool, the team was able to show that a larger amount of fat around the heart is associated with significantly greater odds of diabetes, independent of a person's age, sex, and body mass index. The research team invented an AI tool that can be applied to standard heart MRI scans to obtain a measure of the fat around the heart automatically and quickly, in under three seconds. This tool can be used by future researchers to discover more about the links between the fat around the heart and disease risk, but also potentially in the future, as part of a patient's standard care in hospital. The research team tested the AI algorithm's ability to interpret images from heart MRI scans of more than 45,000 people, including participants in the UK Biobank, a database of health information from over half a million participants from across the UK. 
The team found that the AI tool was able to accurately determine the amount of fat around the heart in those images, and it was also able to calculate a patient's risk of diabetes. read the research published at https://www.frontiersin.org/articles/10.3389/fcvm.2021.677574/full read more at https://www.sciencedaily.com/releases/2021/07/210707112427.htm also at the QMUL website https://www.qmul.ac.uk/media/news/2021/smd/ai-predicts-diabetes-risk-by-measuring-fat-around-the-heart-.html

|

Scooped by

nrip

|

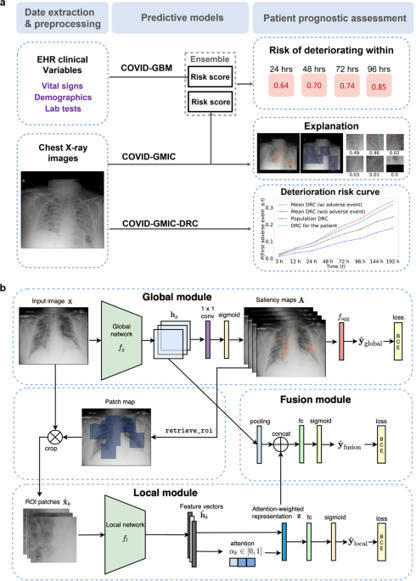

During the coronavirus disease 2019 (COVID-19) pandemic, rapid and accurate triage of patients at the emergency department is critical to inform decision-making. We propose a data-driven approach for automatic prediction of deterioration risk using a deep neural network that learns from chest X-ray images and a gradient boosting model that learns from routine clinical variables. Our AI prognosis system, trained using data from 3661 patients, achieves an area under the receiver operating characteristic curve (AUC) of 0.786 (95% CI: 0.745–0.830) when predicting deterioration within 96 hours. The deep neural network extracts informative areas of chest X-ray images to assist clinicians in interpreting the predictions and performs comparably to two radiologists in a reader study. In order to verify performance in a real clinical setting, we silently deployed a preliminary version of the deep neural network at New York University Langone Health during the first wave of the pandemic, which produced accurate predictions in real-time. In summary, our findings demonstrate the potential of the proposed system for assisting front-line physicians in the triage of COVID-19 patients. read the open article at https://www.nature.com/articles/s41746-021-00453-0

|

Scooped by

nrip

|

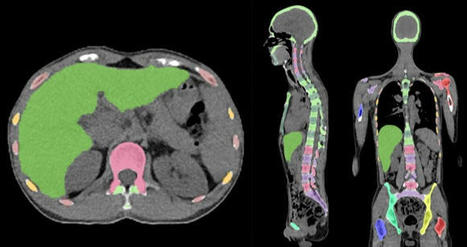

Skeleton/bone marrow involvement in patients with newly diagnosed Hodgkin’s lymphoma (HL) is an important predictor of adverse outcomes. Studies show that FDG-PET/CT upstages patients with uni- or multifocal skeleton/bone marrow uptake (BMU) when iliac crest bone marrow biopsy fails to find evidence of histology-proven involvement. The general recommendation is, therefore, that bone marrow biopsy can be avoided when FDG-PET/CT is performed at staging. Our aim was to develop an AI-based method for the detection of focal skeleton/BMU and quantification of diffuse BMU in patients with HL undergoing staging with FDG-PET/CT. The output of the AI-based method in a separate test set was compared to the image interpretation of ten physicians from different hospitals. Finally, the AI-based quantification of diffuse BMU was compared to manual quantification.

Artificial intelligence-based classification

A convolutional neural network (CNN) was used to segment the skeletal anatomy. Based on this CNN, the bone marrow was defined by excluding the edges from each individual bone; more precisely, 7 mm was excluded from the humeri and femora, 5 mm was excluded from the vertebrae and hip bones, and 3 mm was excluded from the remaining bones.

Focal skeleton/bone marrow uptake

The basic idea behind our approach is that the distribution of non-focal BMU has a light tail and most pixels will have an uptake reasonably close to the average. There will still be variations between different bones. Most importantly, we found that certain bones were much more likely to have diffuse BMU than others. Hence, we cannot use the same threshold for focal uptake in all bones. At the other end, treating each bone individually is too susceptible to noise. As a compromise, we chose to divide the bones into two groups:

- “spine”: defined as the vertebrae, sacrum, and coccyx as well as regions in the hip bones within 50 mm from these locations, i.e., including the sacroiliac joints.
- “other bones”: defined as the humeri, scapulae, clavicles, ribs, sternum, femora, and the remaining parts of the hip bones.

For each group, the focal standardized uptake values (SUVs) were quantified using the following steps:

1. Threshold computation. A threshold (THR) was computed using the mean and standard deviation (SD) of the SUV inside the bone marrow. The threshold was set to

2. Abnormal bone region. The abnormal bone region was defined in the following way: Only the pixels segmented as bone and where SUV > THR were considered. To reduce the issues of PET/CT misalignment and spill over, a watershed transform was used to assign each of these pixels to a local maximum in the PET image. If this maximum was outside the bone mask, the uptake was assumed to be leaking into the bone from other tissues and was removed. Finally, uptake regions smaller than 0.1 mL were removed.

3. Abnormal bone SUV quantification. The mean squared abnormal uptake (MSAU) was first calculated as

$\mathrm{MSAU} = \operatorname{mean}\left[(\mathrm{SUV} - \mathrm{THR})^2\right]$ over the abnormal bone region.

To quantify the abnormal uptake, we used the total squared abnormal uptake (TSAU), rather than the more common total lesion glycolysis (TLG). We believe TLG tends to overestimate the severity of larger regions with moderate uptake. TSAU will assign a much smaller value to such lesions, reflecting the uncertainty that is often associated with their classification. Instead, TSAU will give a larger weight to small lesions with very high uptake. This reflects both the higher certainty with respect to their classification and the severity typically associated with very high uptake.

$\mathrm{TSAU} = \mathrm{MSAU} \times (\text{volume of the abnormal bone region})$.

This calculation leads to two TSAU values: one for the “spine” and one for the “other bones”. As the TSAU value can be nonzero even for patients without focal uptake, cut-off values were tuned using the training cohort. The AI method was adjusted in the training group to have a positive predictive value of 65% and a negative predictive value of 98%. For the “spine”, a cut-off of 0.5 was used, and for the “other bones”, a cut-off of 3.0 was used. If one of the TSAU values was higher than the corresponding cut-off, the patient was considered to have focal uptake.

Results

Focal uptake

Fourteen of the 48 cases were classified as having focal skeleton/BMU by the AI-based method. The majority of physicians classified 7/48 cases as positive and 41/48 cases as negative for having focal skeleton/BMU. The majority of the physicians agreed with the AI method in 39 of the 48 cases. Six of the seven positive cases (86%) identified by the majority of physicians were identified as positive by the AI method, while the seventh was classified as negative by the AI method and by three of the ten physicians. Thirty-three of the 41 negative cases (80%) identified by the majority of physicians were also classified as negative by the AI method. In seven of the remaining eight patients, 1–3 physicians (out of the ten total) classified the cases as having focal uptake, while in one of the eight cases none of the physicians classified it as having focal uptake. These findings indicate that the AI method has been developed towards high sensitivity, which is necessary to highlight suspicious uptake.

Conclusions

The present study demonstrates that an AI-based method can be developed to highlight suspicious focal skeleton/BMU in HL patients staged with FDG-PET/CT. This AI-based method can also objectively provide results regarding high versus low BMU by calculating the SUVmedian value in the whole spine marrow and the liver. Additionally, the study also demonstrated that inter-observer agreement regarding both focal and diffuse BMU is moderate among nuclear medicine physicians with varying levels of experience working at different hospitals. Finally, our results show that the automated method regarding diffuse BMU is comparable to the manual ROI method. read the original paper at https://www.nature.com/articles/s41598-021-89656-9
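The MSAU/TSAU quantification described in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the threshold THR is passed in as an input because its formula is not given in the excerpt, the per-voxel volume and the function names `tsau`, `tlg` and `has_focal_uptake` are hypothetical, and the segmentation, watershed and 0.1 mL filtering steps are assumed to have already been applied.

```python
from statistics import fmean

# Cut-offs reported in the excerpt for the two bone groups.
CUTOFFS = {"spine": 0.5, "other bones": 3.0}


def tsau(suv_values, thr, voxel_volume_ml):
    """Total squared abnormal uptake (TSAU) for one abnormal bone region.

    suv_values: SUVs of the voxels in the candidate region.
    thr: threshold for this bone group (assumed input; formula not in the excerpt).
    voxel_volume_ml: volume of a single voxel in mL (assumed input).
    """
    # Only voxels with SUV above the threshold belong to the abnormal region.
    abnormal = [v for v in suv_values if v > thr]
    if not abnormal:
        return 0.0
    # MSAU = mean of (SUV - THR)^2 over the abnormal bone region.
    msau = fmean([(v - thr) ** 2 for v in abnormal])
    # TSAU = MSAU x volume of the abnormal bone region.
    return msau * len(abnormal) * voxel_volume_ml


def tlg(suv_values, voxel_volume_ml):
    """Total lesion glycolysis: mean SUV x region volume (for comparison)."""
    return fmean(suv_values) * len(suv_values) * voxel_volume_ml


def has_focal_uptake(tsau_by_group):
    """Positive if any group's TSAU is higher than its cut-off."""
    return any(tsau_by_group[group] > cut for group, cut in CUTOFFS.items())


if __name__ == "__main__":
    thr, voxel_ml = 2.0, 0.01  # illustrative values, not taken from the paper
    large_moderate = [2.5] * 1000  # large region, moderate uptake
    small_hot = [8.0] * 50         # small lesion, very high uptake
    for name, region in (("large/moderate", large_moderate), ("small/hot", small_hot)):
        print(name, "TSAU:", tsau(region, thr, voxel_ml), "TLG:", tlg(region, voxel_ml))
```

With these made-up inputs, TLG ranks the large moderate region above the small hot lesion while TSAU ranks them the other way round, which is the behaviour the excerpt gives as the reason for preferring TSAU.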

|

Scooped by

nrip

|

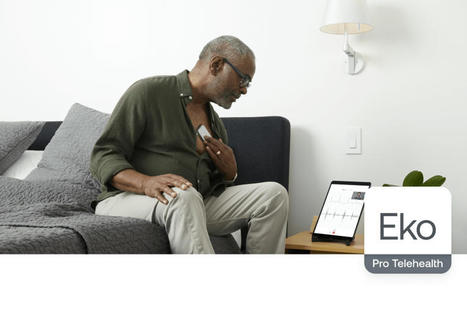

Eko, a cardiopulmonary digital health company, today announced the peer-reviewed publication of a clinical study that found that the Eko artificial intelligence (AI) algorithm for detecting heart murmurs is accurate and reliable, with comparable performance to that of an expert cardiologist. These findings suggest utility of the FDA-cleared Eko AI algorithm as a front line clinical tool to aid clinicians in screening for cardiac murmurs that may be caused by valvular heart disease. For moderate-to-severe aortic stenosis, the algorithm was found to have sensitivity of 93.2% and specificity of 86.0%. The algorithm significantly outperformed general practitioners listening for moderate-to-severe valvular heart disease, as a 2018 study showed general practitioners had sensitivity of 44% and specificity of 69%.
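For readers less familiar with the two metrics quoted above, here is a minimal sketch of how sensitivity and specificity are derived from screening counts. The function name and the counts in the usage note are hypothetical and chosen only for illustration; they are not data from the Eko study.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts.

    tp: diseased patients flagged by the screen (true positives)
    fn: diseased patients missed by the screen (false negatives)
    tn: healthy patients correctly cleared (true negatives)
    fp: healthy patients flagged by mistake (false positives)
    """
    sensitivity = tp / (tp + fn)  # share of diseased patients detected
    specificity = tn / (tn + fp)  # share of healthy patients cleared
    return sensitivity, specificity
```

For example, a hypothetical screen that flags 45 of 50 patients with disease and clears 80 of 100 patients without it has a sensitivity of 90% and a specificity of 80%.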

|

Scooped by

nrip

|

Two scientific leaps, in machine learning algorithms and in powerful biological imaging and sequencing tools, are increasingly being combined to spur progress in understanding diseases and to advance AI itself. Cutting-edge machine-learning techniques are increasingly being adapted and applied to biological data, including for COVID-19. Recently, researchers reported using a new technique to figure out how genes are expressed in individual cells and how those cells interact in people who had died with Alzheimer's disease. Machine-learning algorithms can also be used to compare the expression of genes in cells infected with SARS-CoV-2 to cells treated with thousands of different drugs in order to try to computationally predict drugs that might inhibit the virus. Algorithmic results alone don't prove the drugs are potent enough to be clinically effective, but they can help identify future targets for antivirals, or they could reveal a protein researchers didn't know was important for SARS-CoV-2, providing new insight into the biology of the virus. read the original article which speaks about a lot more at https://www.axios.com/ai-machine-learning-biology-drug-development-b51d18f1-7487-400e-8e33-e6b72bd5cfad.html

|

Scooped by

nrip

|

People often turn to technology to manage their health and wellbeing, whether it is

- to record their daily exercise,
- to measure their heart rate, or, increasingly,
- to understand their sleep patterns.

Sleep is foundational to a person’s everyday wellbeing and can be impacted by (and in turn, have an impact on) other aspects of one’s life — mood, energy, diet, productivity, and more. As part of Google's ongoing efforts to support people’s health and happiness, Google has announced Sleep Sensing in the new Nest Hub, which uses radar-based sleep tracking in addition to an algorithm for cough and snore detection. The new Nest Hub, with its underlying Sleep Sensing features, is the first step in empowering users to understand their nighttime wellness using privacy-preserving radar and audio signals. Understanding Sleep Quality with Audio Sensing The Soli-based sleep tracking algorithm gives users a convenient and reliable way to see how much sleep they are getting and when sleep disruptions occur. However, to understand and improve their sleep, users also need to understand why their sleep is disrupted. To assist with this, Nest Hub uses its array of sensors to track common sleep disturbances, such as light level changes or uncomfortable room temperature. In addition to these, respiratory events like coughing and snoring are also frequent sources of disturbance, but people are often unaware of these events. As with other audio-processing applications like speech or music recognition, coughing and snoring exhibit distinctive temporal patterns in the audio frequency spectrum, and with sufficient data an ML model can be trained to reliably recognize these patterns while simultaneously ignoring a wide variety of background noises, from a humming fan to passing cars. The model uses entirely on-device audio processing with privacy-preserving analysis, with no raw audio data sent to Google’s servers. A user can then opt to save the outputs of the processing (sound occurrences, such as the number of coughs and snore minutes) in Google Fit, in order to view personal insights and summaries of their night time wellness over time. 
read the entire unedited blog post at https://ai.googleblog.com/2021/03/contactless-sleep-sensing-in-nest-hub.html

|


In recent conversations with FIND and WHO, we have discussed the framework that needs to be defined and put in place for assessing the effectiveness of AI tools for use in medicine at the provider level. The basic reasoning behind our conversations has been to ensure that trust in a particular AI technology used in healthcare is created and then maintained through regular monitoring, rather than by simply buying into far-fetched stories. This paper and several others on this topic will be highlighted here, and on Plus91's Medium blog we will put several frameworks together.