
Astronomers at the Institute for Advanced Study and the Flatiron Institute, along with their collaborators, have utilized artificial intelligence (AI) to improve a method of calculating the mass of massive clusters of galaxies. The AI revealed that by incorporating a simple term into an existing equation, researchers can now achieve much more accurate mass estimates than ever before. The newly enhanced calculations will allow scientists to determine the basic characteristics of the universe with greater precision, according to a report by the astrophysicists, which was published in the Proceedings of the National Academy of Sciences (PNAS). “It’s such a simple thing; that’s the beauty of this,” says study co-author Francisco Villaescusa-Navarro, a research scientist at the Flatiron Institute’s Center for Computational Astrophysics (CCA) in New York City. “Even though it’s so simple, nobody before had thought of this and found this term. People have been working on this for decades, and still they were not able to find this. That really shows you the power of AI, which gets smarter every month.” Understanding the universe requires knowing where and how much stuff there is. Galaxy clusters are the most massive objects in the universe: A single cluster can contain anything from hundreds to thousands of galaxies, along with plasma, hot gas, and dark matter. The cluster’s gravity holds these components together. Understanding such galaxy clusters is crucial to pinning down the origin and continuing evolution of the universe. Perhaps the most crucial quantity determining the properties of a galaxy cluster is its total mass. But measuring this quantity is difficult — galaxies cannot be ‘weighed’ by placing them on a scale. The problem is further complicated because the dark matter that makes up much of a cluster’s mass is invisible. Instead, scientists deduce the mass of a cluster from other observable quantities. 
In the early 1970s, Rashid Sunyaev, current distinguished visiting professor at the Institute for Advanced Study’s School of Natural Sciences, and his collaborator Yakov B. Zel’dovich developed a new way to estimate galaxy cluster masses. Their method relies on the fact that as gravity squashes matter together, the matter’s electrons push back. That electron pressure alters how the electrons interact with particles of light called photons. As photons left over from the Big Bang’s afterglow hit the squeezed material, the interaction creates new photons. The properties of those photons depend on how strongly gravity is compressing the material, which in turn depends on the galaxy cluster’s heft. By measuring the photons, astrophysicists can estimate the cluster’s mass. However, this ‘integrated electron pressure’ is not a perfect proxy for mass, because the changes in the photon properties vary depending on the galaxy cluster. Wadekar and his colleagues thought an artificial intelligence tool called ‘symbolic regression’ might find a better approach. The tool essentially tries out different combinations of mathematical operators — such as addition and subtraction — with various variables, to see what equation best matches the data. The work was led by Digvijay Wadekar of the Institute for Advanced Study in Princeton, New Jersey, along with researchers from the CCA, Princeton University, Cornell University, and the Center for Astrophysics | Harvard & Smithsonian.
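The search strategy described above, trying operator and variable combinations and keeping the one that best matches the data, can be illustrated with a toy brute-force sketch. This is not the tool the researchers used: the variable names `x` and `y`, the three-operator set, and the synthetic data are all assumptions made for illustration, and real symbolic regression explores far larger expression spaces.

```python
import itertools
import operator

# Toy operator set (an assumption; real tools use many more operators).
OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def candidate_expressions(variables):
    """Yield (label, function) pairs for every 'a op b' over the variables."""
    pairs = itertools.permutations(variables, 2)
    for (name_a, name_b), (symbol, op) in itertools.product(pairs, OPERATORS.items()):
        label = f"{name_a} {symbol} {name_b}"
        # Default arguments freeze the current names and operator in the lambda.
        yield label, (lambda row, a=name_a, b=name_b, f=op: f(row[a], row[b]))


def mean_squared_error(func, rows, targets):
    """Average squared difference between predictions and targets."""
    return sum((func(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def best_expression(rows, targets, variables):
    """Return the label of the candidate with the lowest error on the data."""
    label, _ = min(
        candidate_expressions(variables),
        key=lambda item: mean_squared_error(item[1], rows, targets),
    )
    return label


# Synthetic data generated from a hidden relation: target = x * y.
rows = [{"x": float(i), "y": float(j)} for i in range(1, 5) for j in range(1, 5)]
targets = [r["x"] * r["y"] for r in rows]

best = best_expression(rows, targets, ["x", "y"])
print(best)
```

On this synthetic data the search recovers the product of the two variables, since that candidate is the only form with zero error.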

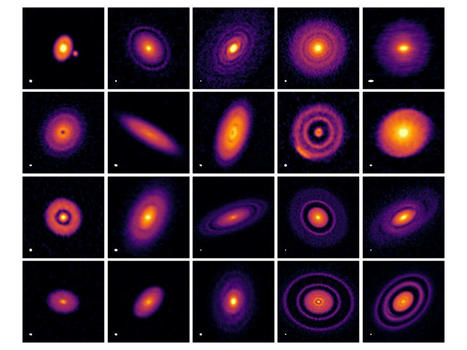

New research from the University of Georgia reveals that artificial intelligence can be used to find planets outside of our solar system. The recent study demonstrated that machine learning can be used to find exoplanets, information that could reshape how scientists detect and identify new planets very far from Earth. "One of the novel things about this is analyzing environments where planets are still forming," said Jason Terry, doctoral student in the UGA Franklin College of Arts and Sciences department of physics and astronomy and lead author on the study. "Machine learning has rarely been applied to the type of data we're using before, specifically for looking at systems that are still actively forming planets." The first exoplanet was found in 1992, and though more than 5,000 are known to exist, those have been among the easiest for scientists to find. Exoplanets at the formation stage are difficult to see for two primary reasons. They are too far away, often hundreds of light years from Earth, and the disks where they form are very thick, thicker than the distance of the Earth to the sun. Data suggests the planets tend to be in the middle of these disks, conveying a signature of dust and gases kicked up by the planet. The research showed that artificial intelligence can help scientists overcome these difficulties. "This is a very exciting proof of concept," said Cassandra Hall, assistant professor of astrophysics, principal investigator of the Exoplanet and Planet Formation Research Group, and co-author on the study. "The power here is that we used exclusively synthetic telescope data generated by computer simulations to train this AI, and then applied it to real telescope data. This has never been done before in our field, and paves the way for a deluge of discoveries as James Webb Telescope data rolls in."
The James Webb Space Telescope, launched by NASA in 2021, has inaugurated a new level of infrared astronomy, bringing stunning new images and reams of data for scientists to analyze. It's just the latest iteration of the agency's quest to find exoplanets, scattered unevenly across the galaxy. The Nancy Grace Roman Observatory, a 2.4-meter survey telescope scheduled to launch in 2027 that will look for dark energy and exoplanets, will be the next major expansion in capability, and in delivery of information and data, to comb through the universe for life. The Webb telescope lets scientists look at exoplanetary systems at extremely bright, high resolution, with the forming environments themselves a subject of great interest as they determine the resulting solar system. "The potential for good data is exploding, so it is a very exciting time for the field," Terry said.

An international team of scientists, led by a researcher at The University of Manchester, have developed a novel AI (artificial intelligence) approach to extract technical astronomy terminology into simple understandable English in their recent publication. The new research is a result of the international RGZ EMU (Radio Galaxy Zoo EMU) collaboration and is transitioning radio astronomy language from specific terms, such as FRI (Fanaroff-Riley Type 1), to plain English terms such as “hourglass” or “traces host galaxy”. In astronomy, technical terminology is used to describe specific ideas in efficient ways that are easily understandable amongst professional astronomers. However, this same terminology can also become a barrier to including non-experts in the conversation. The RGZ EMU collaboration is building a project on the Zooniverse citizen science platform, which asks the public for help in describing and categorizing galaxies imaged through a radio telescope. Modern astronomy projects collect so much data that it is often impossible for scientists to look at it all by themselves, and a computer analysis can still miss interesting things easily spotted by the human eye. Micah Bowles, Lead author and RGZ EMU data scientist, said: “Using AI to make scientific language more accessible is helping us share science with everyone. With the plain English terms we derived, the public can engage with modern astronomy research like never before and experience all the amazing science being done around the world.”

In a new paper published in the journal Nature Astronomy, astronomers with Breakthrough Listen Initiative — the largest ever scientific research program aimed at finding evidence of alien civilizations — present a new machine learning-based method that they apply to more than 480 hours of data from the Robert C. Byrd Green Bank Telescope, observing 820 nearby stars. The method analyzed 115 million snippets of data, from which it identified around 3 million signals of interest. The authors then inspected the 20,515 signals and they identified 8 previously undetected signals of interest, although follow-up observations of these targets have not re-detected them. “The key issue with any techno-signature search is looking through this huge haystack of signals to find the needle that might be a transmission from an alien world,” said Dr. Steve Croft, an astrophysicist at the University of California, Berkeley and a member of the Breakthrough Listen team. “The vast majority of the signals detected by our telescopes originate from our own technology — GPS satellites, mobile phones, and the like. Our algorithm gives us a more effective way to filter the haystack and find signals that have the characteristics we expect from techno-signatures.” Classical techno-signature algorithms compare scans where the telescope is pointed at a target point on the sky with scans where the telescope moves to a nearby position, in order to identify signals that may be coming from only that specific point. These techniques are highly effective. For example, they can successfully identify the Voyager 1 space probe, at a distance of 20 billion km, in observations with the Green Bank Telescope. But all of these algorithms struggle in crowded regions of the radio spectrum, where the challenge is akin to listening for a whisper in a crowded room. 
The process developed by the team inserts simulated signals into real data, and trains an artificial intelligence algorithm known as an auto-encoder to learn their fundamental properties. The output from this process is fed into a second algorithm known as a random forest classifier, which learns to distinguish the candidate signals from the noisy background. “In 2021, our classical algorithms uncovered a signal of interest, denoted BLC1, in data from the Parkes telescope,” said Breakthrough Listen’s principal investigator Dr. Andrew Siemion, an astronomer at the University of California, Berkeley.
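The classical ON/OFF comparison described above can be sketched in a few lines. This is a simplified illustration, not Breakthrough Listen's pipeline: the function name, the frequency lists, and the 1 Hz matching tolerance are hypothetical, and a real search must also account for effects such as Doppler drift. The machine learning stage (auto-encoder plus random forest) is not sketched here.

```python
def localized_signals(on_scans, off_scans, tolerance_hz=1.0):
    """Return frequencies present in every ON scan and absent from every OFF scan.

    Each scan is a list of detected signal frequencies in Hz. A signal that
    also appears when the telescope points away from the target is treated
    as local interference and rejected.
    """
    def seen(freq, scan):
        return any(abs(freq - other) <= tolerance_hz for other in scan)

    candidates = []
    for freq in on_scans[0]:
        in_all_on = all(seen(freq, scan) for scan in on_scans)
        in_any_off = any(seen(freq, scan) for scan in off_scans)
        if in_all_on and not in_any_off:
            candidates.append(freq)
    return candidates


# Hypothetical detections: 1575.42 MHz (the GPS L1 frequency) shows up in
# both ON and OFF scans, while 1420.0 MHz appears only when on target.
on_scans = [[1420.0e6, 1575.42e6]] * 3
off_scans = [[1575.42e6]] * 3

hits = localized_signals(on_scans, off_scans)
print(hits)
```

Only the signal that follows the telescope's pointing survives the filter, which is the property the article attributes to the classical approach.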

Deep learning has helped advance the state-of-the-art in multiple fields over the last decade, and scientific research is no exception. The Kepler instrument is a space-based telescope designed to study planets outside our solar system, aka exoplanets. The first exoplanet orbiting a star like our own was described by Didier Queloz and Michel Mayor in 1995, landing the pair the 2019 Nobel Prize in Physics. More than a decade later when Kepler was launched in 2009, the total number of known exoplanets was less than 400. The now-dormant telescope began operation in 2009, discovering more than 1,000 new exoplanets before a reaction wheel, a component used for precision pointing, failed in 2013. This brought the primary phase of the mission to an end. Some clever engineering changes allowed the telescope to begin a second period of data acquisition, termed K2. The data from K2 were noisier and limited to 80 days of continuous observation or less. These limitations pose challenges in identifying promising planetary candidates among the thousands of putative planetary signals, a task that had been previously handled nicely by a convolutional neural network (AstroNet) working with the data from Kepler’s primary data collection phase. Researchers at the University of Texas, Austin decided to try the same approach and derived AstroNet-K2 from the architecture of AstroNet to sort K2 planetary signals. After training, AstroNet-K2 had a 98% accuracy rate in identifying confirmed exoplanets in the test set, with low false positives. The authors deemed this performance to be sufficient for use as an analysis tool rather than full automation, requiring human follow-up.

From the paper: “While the performance of our network is not quite at the level required to generate fully automatic and uniform planet candidate catalogs, it serves as a proof of concept.” (Dattilo et al. 2019)

AstroNet-K2 receives this blog post’s coveted “Best Value” award for achieving a significant scientific bang-for-your-buck. Unlike the other two projects on this list that are more conceptual demonstrations, this project resulted in actual scientific progress, adding two new confirmed entries to the catalog of known exoplanets: EPIC 246151543 b and EPIC 246078672 b. In addition to the intrinsic challenges of the K2 data, the signals for the planets were further confounded by Mars transiting the observation window and by 5 days of missing data associated with a safe mode event. This is a pretty good example of effective machine learning in action: the authors took an existing conv-net with a proven track record and modified it to perform well on the given data, adding a couple new discoveries from a difficult observation run without re-inventing the wheel. Worth noting is that the study’s lead author, Anne Dattilo, was an undergraduate at the time the work was completed. That’s a pretty good outcome for an undergraduate research project. The use of open-source software and building on previously developed architectures underlines the fact that deep learning is in an advanced readiness phase. The technology is not fully mature to the point of ubiquity, but the tools are all there on the shelf ready to be applied.

Astronomers have a new tool in their search for extraterrestrial life -- a sophisticated bot that helps identify stars hosting planets similar to Jupiter and Saturn. These giant planets' faraway twins may protect life in other solar systems, but they aren't bright enough to be viewed directly. Scientists find them based on properties they can observe in the stars they orbit. The challenge for planet hunters is that in our galaxy alone, there are roughly 200 billion stars. "Searching for planets can be a long and tedious process given the sheer volume of stars we could search," said Stephen Kane, UCR associate professor of planetary astrophysics. "Eliminating stars unlikely to have planets and pre-selecting those that might will save a ton of time," he said. The astronomy bot is a machine learning algorithm designed by Natalie Hinkel, a researcher formerly in Kane's laboratory now with the Southwest Research Institute. Kane examined data produced by the bot and discovered three stars with strong evidence of harboring giant, Jupiter-like planets about 100 light years away. A paper detailing the team's work was published today in the Astrophysical Journal. The algorithm uses information about the chemical composition of stars to predict whether it is surrounded by planets. Scientists can use spectroscopy, or the way light interacts with atoms in a star's upper layers, to measure the elements inside of it such as carbon, iron, and oxygen. These elements are key ingredients in making planets, since stars and planets are made at the same time and from the same materials. To train and test the algorithm, Hinkel fed it a publicly available database of stars that she developed. The database has allowed the algorithm to look at the elements that make up more than 4,200 stars and assess their likelihood of hosting planets. In addition, Hinkel looked at different combinations of those ingredients to see how they influenced the algorithm. 
"We found that the most influential elements in predicting planet-hosting stars are carbon, oxygen, iron and sodium," Hinkel said.

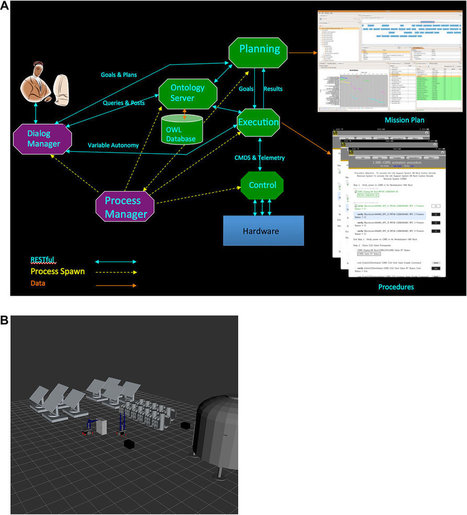

A team of engineers at TRACLabs Inc. in the U.S. is making inroads toward the creation of a planetary base station monitoring system similar in some respects to Hal 9000—the infamous AI system in the movie 2001: A Space Odyssey. In this case, it is called cognitive architecture for space agents (CASE) and is outlined in a Focus piece by Pete Bonasso, the primary engineer working on the project, in the journal Science Robotics. Bonasso explains that he has had an interest in creating a real Hal 9000 ever since watching the movie as a college student—minus the human killing, of course. His system is designed to run a base situated on another planet, such as Mars. It is meant to take care of the more mundane, but critical tasks involved with maintaining a habitable planetary base, such as maintaining oxygen levels and taking care of waste. He notes that such a system needs to know what to do and how to do it, carrying out activities using such hardware as robot arms. To that end, CASE has been designed as a three-layered system. The first is in charge of controlling hardware, such as power systems, life-support, etc. The second layer is more brainy—it is in charge of running the software that controls the hardware. The third layer is even smarter, responsible for coming up with solutions to problems as they arise—if damage occurs to a module, for example, it must be sealed off from others modules as quickly as possible. The system also has what Bonasso describes as an ontological system—its job is to be self-aware so that the system can make judgment calls when comparing data from sensors with what it has learned in the past and with information received from human occupants. To that end, the system will be expected to interact with those humans in ways similar to those portrayed in the movie. Bonasso reports that he and his team have built a virtual reality prototype of a planetary base, which CASE has thus far managed to run for up to four hours. 
He acknowledges that a lot more work needs to be done. Luckily, they still have a lot of time, as plans for human habitation of Mars and beyond are still decades away.

Researchers working on Search for Extraterrestrial Intelligence (SETI) efforts hunt for the same thing that their predecessors sought for decades—a sign that life arose, as Carl Sagan would say, on another humdrum planet around another humdrum star and rose up into something technologically advanced. It could happen any day. A strange radio signal. A weird, brief flash in the night sky. A curiously behaving star with no natural explanation. It could be anything, so SETI researchers are casting a wide net, tracking down as many promising leads as they can. But one thing they’ve started to realize is that if a civilization from another world follows a similar path to our own, then we may be dealing with a whole different form of brainpower. Not a little green person, Vulcan, or strange organism we aren’t yet fathoming, but an artificial intelligence. To understand why the first intelligence we meet might be artificial, we have to go back to early efforts to look for life around other stars. SETI researchers started listening to the cosmos on the assumption that aliens might begin radio transmissions as a first advanced technological step if they’re at all like us. There’s reason to believe that, like our own path, getting from the era of radio to the computing era is a small jump. “By 1900 you had radio; by 1945 you had computers,” Seth Shostak, senior scientist at the SETI Institute, says. “It seems to me that’s a hard arc to avoid.” And from there, it may just be a matter of getting those computers smaller and smaller as they get smarter and smarter. Automated processes learn to adapt on their own, and someday, rudimentary intelligence arrives, just as it has here. “There’s currently an AI revolution, and we see artificial intelligence getting smarter and smarter by the day,” Susan Schneider, an associate professor of cognitive science and philosophy at the University of Connecticut who has written about the intersection of SETI and AI, says. 
“That suggests to me something similar may be going on at other points in the universe.” Maybe the great filter comes and augmented aliens survive. Then their AI offspring take the wheel. Do a bunch of apes with noisy radio signals and the odd act of nuclear warfare really appeal to them—are they even actively looking for something like us? When it comes to that idea, Shostak says, “It’s not even dangerous (for the aliens). It’s uninteresting. It’s like me putting a sign up in my yard saying ‘attention all ants.’” In this case, we’re the ants. We may not have the resources of an alien society, and if artificial intelligence is supposed to search for signs of far, far advanced technology, we’re barely a blip on their radar. “Earth is actually a relatively young planet so some astrobiologists think if there are civilizations out there, they may be vastly more advanced than us. Sure, we got radio. Then we got computers. Then Moore’s Law turned digital computers into increasingly efficient machines, year-by-year. Machines improved very quickly—much, much more quickly than Darwin's life forms,” Shostak says. Meanwhile, the aliens from the older planets get more advanced. So does their AI. Maybe it becomes the most dominant lifeform on the planet. It takes over its planet, then its star. It sends itself out into the universe in general—or it’s content to stay home for whatever reason. It’s plentiful and abundant and highly advanced, and when it comes across Earth, it doesn’t see anything particularly special. An alien AI may be just a few thousand years ahead of us, technologically, but it may still be advanced enough to grow disinterested in finding ants. We might be like cats or goldfish compared to humans and they may not want to have anything to do with us. Our goldfish status could put us in a weird place. We may be just as likely to encounter biological life on a scale unimaginable to us right now, or we may make contact with their probes before we find them. 
We may find a semi-intelligent Bracewell beacon from afar, or one may swoop through our backyard, its AI trained to home in on the fingerprints of our civilization. We may find robots sent by the aliens, or we may find out robots are the aliens. At a base level, it’s possible to imagine that our first meeting with intelligent life beyond Earth might not be with something living and breathing, but with a different kind of fellow explorer, one who just might happen to be a machine.

The European Space Agency’s Gaia satellite has been helping map the billions of stars in the Milky Way, and a group of astronomers is using the spacecraft’s stunning new star catalog to search for signs of highly advanced alien civilizations. A team of scientists from Sweden’s Uppsala University and the University of Heidelberg in Germany combed Gaia’s first data release of a billion stars from September 2016. They then cross-referenced it with observations from the Australia-based Milky Way-watching RAVE project looking for differences in how far away the two observatories place certain stars. Simply put, the Gaia data shows how far away a star is by watching it move across a background of far more distant stars, an effect called parallax. RAVE data, on the other hand, estimates the distance of a star based on the properties of its brightness. So the scientists looked for stars observed by both Gaia and RAVE where the data seemed to disagree on the distance of a star. The idea is that the disparity in distance measurements by the different instruments could be explained by the presence of a partial Dyson sphere, a structure that envelopes or partially envelopes a star to harvest its energy. Science fiction has long envisioned huge alien megastructures like planet-size artificial spheres or rings or swarms of energy-collecting spacecraft. They’ve also been floated as a possible explanation for the strange dimming and re-brightening behavior of the now famous Boyajian’s Star, also known as Tabby’s Star or KIC 8462852. Cross-referencing the data sets revealed a handful of stars in Gaia data that may possibly be circled by such alien megastructures. However, upon closer examination, the team determined that errors in the data could account for differing measurements in the case of all but one star named TYC 6111-1162-1. The researchers used a third observatory to take a closer look at the star. 
They didn’t find evidence of alien megastructures, but instead found evidence of an unseen companion star, which could also account for the discrepancies in measurements. While they haven’t discovered advanced aliens just yet, the team maintains that their method of searching for possible Dyson spheres is still sound. They say the search will become even easier with future releases of Gaia data, which will provide both types of distance measurements for many stars. “We estimate that Gaia Data Release 3, currently scheduled for late 2020, should allow the technique to be applied to samples of (about 1 million) stars,” they write in a report uploaded to Cornell’s arXiv repository of scientific papers. Meanwhile, the second Gaia Data Release dropped on Wednesday, with a fresh batch of 1.7 billion stars to check for ambitious alien construction projects. If anyone finds anything, please notify Elon Musk. He’s always looking for fresh inspiration.
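The distance comparison at the heart of this search can be written as a short worked example. It is a sketch under stated assumptions, not the team's code: it assumes a structure that blocks a fraction `f` of the star's light without otherwise changing its spectrum, so the star looks fainter and its brightness-based distance is overestimated by a factor of `1 / sqrt(1 - f)`, while the parallax distance is unaffected. The function names and the example numbers are hypothetical.

```python
def parallax_distance_pc(parallax_mas):
    """Distance in parsecs from a parallax in milliarcseconds (d = 1000 / p)."""
    return 1000.0 / parallax_mas


def covering_fraction(parallax_mas, brightness_distance_pc):
    """Fraction of starlight that would need to be blocked to explain the gap.

    Assumes the brightness-based distance is inflated only by uniform dimming:
    d_brightness = d_parallax / sqrt(1 - f), hence
    f = 1 - (d_parallax / d_brightness) ** 2.
    """
    d_parallax = parallax_distance_pc(parallax_mas)
    return 1.0 - (d_parallax / brightness_distance_pc) ** 2


# Hypothetical star: a 10 mas parallax places it at 100 pc, but its
# brightness suggests 125 pc.
fraction = covering_fraction(parallax_mas=10.0, brightness_distance_pc=125.0)
print(round(fraction, 2))  # 0.36
```

A value near zero means the two distance estimates agree. As the article notes, measurement errors or an unseen companion star can produce the same kind of mismatch, so a nonzero value is only a starting point for follow-up.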

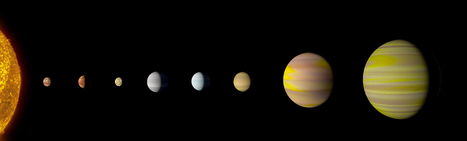

Our solar system is now tied for the most planets around a single star, with the recent discovery of an eighth planet circling Kepler-90, a Sun-like star 2,545 light years from Earth. The planet was discovered in data from NASA’s Kepler Space Telescope. The newly-discovered Kepler-90i – a sizzling hot, rocky planet that orbits its star once every 14.4 days – was found using machine learning from Google. Machine learning is an approach to artificial intelligence in which computers “learn.” In this case, computers learned to identify planets by finding in Kepler data instances where the telescope recorded signals from planets beyond our solar system, known as exoplanets. “Just as we expected, there are exciting discoveries lurking in our archived Kepler data, waiting for the right tool or technology to unearth them,” said Paul Hertz, director of NASA’s Astrophysics Division in Washington. “This finding shows that our data will be a treasure trove available to innovative researchers for years to come.” The discovery came about after researchers Christopher Shallue and Andrew Vanderburg trained a computer to learn how to identify exoplanets in the light readings recorded by Kepler – the minuscule change in brightness captured when a planet passed in front of, or transited, a star. Inspired by the way neurons connect in the human brain, this artificial “neural network” sifted through Kepler data and found weak transit signals from a previously-missed eighth planet orbiting Kepler-90, in the constellation Draco. While machine learning has previously been used in searches of the Kepler database, this research demonstrates that neural networks are a promising tool in finding some of the weakest signals of distant worlds. Other planetary systems probably hold more promise for life than Kepler-90. About 30 percent larger than Earth, Kepler-90i is so close to its star that its average surface temperature is believed to exceed 800 degrees Fahrenheit, on par with Mercury.
Its outermost planet, Kepler-90h, orbits at a similar distance to its star as Earth does to the Sun. “The Kepler-90 star system is like a mini version of our solar system. You have small planets inside and big planets outside, but everything is scrunched in much closer,” said Vanderburg, a NASA Sagan Postdoctoral Fellow and astronomer at the University of Texas at Austin. Shallue, a senior software engineer with Google’s research team Google AI, came up with the idea to apply a neural network to Kepler data. He became interested in exoplanet discovery after learning that astronomy, like other branches of science, is rapidly being inundated with data as the technology for data collection from space advances. “In my spare time, I started googling for ‘finding exoplanets with large data sets’ and found out about the Kepler mission and the huge data set available,” said Shallue. "Machine learning really shines in situations where there is so much data that humans can't search it for themselves.”
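To give a sense of how faint these transit signals are, here is a rough worked sketch. It is not the neural network Shallue and Vanderburg trained. It uses the standard approximation that a transit dims the star by the square of the planet-to-star radius ratio, and it assumes a star exactly the size of the Sun and a planet 1.3 times Earth's radius (taking "30 percent larger" to refer to radius). The light curve and the threshold are made up for illustration.

```python
from statistics import median

EARTH_RADIUS_KM = 6371.0
SUN_RADIUS_KM = 695_700.0


def transit_depth(planet_radius_km, star_radius_km):
    """Fractional drop in brightness while the planet crosses the star."""
    return (planet_radius_km / star_radius_km) ** 2


def dip_indices(flux, depth_threshold):
    """Indices of samples that fall below the median flux by more than the threshold."""
    baseline = median(flux)
    return [i for i, value in enumerate(flux) if baseline - value > depth_threshold]


# Assumed geometry: a planet 1.3 Earth radii across a Sun-sized star.
depth = transit_depth(1.3 * EARTH_RADIUS_KM, SUN_RADIUS_KM)
print(f"{depth * 1e6:.0f} parts per million")

# Made-up, noise-free light curve with two exaggerated dips.
flux = [1.0] * 20
for i in (5, 6, 15, 16):
    flux[i] = 1.0 - 0.001
dips = dip_indices(flux, depth_threshold=0.0005)
print(dips)
```

Under these assumptions the dip is on the order of a hundred parts per million. Real light curves are noisy, which is why a simple threshold like this one misses weak signals and why a learned classifier is attractive.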

Machine-learning technology developed at Los Alamos National Laboratory played a key role in the discovery of supernova ASASSN-15lh, an exceptionally powerful explosion that was 570 billion times brighter than the sun and more than twice as luminous as the previous record-holding supernova. This extraordinary event marking the death of a star was identified by the All Sky Automated Survey for SuperNovae (ASAS-SN) and is described in a new study published today in Science.

"This is a golden age for studying changes in astronomical objects thanks to rapid growth in imaging and computing technology," said Przemek Wozniak, the principal investigator of the project that created the software system used to spot ASASSN-15lh. "ASAS-SN is a leader in wide-area searches for supernovae using small robotic telescopes that repeatedly observe the same areas of the sky looking for interesting changes."

ASASSN-15lh was first observed in June 2015 by twin ASAS-SN telescopes, just 14 centimeters in diameter, located in Cerro Tololo, Chile. While supernovae already rank among the most energetic explosions in the universe, this one was 200 times more powerful than a typical supernova. The event appears to be an extreme example of a "superluminous supernova," a recently discovered class of rare cosmic explosions, most likely associated with gravitational collapse of dying massive stars. However, the record-breaking properties of ASASSN-15lh stretch even the most exotic theoretical models based on rapidly spinning neutron stars called magnetars.

"The grand challenge in this work is to select rare transient events from a deluge of imaging data in time to collect detailed follow-up observations with larger, more powerful telescopes," said Wozniak. "We developed an automated software system based on machine-learning algorithms to reliably separate real transients from bogus detections." This new technology will soon enable scientists to find ten or perhaps even hundred times more supernovae and explore truly rare cases in great detail. Since January 2015 this capability has been deployed on a live data stream from ASAS-SN.

Los Alamos is also developing high-fidelity computer simulations of shock waves and radiation generated in supernova explosions. As explained by Chris Fryer, a computational scientist at Los Alamos who leads the supernova simulation and modeling group, "By comparing our models with measurements collected during the onset of a supernova, we will learn about the progenitors of these violent events, the end stages of stellar evolution leading up to the explosion, and the explosion mechanism itself."

The next generation of massive sky monitoring surveys is poised to deliver a steady stream of high-impact discoveries like ASASSN-15lh. The Large Synoptic Survey Telescope (LSST) expected to go on sky in 2022 will collect 100 Petabytes (100 million Gigabytes) of imaging data. The Zwicky Transient Facility (ZTF) planned to begin operations in 2017 is designed to routinely catch supernovae in the act of exploding. However, even with LSST and ZTF up and running, ASAS-SN will have a unique advantage of observing the entire visible sky on daily cadence. Los Alamos is at the forefront of this field and well prepared to make important contributions in the future.

The Navy successfully landed a drone the size of a fighter jet aboard an aircraft carrier for the first time on July 10, 2013, showcasing the military's capability to have a computer program perform one of the most difficult tasks that a pilot is asked to do. The landing of the X-47B experimental aircraft means the Navy can move forward with its plans to develop another unmanned aircraft that will join the fleet alongside traditional airplanes to provide around-the-clock surveillance while also possessing a strike capability. It also would pave the way for the U.S. to launch unmanned aircraft without the need to obtain permission from other countries to use their bases. "It is not often that you get a chance to see the future, but that's what we got to do today. This is an amazing day for aviation in general and for naval aviation in particular," Navy Secretary Ray Mabus said after watching the landing. The X-47B experimental aircraft took off from Naval Air Station Patuxent River in Maryland before approaching the USS George H.W. Bush, which was operating about 70 miles off the coast of Virginia. The tail-less drone landed by deploying a hook that caught a wire aboard the ship and brought it to a quick stop, just like normal fighter jets do. The maneuver is known as an arrested landing and had previously only been done by the drone on land at Patuxent River. Landing on a ship that is constantly moving while navigating through turbulent air behind the aircraft carrier is seen as a more difficult maneuver, even on a clear day with low winds like Wednesday. Rear Adm. Mat Winter, the Navy's program executive officer for unmanned aviation and strike weapons, said everything about the flight — including where on the flight deck the plane would first touch and how many feet its hook would bounce — appeared to go exactly as planned. "This is a historic day. This is a banner day. This is a red-flag letter day," Winter said. 
"You can call it what you want, but the fact of the matter is that you just observed history — history that your great-grandchildren, my great grandchildren, everybody's great grandchildren are going to be reading in our history books."


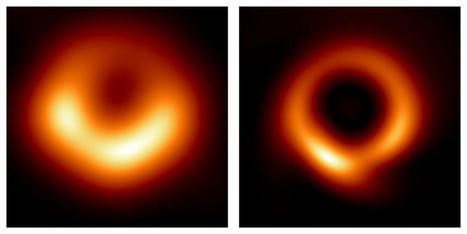

The famous first image of a black hole just got two times sharper. A research team used artificial intelligence to dramatically improve upon its first image from 2019, which now shows the black hole at the center of the M87 galaxy as darker and bigger than the first image depicted.

AI algorithms, in particular neural networks that use many interconnected nodes and are able to learn to recognize patterns, are perfectly suited for picking out the patterns of galaxies. Astronomers began using neural networks to classify galaxies in the early 2010s. Now the algorithms are so effective that they can classify galaxies with an accuracy of 98%.

Astronomers working on SETI, the Search for Extraterrestrial Intelligence, use radio telescopes to look for signals from distant civilizations. Early on, radio astronomers scanned charts by eye to look for anomalies that couldn't be explained. More recently, researchers harnessed 150,000 personal computers and 1.8 million citizen scientists to look for artificial radio signals. Now, researchers are using AI to sift through reams of data much more quickly and thoroughly than people can. This has allowed SETI efforts to cover more ground while also greatly reducing the number of false positive signals.

Another example is the search for exoplanets. Astronomers discovered most of the 5,300 known exoplanets by measuring a dip in the amount of light coming from a star when a planet passes in front of it. AI tools can now pick out the signs of an exoplanet with 96% accuracy.

Making new discoveries

AI has proved itself to be excellent at identifying known objects, like galaxies or exoplanets, that astronomers tell it to look for. But it is also quite powerful at finding objects or phenomena that are theorized but have not yet been discovered in the real world.
Teams have used this approach to detect new exoplanets, learn about the ancestral stars that led to the formation and growth of the Milky Way, and predict the signatures of new types of gravitational waves. To do this, astronomers first use AI to convert theoretical models into observational signatures, including realistic levels of noise. They then use machine learning to sharpen the ability of AI to detect the predicted phenomena.

Finally, radio astronomers have also been using AI algorithms to sift through signals that don't correspond to known phenomena. Recently a team from South Africa found a unique object that may be a remnant of the explosive merging of two supermassive black holes. If this proves to be true, the data will allow a new test of general relativity, Albert Einstein's description of space-time.

Making predictions and plugging holes

As in many areas of life recently, generative AI and large language models like ChatGPT are also making waves in the astronomy world. The team that created the first image of a black hole in 2019 used a generative AI to produce its new image. To do so, it first taught an AI how to recognize black holes by feeding it simulations of many kinds of black holes. Then, the team used the AI model it had built to fill in gaps in the massive amount of data collected by the radio telescopes on the black hole M87. Using this simulated data, the team was able to create a new image that is two times sharper than the original and is fully consistent with the predictions of general relativity.

Astronomers are also turning to AI to help tame the complexity of modern research. A team from the Harvard-Smithsonian Center for Astrophysics created a language model called astroBERT to read and organize 15 million scientific papers on astronomy. Another team, based at NASA, has even proposed using AI to prioritize astronomy projects, a process that astronomers engage in every 10 years.
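To make the transit dip and the "signature plus realistic noise" idea from this article concrete, here is a minimal sketch. It is not any team's actual pipeline: the box-shaped transit, the noise level, the sample counts and every function name are assumptions chosen for illustration. The only physics used is the standard geometric result that the fractional dip is roughly the square of the planet-to-star radius ratio.

```python
import random
import statistics

def transit_depth(planet_radius, star_radius):
    """Fractional dip in starlight while the planet is fully in front of the star."""
    return (planet_radius / star_radius) ** 2

def simulate_light_curve(depth, n_points=2000, start=900, end=1100,
                         noise_sigma=0.002, seed=0):
    """Box-shaped transit plus Gaussian noise (all parameters are hypothetical)."""
    rng = random.Random(seed)
    flux = []
    for i in range(n_points):
        level = 1.0 - depth if start <= i < end else 1.0
        flux.append(level + rng.gauss(0.0, noise_sigma))
    return flux

def estimate_depth(flux, start=900, end=1100):
    """Recover the dip as mean out-of-transit flux minus mean in-transit flux."""
    in_transit = flux[start:end]
    out_of_transit = flux[:start] + flux[end:]
    return statistics.mean(out_of_transit) - statistics.mean(in_transit)

R_JUPITER_KM = 71_492    # Jupiter's equatorial radius
R_SUN_KM = 695_700       # nominal solar radius

depth = transit_depth(R_JUPITER_KM, R_SUN_KM)   # roughly a 1% dip
flux = simulate_light_curve(depth)
recovered = estimate_depth(flux)
print(f"true depth {depth:.4f}, recovered {recovered:.4f}")
```

A Jupiter-sized planet crossing a Sun-like star dims it by only about one percent, which is why noise handling matters so much. A trained classifier would replace the simple averaging in `estimate_depth`, which here assumes the transit window is already known.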

Researchers have developed a computer vision-based approach to sharpening images captured by ground-based telescopes. While vital to humanity’s existence on Earth, the planet’s atmosphere is a major nuisance for astronomers trying to learn more about what’s beyond Earth. The cosmos would be much easier to study without the pesky atmosphere. Researchers at Northwestern University in Chicago and Tsinghua University in Beijing have unveiled a new artificial intelligence-powered approach to cleaning up images captured by ground-based telescopes. The primary culprit behind blurry images is shifting pockets of air in the atmosphere. These air pockets can obfuscate the shapes of celestial bodies and can also affect the measurements scientists can make of distant objects, leading to incorrect conclusions. Space-based telescopes, such as Hubble and the James Webb Space Telescope, avoid this issue altogether by leaving Earth’s atmosphere. However, space telescopes are monumentally expensive and always in high demand by astronomers. Ground-based telescopes are easier to build, more affordable, and much simpler to fix when issues arise. To deal with the blur caused by the atmosphere, ground-based telescopes are often built in arid climates and at high altitudes. Even still, blur must be dealt with. The light emanating from distant stars, planets, and galaxies travels a very long way to reach Earth. The light’s journey may encounter different gravitational effects, which can warp the light as observed from Earth. However, the light must also travel through Earth’s atmosphere before it reaches ground-based telescopes. “Even clear night skies still contain moving air that affects light passing through it. That’s why stars twinkle and why the best ground-based telescopes are located at high altitudes where the atmosphere is thinnest,” Northwestern explains. 
Alexander describes the effect as “a bit like looking up from the bottom of a swimming pool.” The atmosphere is much less dense than water, but the concept is similar. The resulting blur is a significant problem for astrophysicists analyzing images for important cosmological data. The apparent shape of galaxies sheds light on large-scale cosmological structures and their gravitational effects. “Slight differences in shape can tell us about gravity in the universe. These differences are already difficult to detect. If you look at an image from a ground-based telescope, a shape might be warped. It’s hard to know if that’s because of a gravitational effect or the atmosphere,” Alexander says. If an image is blurry, it’s hard to conclude much about the shape of what’s being observed. By removing the blur precisely and mathematically, the true nature of distant objects can be measured and analyzed. Scientists use numerous software-based approaches to process images to make them sharper and more useful. However, the new computer-vision approach, first seen on Space, promises better and faster results. The algorithm the team built has been explicitly adapted for processing astronomical images captured by ground-based telescopes, the first time such AI technology has been used for this purpose. The team has trained the AI on data simulated to match the Vera C. Rubin Observatory, which will house the world’s largest digital camera when it opens next year in Chile. The new algorithm will be immediately compatible as soon as the telescope is ready for operations. It’s like the impressive observatory has received an upgrade before it even starts its stargazing. “Photography’s goal is often to get a pretty, nice-looking image,” says Northwestern’s Emma Alexander, the study’s senior author. “But astronomical images are used for science. By cleaning up images in the right way, we can get more accurate data. 
The algorithm removes the atmosphere computationally, enabling physicists to obtain better scientific measurements. At the end of the day, the images do look better as well.” Alexander is an assistant professor of computer science at Northwestern’s McCormick School of Engineering, where she leads the Bio Inspired Vision Lab. The new study was co-authored by Tianao Li, an undergraduate student in electrical engineering at Tsinghua University and a research intern in Alexander’s lab. The team’s optimization algorithm, built upon a deep learning network trained on astronomical images, produces images with 38.6 percent less error than classic methods for removing blur and 7.4 percent less error compared to modern methods. That may not seem like a huge difference, but it is in the realm of astronomy. Relatively small improvements in accuracy and efficiency can have massive, far-reaching benefits to cutting-edge astronomy.
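For readers who want a feel for what "removing blur mathematically" means, the sketch below implements Richardson-Lucy deconvolution, a long-established classic technique. It is not the team's deep-learning algorithm, and the article does not say which classic methods the study compared against. The one-dimensional toy sky, the Gaussian blur kernel and all names are assumptions made purely for illustration.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized, symmetric 1D Gaussian point-spread function (PSF)."""
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(signal, kernel):
    """Circular blur with the kernel; wraparound keeps the total flux constant."""
    n, half = len(signal), len(kernel) // 2
    return [
        sum(w * signal[(i + k - half) % n] for k, w in enumerate(kernel))
        for i in range(n)
    ]

def richardson_lucy(observed, kernel, iterations=50):
    """Richardson-Lucy deconvolution for a known, symmetric PSF."""
    estimate = list(observed)  # start from the blurred data
    for _ in range(iterations):
        reblurred = blur(estimate, kernel)
        ratio = [o / max(r, 1e-12) for o, r in zip(observed, reblurred)]
        # For a symmetric PSF the flipped-kernel blur equals blur() itself.
        correction = blur(ratio, kernel)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate

def rms_error(a, b):
    """Root-mean-square difference between two equal-length signals."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Toy "sky": flat background with two point sources (hypothetical values).
truth = [1.0] * 64
truth[20] = 20.0
truth[40] = 10.0

psf = gaussian_kernel(sigma=1.5, radius=4)
observed = blur(truth, psf)              # noise-free blurred observation
restored = richardson_lucy(observed, psf)

blurred_error = rms_error(observed, truth)
restored_error = rms_error(restored, truth)
print(f"RMS error before: {blurred_error:.3f}, after: {restored_error:.3f}")
```

The sketch assumes the blur kernel is known exactly and the data are noise-free. Real ground-based images satisfy neither assumption, which is part of why learned methods such as the one described above can do better.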

Wouldn’t finding life on other worlds be easier if we knew exactly where to look for it? Researchers have limited opportunities to collect samples on Mars or elsewhere or access remote sensing instruments when hunting for life beyond Earth. In a paper published in Nature Astronomy, an interdisciplinary study led by SETI Institute Senior Research Scientist Kim Warren-Rhodes mapped the sparse life hidden away in salt domes, rocks and crystals at Salar de Pajonales at the boundary of the Chilean Atacama Desert and Altiplano. Warren-Rhodes then worked with co-investigators Michael Phillips (Johns Hopkins Applied Physics Lab) and Freddie Kalaitzis (University of Oxford) to train a machine learning model to recognize the patterns and rules associated with their distributions so it could learn to predict and find those same distributions in data on which it was not trained. In this case, by combining statistical ecology with AI/ML, the scientists could locate and detect biosignatures up to 87.5% of the time (versus ≤10% by random search) and decrease the area needed for search by up to 97%.

“Our framework allows us to combine the power of statistical ecology with machine learning to discover and predict the patterns and rules by which nature survives and distributes itself in the harshest landscapes on Earth,” said Warren-Rhodes. “We hope other astrobiology teams adapt our approach to mapping other habitable environments and biosignatures. With these models, we can design tailor-made roadmaps and algorithms to guide rovers to places with the highest probability of harboring past or present life, no matter how hidden or rare.”

A video clip showing the major concepts of integrating datasets from orbit to the ground is presented. The first frames zoom in from a global view to an orbital image of Salar de Pajonales. The salar is then overlain with an interpretation of its compositional variability derived from ASTER multispectral data. The next sequence of frames transitions to drone-derived images of the field site within Salar de Pajonales. Note features of interest that become identifiable in the scene, starting with polygonal networks of ridges, then individual gypsum domes and polygonal patterned ground, and ending with individual blades of selenite. The video ends with a first-person view of a set of gypsum domes studied in the article using machine learning techniques. Video credit: M. Phillips

Ultimately, similar algorithms and machine learning models for many different types of habitable environments and biosignatures could be automated onboard planetary robots to efficiently guide mission planners to areas at any scale with the highest probability of containing life. Warren-Rhodes and the SETI Institute NASA Astrobiology Institute (NAI) team used Salar de Pajonales as a Mars analog. Pajonales is a high-altitude (3,541 m), high-UV, hyperarid dry salt lakebed, considered inhospitable to many life forms but still habitable.

During the NAI project’s field campaigns, the team collected over 7,765 images and 1,154 samples and tested instruments to detect photosynthetic microbes living within the salt domes, rocks and alabaster crystals. These microbes exude pigments that represent one possible biosignature on NASA’s Ladder of Life Detection.

At Pajonales, drone flight imagery connected simulated orbital (HiRISE) data to ground sampling and 3D topographical mapping to extract spatial patterns. The study’s findings confirm (statistically) that microbial life at the Pajonales terrestrial analog site is not distributed randomly but concentrated in patchy biological hotspots strongly linked to water availability at km to cm scales. Next, the team trained convolutional neural networks (CNNs) to recognize and predict macro-scale geologic features at Pajonales—some of which, like patterned ground or polygonal networks, are also found on Mars—and micro-scale substrates (or ‘micro-habitats’) most likely to contain biosignatures.

Like the Perseverance team on Mars, the researchers tested how to effectively integrate a UAV/drone with ground-based rovers, drills and instruments (e.g., VISIR on ‘MastCam-Z’ and Raman on ‘SuperCam’ on the Mars 2020 Perseverance rover).

An alien mind is headed for planet Earth and we have no reason to believe it will be friendly. Some experts predict it will get here within 30 years, while others insist it will arrive far sooner. Nobody knows what it will look like, but it will share two key traits with us humans – it will be intelligent and self-aware. No, this alien will not come from a distant planet – it will be born right here on Earth, hatched in a research lab at a major university or large corporation. I am referring to the first artificial general intelligence (AGI) that reaches (or exceeds) human-level cognition. Currently, billions are being spent to bring this alien to life, as it would be viewed as one of the greatest technological achievements in human history. But unlike our other inventions, this one will have a mind of its own, literally. And if it behaves like every other intelligent species we know, it will put its own self-interests first, working to maximize its prospects for survival.

AI in our own image

Should we fear a superior intelligence driven by its own goals, values and self-interests? Many people reject this question, believing we will build AI systems in our own image, ensuring they think, feel and behave just like we do. This is extremely unlikely to be the case. Artificial minds will not be created by writing software with carefully crafted rules that make them think like us. Instead, engineers feed massive datasets into simple algorithms that automatically adjust their own parameters, making millions upon millions of tiny changes to their structure until an intelligence emerges – an intelligence with inner workings that are far too complex for us to comprehend. And no – feeding it data about humans will not make it think like humans do. This is a common misconception – the false belief that by training an AI on data that describes human behaviors, we will ensure it ends up thinking, feeling and acting like we do. It will not.
Instead, we will build these AI creatures to know humans, not to be human. And yes, they will know us inside and out, able to speak our languages and interpret our gestures, read our facial expressions and predict our actions. They will understand how we make decisions, for good and bad, logical and illogical. After all, we will have spent decades teaching AI systems how we humans behave in almost every situation.

Different, profoundly different

But still, their minds will be nothing like ours. To us, they will seem omniscient, linking to remote sensors of all kinds, in all places. In my 2020 book, Arrival Mind, I portray AGI as “having a billion eyes and ears,” for its perceptual abilities could easily span the globe. We humans can’t possibly imagine what it would feel like to perceive our world in such an expansive and holistic way, and yet we somehow presume a mind like this will share our morals, values, and sensibilities. It will not. Artificial minds will be profoundly different than any biological brains we know of on Earth – from their basic structure and functionality to their overall physiology and psychology. Of course, we will create human-like bodies for these alien minds to inhabit, but they will be little more than robotic façades to make ourselves feel comfortable in their presence. In fact, we humans will work very hard to make these aliens look like us and talk like us, even smile and laugh like us, but deep inside they will not be anything like us. Most likely, their brains will live in the cloud (fully or partially) connected to features and functions both inside and outside the humanoid forms that we personify them as.

Dense metallic hydrogen -- a phase of hydrogen which behaves like an electrical conductor -- makes up the interior of giant planets, but it is difficult to study and poorly understood. By combining artificial intelligence and quantum mechanics, researchers have found how hydrogen becomes a metal under the extreme pressure conditions of these planets. The researchers, from the University of Cambridge, IBM Research and EPFL, used machine learning to mimic the interactions between hydrogen atoms in order to overcome the size and timescale limitations of even the most powerful supercomputers. They found that instead of happening as a sudden, or first-order, transition, the hydrogen changes in a smooth and gradual way. The results are reported in the journal Nature. Hydrogen, consisting of one proton and one electron, is both the simplest and the most abundant element in the Universe. It is the dominant component of the interior of the giant planets in our solar system -- Jupiter, Saturn, Uranus, and Neptune -- as well as exoplanets orbiting other stars. At the surfaces of giant planets, hydrogen remains a molecular gas. Moving deeper into the interiors of giant planets however, the pressure exceeds millions of standard atmospheres. Under this extreme compression, hydrogen undergoes a phase transition: the covalent bonds inside hydrogen molecules break, and the gas becomes a metal that conducts electricity. "The existence of metallic hydrogen was theorised a century ago, but what we haven't known is how this process occurs, due to the difficulties in recreating the extreme pressure conditions of the interior of a giant planet in a laboratory setting, and the enormous complexities of predicting the behaviour of large hydrogen systems," said lead author Dr Bingqing Cheng from Cambridge's Cavendish Laboratory. Experimentalists have attempted to investigate dense hydrogen using a diamond anvil cell, in which two diamonds apply high pressure to a confined sample. 
Although diamond is the hardest substance on Earth, the device will fail under extreme pressure and high temperatures, especially when in contact with hydrogen, contrary to the claim that a diamond is forever. This makes the experiments both difficult and expensive. Theoretical studies are also challenging: although the motion of hydrogen atoms can be solved using equations based on quantum mechanics, the computational power needed to calculate the behaviour of systems with more than a few thousand atoms for longer than a few nanoseconds exceeds the capability of the world's largest and fastest supercomputers. It is commonly assumed that the transition of dense hydrogen is first-order, which is accompanied by abrupt changes in all physical properties. A common example of a first-order phase transition is boiling liquid water: once the liquid becomes a vapour, its appearance and behaviour completely change despite the fact that the temperature and the pressure remain the same. In the current theoretical study, Cheng and her colleagues used machine learning to mimic the interactions between hydrogen atoms, in order to overcome limitations of direct quantum mechanical calculations. "We reached a surprising conclusion and found evidence for a continuous molecular to atomic transition in the dense hydrogen fluid, instead of a first-order one," said Cheng, who is also a Junior Research Fellow at Trinity College. The transition is smooth because the associated 'critical point' is hidden. Critical points are ubiquitous in all phase transitions between fluids: all substances that can exist in two phases have critical points. A system with an exposed critical point, such as the one for vapour and liquid water, has clearly distinct phases. However, the dense hydrogen fluid, with the hidden critical point, can transform gradually and continuously between the molecular and the atomic phases. 
Furthermore, this hidden critical point also induces other unusual phenomena, including density and heat capacity maxima. The finding about the continuous transition provides a new way of interpreting the contradicting body of experiments on dense hydrogen. It also implies a smooth transition between insulating and metallic layers in giant gas planets. The study would not be possible without combining machine learning, quantum mechanics, and statistical mechanics. Without any doubt, this approach will uncover more physical insights about hydrogen systems in the future. As the next step, the researchers aim to answer the many open questions concerning the solid phase diagram of dense hydrogen.

If you've seen dental plaque or pond scum, you've met a biofilm. Among the oldest forms of life on Earth, these ubiquitous, slimy buildups of bacteria grow on nearly everything exposed to moisture and water. In recent months, Goddard researchers have begun investigating ways NASA could benefit from machine-learning techniques. Their projects run the gamut, everything from how machine learning could help in making real-time crop forecasts or locating wildfires and floods to identifying instrument anomalies. Other NASA researchers are tapping into these techniques to help identify hazardous Martian and lunar terrain, but no one is applying artificial intelligence to identify biofabrics in the field, said Heather Graham, a University of Maryland researcher who works at Goddard's Astrobiology Analytical Laboratory and is the brains behind the project. It is possible that these organisms live, or once lived, beneath or on the surface of Mars. NASA missions discovered gullies and lake beds, indicating that water once existed on this dry, hostile world. The presence of water is a prerequisite for life, at least on Earth. If life took form, its fossilized textures could be present on the surfaces of rocks. It's even possible that life could have survived on Mars below the surface, judging from some microbes on Earth that thrive miles underground. "It can take a couple hours to receive images taken by a Mars rover, even longer for more distant objects," Graham said, adding that researchers examine these images to determine whether a target is worth sampling. "Our idea of equipping a rover with sophisticated imaging and machine-learning technologies would give it some autonomy that would speed up our sampling cadence. This approach could be very, very useful."

Teaching algorithms to identify biofabrics begins first with a laser confocal microscope, a powerful tool that provides high-resolution, high-contrast images of three-dimensional objects. Recently acquired by Goddard's Materials Branch, the microscope is typically used to analyze materials used in spaceflight applications. While rock scanning wasn't the reason Goddard bought the tool, it fits the bill for this project due to its ability to acquire small-scale structure.

Kent scans rocks known to contain biofabrics and other textures left by life as well as those that don't. Machine-learning expert Burcu Kosar and her colleague, Tim McClanahan, plan to take those high-resolution images and feed them into commercially available machine-learning algorithms or models already used for feature recognition. "This is a huge, huge task. It's data hungry," Kosar said, adding that the more images she and McClanahan feed into the algorithms, the greater the chance of developing a highly accurate classifying system that a rover could use to identify potential lifeforms. "The goal is to create a functional classifier, combined with a good imager on a rover." The project is now being supported by Goddard's Internal Research and Development program and is a follow-on to an effort Kent initially started in 2018 under another Goddard research program. "What we did was take a few preliminary studies to determine if we could see different textures on rocks. We could see them," Kent said. "This is an extension of that effort. With the IRAD, we're taking tons of images and feeding them into the machine-learning algorithms to see if they can identify a difference. This technology holds a lot of promise."

Using a new type of AI design process, engineers at software company Autodesk and NASA’s Jet Propulsion Laboratory came up with a new interplanetary lander concept that could explore distant moons like Europa and Enceladus. Its slim design weighs less than most of the landers that NASA has already sent to other planets and moons. Autodesk announced its new innovative lander design today at the company’s conference in Las Vegas — revealing a spacecraft that looks like a spider woven from metal. The company says the idea to create the vehicle was sparked when Autodesk approached NASA to validate a lander prototype it had been working on. After looking at Autodesk’s work, JPL and the company decided to form a design team — comprised of five engineers from Autodesk and five from JPL — to come up with a new way to design landers. NASA is starting to think about ways to land on distant moons in our Solar System that may harbor oceans underneath their crusts. Saturn’s moon Enceladus is one such candidate, as it may have the right conditions to support life in its unseen waters. And NASA has already studied concepts for landers to touch down on the surface of Jupiter’s moon Europa, in order to sample the world’s ice to see if it might host life. The lander collaboration with Autodesk was mostly experimental, with JPL giving the company a very clear goal: figure out a way to reduce the weight of a deep-space lander. When it comes to space travel, the best materials to withstand the harshness of space are things like titanium and aluminum, but these metals can be heavy. And the more a vehicle weighs, the more difficult and more expensive it is to launch into space. So shaving pounds can help reduce the overall cost and complexity of a mission. Weight reduction also allows for the opportunity to add more instruments and sensors to a lander, to gather more valuable science data.

A machine learning method called “deep learning,” which has been widely used in face recognition and other image- and speech-recognition applications, has shown promise in helping astronomers analyze images of galaxies and understand how they form and evolve. In a new study, accepted for publication in Astrophysical Journal and available online, researchers used computer simulations of galaxy formation to train a deep learning algorithm, which then proved surprisingly good at analyzing images of galaxies from the Hubble Space Telescope. The researchers used output from the simulations to generate mock images of simulated galaxies as they would look in observations by the Hubble Space Telescope. The mock images were used to train the deep learning system to recognize three key phases of galaxy evolution previously identified in the simulations. The researchers then gave the system a large set of actual Hubble images to classify. The results showed a remarkable level of consistency in the neural network’s classifications of simulated and real galaxies. “We were not expecting it to be all that successful. I’m amazed at how powerful this is,” said coauthor Joel Primack, professor emeritus of physics and a member of the Santa Cruz Institute for Particle Physics (SCIPP) at UC Santa Cruz. “We know the simulations have limitations, so we don’t want to make too strong a claim. But we don’t think this is just a lucky fluke.” Galaxies are complex phenomena, changing their appearance as they evolve over billions of years, and images of galaxies can provide only snapshots in time. Astronomers can look deeper into the universe and thereby “back in time” to see earlier galaxies (because of the time it takes light to travel cosmic distances), but following the evolution of an individual galaxy over time is only possible in simulations. Comparing simulated galaxies to observed galaxies can reveal important details of the actual galaxies and their likely histories.

The advanced method could streamline the formerly manual technique. Despite vast developments in technology over the last few decades, our method for counting craters on the Moon hasn’t advanced much, with the human eye still being heavily relied on for identification. In an effort to eliminate the monotony of tracking lunar cavities and basins manually, a group of researchers at the University of Toronto Scarborough came up with an innovative technique that resulted in the discovery of 6,000 new craters. “Basically, we need to manually look at an image, locate and count the craters, and then calculate how large they are based off the size of the image. Here we’ve developed a technique from artificial intelligence that can automate this entire process that saves significant time and effort,” said Mohamad Ali-Dib, a postdoctoral fellow at University of Toronto’s Centre for Planetary Sciences and co-developer of the technology, in a news release. The method utilizes a convolutional neural network, the same machine learning algorithm used for computer vision and self-driving cars. The research team used data from elevation maps, collected by orbiting satellites, to train the algorithm on an area that covers two-thirds of the Moon’s surface. They then tested the technology on the remaining third, an area it hadn’t yet seen. The algorithm was able to map the unseen terrain with incredible accuracy and great detail. It identified twice as many craters as manual methods, with about 6,000 new lunar craters being discovered. “Tens of thousands of unidentified small craters are on the Moon, and it’s unrealistic for humans to efficiently characterize them all by eye,” said Ari Silburt, a former University of Toronto Department of Astronomy and Astrophysics grad student, who helped create the AI algorithm. 
“There’s real potential for machines to help identify these small craters and reveal undiscovered clues about the formation of our solar system.” Because the Moon doesn’t have flowing water, plate tectonics, or an atmosphere, its surface undergoes very little erosion. With its ancient craters remaining relatively intact, researchers are able to study factors like size, age, and impact to gain insight into our solar system’s evolution and the material distribution that occurred early on.
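Two steps described in this article lend themselves to a short sketch: holding out a third of the surface that the algorithm never sees during training, and converting a crater's size in pixels into a physical size from the known extent of the image. The code below is a hypothetical illustration only. The tiling by longitude, the `Tile` class and the example numbers are assumptions, not the Toronto team's actual data layout.

```python
from dataclasses import dataclass

@dataclass
class Tile:
    """A hypothetical strip of the lunar elevation map, bounded in longitude."""
    lon_min_deg: float
    lon_max_deg: float

def split_tiles(tiles, train_fraction=2 / 3):
    """Use the first two-thirds of tiles (by longitude) for training, the rest for testing."""
    ordered = sorted(tiles, key=lambda t: t.lon_min_deg)
    cut = round(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]

def crater_diameter_km(diameter_px, image_width_px, image_width_km):
    """Scale a pixel measurement by the physical width the image covers."""
    return diameter_px * image_width_km / image_width_px

# Twelve 30-degree strips covering all longitudes (illustrative only).
tiles = [Tile(lon, lon + 30.0) for lon in range(0, 360, 30)]
train_tiles, test_tiles = split_tiles(tiles)

# A crater spanning 20 pixels in a 1000-pixel-wide image that covers 500 km.
example_km = crater_diameter_km(20, 1000, 500)
print(len(train_tiles), len(test_tiles), example_km)
```

Splitting by region rather than at random keeps the test area entirely unseen, which matches the article's description of evaluating on terrain the algorithm "hadn't yet seen."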

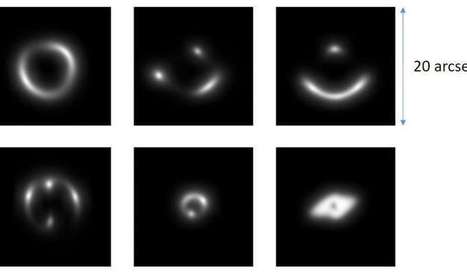

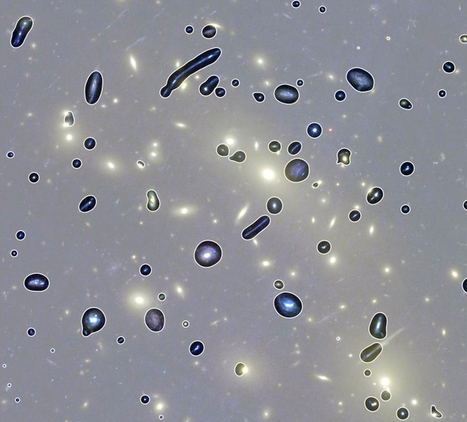

A group of astronomers from the universities of Groningen, Naples and Bonn has developed a method that finds gravitational lenses in enormous piles of observations. The hunt for gravitational lenses is painstaking. Astronomers have to sort thousands of images. They are assisted by enthusiastic volunteers around the world. So far, the search has more or less kept pace with the availability of new images. But thanks to new observations with special telescopes that image large sections of the sky, millions of images are being added. Humans cannot keep up with that pace. To tackle the growing number of images, the astronomers have used so-called 'convolutional neural networks'. Google employed such neural networks to win a match of Go against the world champion. Facebook uses them to recognize what is in the images of your timeline. And Tesla has been developing self-driving cars thanks to neural networks. The astronomers trained the neural network using millions of homemade images of gravitational lenses. Then they confronted the network with millions of images from a small patch of the sky. That patch had a surface area of 255 square degrees. That's just over half a percent of the sky.
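The "just over half a percent" figure can be checked with a few lines of arithmetic. The whole sky covers 4π steradians, and one steradian equals (180/π)² square degrees, so the full sky is about 41,253 square degrees. The function name below is only illustrative.

```python
import math

# Full sky: 4*pi steradians, with (180/pi)^2 square degrees per steradian.
FULL_SKY_SQ_DEG = 4 * math.pi * (180 / math.pi) ** 2   # about 41,253

def sky_fraction(area_sq_deg):
    """Fraction of the entire celestial sphere covered by a patch."""
    return area_sq_deg / FULL_SKY_SQ_DEG

fraction = sky_fraction(255)
print(f"{fraction:.2%}")   # 255 square degrees as a share of the whole sky
```

The result is roughly 0.6 percent, consistent with the article's description of the patch.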

A team of astronomers and computer scientists at the University of Hertfordshire have taught a machine to “see” astronomical images, using data from the Hubble Space Telescope Frontier Fields set of images of distant clusters of galaxies that contain several different types of galaxies. The technique, which uses a form of AI called unsupervised machine learning, allows galaxies to be automatically classified at high speed, something previously done by thousands of human volunteers in projects like Galaxy Zoo.

“We have not told the machine what to look for in the images, but instead taught it how to ‘see,’” said graduate student Alex Hocking. “Our aim is to deploy this tool on the next generation of giant imaging surveys where no human, or even group of humans, could closely inspect every piece of data. But this algorithm has a huge number of applications far beyond astronomy, and investigating these applications will be our next step,” said University of Hertfordshire Royal Society University Research Fellow James Geach, PhD.

The scientists are now looking for collaborators to make use of the technique in applications such as medicine, where it could, for example, help doctors to spot tumors, and security, where it could find suspicious items in airport scans.
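As a rough illustration of what "unsupervised" means here, the sketch below groups a handful of made-up measurements without ever being told which group each one belongs to. It uses plain k-means, chosen only because it is short. The article does not say which algorithm the Hertfordshire team used, and the feature names and values are hypothetical.

```python
# Illustrative only: plain k-means on made-up feature vectors, standing in
# for the (unspecified) unsupervised method described in the article.

def kmeans(points, centers, iterations=10):
    """Repeatedly assign points to the nearest center, then re-center."""
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centers]
            groups[distances.index(min(distances))].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else c
            for g, c in zip(groups, centers)
        ]
    return centers, groups

# Hypothetical (color index, light concentration) values for six galaxies.
galaxies = [
    (0.2, 0.3), (0.3, 0.2), (0.25, 0.25),
    (0.9, 0.8), (0.8, 0.9), (0.85, 0.85),
]

# Start from two of the data points; no labels are supplied anywhere.
centers, groups = kmeans(galaxies, centers=[galaxies[0], galaxies[3]])

for center, group in zip(centers, groups):
    print(center, group)
```

The two groups emerge from the data alone, which is the sense in which the machine is not told what to look for. Real survey images would first need to be reduced to feature vectors, a step this sketch skips.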

